sFlow Collector

What is an sFlow Collector?

sFlow (short for “sampled flow”) was originally developed by InMon to address the need for a common, universal standard for exporting Internet Protocol (IP) flow information from switches, routers, probes and other network devices. Flow-based network monitoring collects information about packet flows (sequences of related packets) as they traverse routers, switches, load balancers, ADCs, and other network devices, so that various network metrics can be monitored and analyzed.

sFlow is a packet sampling protocol (as opposed to NetFlow, which aggregates packets into per-session flow records). It exports a statistical sample of individual packets: for each sampled packet it sends the first 64 or 128 bytes of the raw packet, which covers the packet headers and the start of the payload. sFlow can also export additional metrics, such as interface counters, collected through time-based sampling of network interfaces. You can learn more about the differences between sFlow and NetFlow here.
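Because only 1 in N packets is sampled, totals have to be estimated by scaling the samples back up. The sketch below assumes each sample carries the original packet length and that the exporter's sampling rate N is known. The function and field names are hypothetical. The error figure uses the standard binomial approximation: the relative error shrinks roughly as 1/sqrt(c), where c is the number of samples.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class TrafficEstimate:
    packets: int          # estimated total packets seen by the exporter
    byte_count: int       # estimated total bytes seen by the exporter
    error_pct_95: float   # approximate 95% confidence error, in percent


def estimate_traffic(sampled_frame_lengths: list[int], sampling_rate: int) -> TrafficEstimate:
    """Scale 1-in-N packet samples up to an estimate of total traffic.

    sampled_frame_lengths: original length in bytes of each sampled packet
    sampling_rate: N, meaning the exporter samples 1 packet in N on average
    """
    c = len(sampled_frame_lengths)
    if c == 0:
        # No samples: nothing can be estimated, and the error is unbounded.
        return TrafficEstimate(0, 0, math.inf)
    packets = c * sampling_rate
    byte_count = sum(sampled_frame_lengths) * sampling_rate
    # With random 1-in-N sampling the sample count is roughly binomial, so the
    # relative standard error is about 1/sqrt(c); 1.96 of those is ~95%.
    error_pct = 196.0 * math.sqrt(1.0 / c)
    return TrafficEstimate(packets, byte_count, error_pct)


# Example: 100 sampled 1500-byte packets at a 1-in-1000 sampling rate.
est = estimate_traffic([1500] * 100, sampling_rate=1000)
print(est.packets, est.byte_count, round(est.error_pct_95, 1))  # 100000 150000000 19.6
```

The takeaway is that accuracy depends on how many samples were collected, not on the sampling rate itself. With 100 samples the estimate is within about 20% at 95% confidence. With 10,000 samples it is within about 2%.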

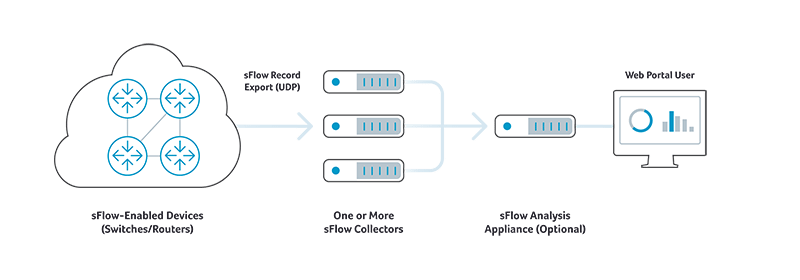

An sFlow collector is one of three typical functional components used for sFlow analysis:

- sFlow Exporter: an sFlow-enabled router, switch, probe or host software agent that randomly samples, on average, one out of every n packets. The exporter packages the samples into flow records and sends them over UDP to an sFlow collector.

- sFlow Collector: an application that receives sFlow datagrams from one or more flow exporters, then ingests, pre-processes and stores the flow records they contain.

- sFlow Analyzer: a software application that provides tabular, graphical and other tools and visualizations that let network operators and engineers analyze flow data for use cases such as network performance monitoring, network troubleshooting, and network capacity planning.
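On the wire, the exporter-to-collector hand-off is a stream of UDP datagrams, conventionally sent to port 6343. sFlow version 5 encodes each datagram in XDR, which uses big-endian 32-bit fields. The sketch below decodes only the datagram header and splits out the sample records, leaving their contents undecoded. The class and function names are hypothetical, and a production collector would also need to handle truncated input and the many sample and record formats.

```python
import ipaddress
import socket
import struct
from dataclasses import dataclass, field


@dataclass
class SFlowDatagram:
    version: int
    agent_address: str
    sub_agent_id: int
    sequence_number: int
    uptime_ms: int
    # One (enterprise, format, raw_bytes) tuple per sample record.
    # Enterprise 0 / format 1 is a flow sample, format 2 a counter sample.
    samples: list = field(default_factory=list)


def parse_sflow_v5(data: bytes) -> SFlowDatagram:
    """Decode an sFlow v5 datagram header and split out its sample records.

    Fields are XDR-encoded: big-endian unsigned 32-bit integers, with opaque
    data padded to a 4-byte boundary. Sample contents are left undecoded.
    """
    offset = 0

    def u32() -> int:
        nonlocal offset
        (value,) = struct.unpack_from(">I", data, offset)
        offset += 4
        return value

    version = u32()
    if version != 5:
        raise ValueError(f"unsupported sFlow version {version}")

    addr_type = u32()  # 1 = IPv4, 2 = IPv6
    if addr_type == 1:
        agent = str(ipaddress.IPv4Address(data[offset:offset + 4]))
        offset += 4
    elif addr_type == 2:
        agent = str(ipaddress.IPv6Address(data[offset:offset + 16]))
        offset += 16
    else:
        raise ValueError(f"unknown agent address type {addr_type}")

    sub_agent_id = u32()
    sequence_number = u32()
    uptime_ms = u32()
    dgram = SFlowDatagram(version, agent, sub_agent_id, sequence_number, uptime_ms)

    num_samples = u32()
    for _ in range(num_samples):
        data_format = u32()
        # Upper 20 bits: enterprise; lower 12 bits: sample format.
        enterprise, fmt = data_format >> 12, data_format & 0xFFF
        length = u32()
        dgram.samples.append((enterprise, fmt, data[offset:offset + length]))
        offset += (length + 3) & ~3  # skip the sample plus its XDR padding
    return dgram


def serve(port: int = 6343) -> None:
    """Receive datagrams on UDP 6343 (the standard sFlow port) and print them."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("0.0.0.0", port))
        while True:
            data, (exporter_ip, _) = sock.recvfrom(65535)
            print(exporter_ip, parse_sflow_v5(data))
```

The header's sequence number is worth keeping. It increments with each datagram an agent sends, so a collector can use gaps in it to detect datagrams lost in transit over UDP.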

Main Functions of an sFlow Collector

An sFlow collector’s main functions include:

- Ingesting sFlow UDP datagrams sent from one or more sFlow exporters

- Unpacking binary flow data into text/numeric formats

- Performing data volume reduction through selective filtering and aggregation

- Storing the resulting sFlow data in flat files or an SQL database for post-processing by sFlow analyzer applications

- Synchronizing flow data to an sFlow analyzer application running on a separate computing resource
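The data volume reduction step in the list above can be as simple as filtering out unwanted traffic, rolling samples up by a key, and dropping aggregates too small to matter. The sketch below assumes samples have already been decoded into a hypothetical `FlowSample` record. The choice of key, `(src_ip, dst_ip, dst_port)`, and the thresholds are illustrative only.

```python
from __future__ import annotations

from collections import Counter
from typing import Iterable, NamedTuple


class FlowSample(NamedTuple):
    # Hypothetical decoded record: a real collector would extract these
    # fields from the sampled packet header inside an sFlow flow sample.
    src_ip: str
    dst_ip: str
    dst_port: int
    frame_length: int   # original packet length in bytes
    sampling_rate: int  # N for this sample (1-in-N)


def aggregate(samples: Iterable[FlowSample], min_bytes: int = 0,
              exclude_ports: frozenset[int] = frozenset()) -> dict[tuple, int]:
    """Reduce raw samples to estimated bytes per (src_ip, dst_ip, dst_port)."""
    totals: Counter = Counter()
    for s in samples:
        if s.dst_port in exclude_ports:  # selective filtering
            continue
        # Scale each sample by its sampling rate to estimate real traffic.
        key = (s.src_ip, s.dst_ip, s.dst_port)
        totals[key] += s.frame_length * s.sampling_rate
    # Drop aggregates too small to be worth storing.
    return {k: v for k, v in totals.items() if v >= min_bytes}


samples = [
    FlowSample("10.0.0.1", "10.0.0.2", 443, 1500, 1000),
    FlowSample("10.0.0.1", "10.0.0.2", 443, 500, 1000),
    FlowSample("10.0.0.3", "10.0.0.2", 53, 100, 1000),
    FlowSample("10.0.0.4", "10.0.0.2", 22, 64, 1000),
]
print(aggregate(samples, min_bytes=200_000, exclude_ports=frozenset({22})))
# {('10.0.0.1', '10.0.0.2', 443): 2000000}
```

Four samples collapse into one stored row. The trade-off is that any dimension left out of the key, such as source port, can no longer be analyzed later.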

sFlow Collector and Analyzer applications are two functions of an sFlow analysis system or product. In some cases, the sFlow analysis product implements both functions on the same server. This is appropriate when the volume of flow data being generated by exporters is relatively low and localized. In cases where flow data generation is high or where sources are geographically dispersed, the collector function can be run on separate and geographically distributed servers (such as rackmount server appliances). In these cases, collectors then synchronize their data to a centralized analyzer server.

Using sFlow Collectors to Monitor Network Traffic and Identify Bandwidth Issues

The network flow data sent to an sFlow collector can be used in a wide variety of network monitoring, application monitoring, network planning, network troubleshooting and network security applications, including:

- Providing visibility into network and bandwidth usage by applications and users

- LAN/WAN and SD-WAN traffic measurement

- Troubleshooting and diagnosing network problems

- Detecting anomalous network traffic and illicit network usage (as in DDoS attacks)

- Measuring and monitoring quality of service (QoS) and other key performance indicators, and ensuring adherence to service-level agreements (SLAs)
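As a toy illustration of the anomaly-detection use case, the sketch below flags destinations whose current estimated traffic rate jumps far above a baseline, which is one crude signature of a volumetric DDoS attack. It is a simple threshold rule chosen for illustration, not a description of any particular product's detection method. The function name, the 10x factor and the 1 Mbit/s floor are all assumptions.

```python
from __future__ import annotations

from collections.abc import Mapping


def flag_anomalies(current: Mapping[str, float], baseline: Mapping[str, float],
                   factor: float = 10.0, floor: float = 1e6) -> list[str]:
    """Return destinations whose current estimated rate (bits/s) exceeds
    `factor` times max(baseline, floor).

    The floor keeps destinations with a tiny or missing baseline from being
    flagged over small absolute increases.
    """
    flagged = []
    for dst, rate in current.items():
        expected = max(baseline.get(dst, 0.0), floor)
        if rate > factor * expected:
            flagged.append(dst)
    return sorted(flagged)


current = {"198.51.100.7": 5e9, "203.0.113.9": 2e6, "192.0.2.50": 2e7}
baseline = {"198.51.100.7": 1e8, "203.0.113.9": 1.5e6}
print(flag_anomalies(current, baseline))  # ['192.0.2.50', '198.51.100.7']
```

In practice the `current` rates would come from scaled-up sFlow samples, and the baseline from historical data for the same destination and time of day.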

Approaches to sFlow Collection

Historically, the most common way to run sFlow collectors was on a physical, rackmounted Intel-based server running a Linux OS variant. More recently, flow collectors have been deployed on virtual machines. Unfortunately, in either case, limited compute and storage have severely restricted the amount of detailed data that could be retained or analyzed.

Most recently, a unified, cloud-scale approach to sFlow collector and analyzer architecture has emerged. In this architecture, a horizontally scalable big data system replaces physical or virtual collector and analyzer appliances. Big data systems allow for dramatically higher ingest volumes, longer data retention, deeper analytics and more powerful anomaly detection.
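One common way to scale ingest horizontally is to partition incoming records across many workers by a stable hash of a key, such as the exporter address. Each worker then handles a predictable slice of the traffic, and adding workers adds capacity. This is a generic technique shown for illustration. The function name and the choice of key are assumptions, and the source does not describe how any specific product partitions its data.

```python
from __future__ import annotations

import zlib
from collections import defaultdict
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")


def partition(records: Iterable[T], key: Callable[[T], str],
              num_workers: int) -> dict[int, list[T]]:
    """Assign each record to a worker by a stable hash of its key.

    zlib.crc32 is used rather than the built-in hash(), which is randomized
    per process for strings and would route the same key differently on
    different nodes.
    """
    shards: defaultdict[int, list[T]] = defaultdict(list)
    for rec in records:
        shard = zlib.crc32(key(rec).encode("utf-8")) % num_workers
        shards[shard].append(rec)
    return dict(shards)


records = [
    {"exporter": "10.0.0.1", "bytes": 1500},
    {"exporter": "10.0.0.2", "bytes": 64},
    {"exporter": "10.0.0.1", "bytes": 512},
]
shards = partition(records, key=lambda r: r["exporter"], num_workers=4)
```

Keying on the exporter keeps every datagram from one device on the same worker, which simplifies per-exporter state such as sequence-number tracking. The cost is that a single very busy exporter cannot be spread across workers.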

About Kentik’s sFlow Collection Features

Kentik offers the industry’s only big data-based, SaaS network observability solution that integrates network agent performance metrics with billions of NetFlow, sFlow, IPFIX, cloud flow log, and BGP records matched with geolocation and other forms of enrichment data. Kentik’s solution also incorporates synthetic monitoring features that allow for proactive monitoring of all types of networks.
