Big Data IPFIX Collectors

IPFIX: An Overview of Big Data IPFIX Collectors

IPFIX (IP Flow Information Export) is an IETF standard protocol, defined in RFC 7011, that is used to record statistical, infrastructure, routing and other information about IP traffic flows traversing an IPFIX-enabled router or switch. An IPFIX collector is one of three functional components typically used for IPFIX analysis:

- IPFIX Exporter: an IPFIX-enabled router, switch, probe or host software agent that tracks key statistics and other information about IP packet flows and generates flow records, which it sends to a flow collector, most commonly encapsulated in UDP (the IPFIX standard also defines SCTP and TCP transport).

- IPFIX Collector: an application that receives flow record packets from one or more flow exporters and then ingests, pre-processes and stores the data they contain.

- IPFIX Analyzer: a software application that provides tabular, graphical and other tools and visualizations to enable network operators and engineers to analyze flow data for various use cases, including network performance monitoring, troubleshooting, and capacity planning.
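Every IPFIX message that reaches a collector begins with the same fixed 16-byte header defined in RFC 7011: version number (always 10), total message length, export time, sequence number and observation domain ID. The minimal Python sketch below decodes that header with the standard `struct` module; the names `IpfixHeader` and `parse_ipfix_header` are hypothetical, chosen for illustration rather than taken from any collector product.

```python
import struct
from typing import NamedTuple

IPFIX_VERSION = 10          # RFC 7011: Version Number is 0x000a for IPFIX
HEADER_FORMAT = "!HHIII"    # network byte order: version, length, export time, sequence, domain
HEADER_LEN = struct.calcsize(HEADER_FORMAT)  # 16 octets


class IpfixHeader(NamedTuple):
    version: int
    length: int                 # total message length in octets, including this header
    export_time: int            # seconds since the UNIX epoch when the message left the exporter
    sequence_number: int        # count (mod 2**32) of data records sent before this message
    observation_domain_id: int  # observation domain, unique within the exporting process


def parse_ipfix_header(message: bytes) -> IpfixHeader:
    """Decode and sanity-check the fixed IPFIX message header (hypothetical helper)."""
    if len(message) < HEADER_LEN:
        raise ValueError("datagram shorter than an IPFIX header")
    header = IpfixHeader(*struct.unpack_from(HEADER_FORMAT, message))
    if header.version != IPFIX_VERSION:
        raise ValueError(f"unexpected version {header.version}")
    if not HEADER_LEN <= header.length <= len(message):
        raise ValueError("length field does not match datagram size")
    return header
```

For example, `parse_ipfix_header(struct.pack("!HHIII", 10, 16, 1_700_000_000, 42, 1))` returns a header with `version=10` and `sequence_number=42`. A real collector would use the length field to find where the message's sets end, and the sequence number to detect lost datagrams, which matters because UDP gives no delivery guarantee.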

IPFIX Collector Deployment Models

There are several deployment models for IPFIX collectors. The first model runs the IPFIX collector application on dedicated hardware, typically a rackmount server appliance. This is the most constrained model, because scaling to handle growing flow record volume requires deploying more hardware.

The second model is virtualized: IPFIX collectors are deployed as dedicated virtual versions of classic IPFIX collector appliances. A virtual IPFIX collector adds deployment flexibility, because it can run on virtualized servers either in a private data center or in the cloud. It also allows collectors to be spun up on demand, though in the vast majority of use cases flow record volume is generally constant, so capacity planning for IPFIX does not usually require bursting additional collectors.
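To see why flow record volume drives collector sizing, a back-of-envelope estimate of raw storage helps. The Python sketch below simply multiplies a flow rate by an average stored record size and a retention period; the inputs (50,000 flow records per second, 100 bytes per record) are hypothetical, and real sizes depend on the exporter's templates, compression, indexing and replication.

```python
SECONDS_PER_DAY = 86_400


def raw_storage_bytes(flows_per_sec: float, bytes_per_record: float, retention_days: float) -> float:
    """Back-of-envelope raw flow storage; ignores compression, indexes and replication."""
    return flows_per_sec * bytes_per_record * SECONDS_PER_DAY * retention_days


if __name__ == "__main__":
    # Hypothetical inputs: 50,000 flow records/s at an average of 100 bytes each.
    per_day = raw_storage_bytes(50_000, 100, 1)
    per_month = raw_storage_bytes(50_000, 100, 30)
    print(f"{per_day / 1e9:.0f} GB/day, {per_month / 1e12:.2f} TB for 30 days")
    # 432 GB/day, 12.96 TB for 30 days
```

Under those assumed inputs, one day of raw records is about 432 GB and 30 days about 13 TB, which suggests why a single appliance cannot hold raw data for long.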

One key similarity between physical and virtual IPFIX collectors is that both are generally designed in a monolithic fashion, which restricts their scalability and functional range. Each IPFIX collector must:

- Ingest UDP flow datagrams from one or more IPFIX-enabled devices

- Unpack binary flow data into text/numeric formats

- Store the resulting data in per-appliance flat files or a SQL database instance

- Synchronize flow data to the IPFIX analyzer application running on a separate computing resource
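The first three duties above (ingest, unpack, store) can be sketched in Python using only the standard library. This is a deliberately minimal sketch under several assumptions: it handles only Template Sets (Set ID 2) and Data Sets (Set ID 256 and above), ignores options templates and variable-length fields, skips data whose template it has not yet seen, and stores a handful of IPv4 fields from the IANA Information Element registry in a local SQLite database standing in for the per-appliance store. The names `handle_message`, `serve` and the `flows` table are hypothetical.

```python
import ipaddress
import socket
import sqlite3
import struct

# Illustrative subset of IANA IPFIX Information Element IDs mapped to column names.
IE_NAMES = {1: "octets", 2: "packets", 4: "proto", 7: "src_port",
            8: "src_addr", 11: "dst_port", 12: "dst_addr"}
COLUMNS = ("src_addr", "dst_addr", "src_port", "dst_port", "proto", "packets", "octets")
SCHEMA = ("CREATE TABLE IF NOT EXISTS flows (exporter TEXT, domain INTEGER, "
          "src_addr TEXT, dst_addr TEXT, src_port INTEGER, dst_port INTEGER, "
          "proto INTEGER, packets INTEGER, octets INTEGER)")
INSERT = ("INSERT INTO flows VALUES (:exporter, :domain, :src_addr, :dst_addr, "
          ":src_port, :dst_port, :proto, :packets, :octets)")


def handle_message(msg: bytes, exporter: str, templates: dict,
                   db: sqlite3.Connection) -> int:
    """Unpack one IPFIX message; cache templates, store data records. Returns rows stored."""
    version, length, _export_time, _seq, domain = struct.unpack_from("!HHIII", msg, 0)
    if version != 10 or length > len(msg):
        raise ValueError("not a complete IPFIX message")
    offset, stored = 16, 0
    while offset + 4 <= length:
        set_id, set_len = struct.unpack_from("!HH", msg, offset)
        body, end = offset + 4, offset + set_len
        if set_len < 4 or end > length:
            raise ValueError("malformed set")
        if set_id == 2:  # Template Set: learn record layouts
            while body + 4 <= end:
                tid, count = struct.unpack_from("!HH", msg, body)
                body += 4
                fields = []
                for _ in range(count):
                    ie, flen = struct.unpack_from("!HH", msg, body)
                    body += 4
                    if flen == 0xFFFF:
                        raise NotImplementedError("variable-length fields not handled")
                    if ie & 0x8000:  # enterprise-specific IE: skip its enterprise number
                        body += 4
                        ie = None    # keep the length, but never map it to a column
                    fields.append((ie, flen))
                templates[(exporter, domain, tid)] = fields
        elif set_id >= 256 and (exporter, domain, set_id) in templates:
            fields = templates[(exporter, domain, set_id)]
            rec_len = sum(flen for _, flen in fields)
            while rec_len and body + rec_len <= end:  # any trailing bytes are padding
                row = dict.fromkeys(COLUMNS)  # unmapped columns stay NULL
                for ie, flen in fields:
                    raw, body = msg[body:body + flen], body + flen
                    name = IE_NAMES.get(ie)
                    if name in ("src_addr", "dst_addr"):
                        row[name] = str(ipaddress.IPv4Address(raw))
                    elif name:
                        row[name] = int.from_bytes(raw, "big")
                db.execute(INSERT, {"exporter": exporter, "domain": domain, **row})
                stored += 1
        offset = end  # sets with unknown templates are skipped
    return stored


def serve(host: str = "0.0.0.0", port: int = 4739, db_path: str = "flows.db") -> None:
    """Blocking UDP receive loop; 4739 is the IANA-registered IPFIX port."""
    db = sqlite3.connect(db_path)
    db.execute(SCHEMA)
    templates: dict = {}
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        while True:
            data, (addr, _port) = sock.recvfrom(65535)
            try:
                handle_message(data, addr, templates, db)
                db.commit()
            except (ValueError, NotImplementedError, struct.error) as exc:
                print(f"dropped datagram from {addr}: {exc}")
```

Templates are cached per exporter address and observation domain because IPFIX template IDs are only meaningful within that exporter's session. Over UDP, exporters resend templates periodically, so a collector that starts mid-stream can decode data once the next template arrives.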

This monolithic design severely constrains how appliances can handle high volumes of flow data. As a result, selected fields from the raw flows are rolled up into a number of summary tables. Raw flow data is retained for only a short window and then discarded, both to save storage and because a single appliance has only enough compute power to post-process a small amount of raw data at any time.
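The trade-off in rolling raw flows up into summary tables can be shown with a small Python sketch. The `FlowRecord` fields, the one-minute bucket and the retention window are assumptions chosen for illustration; the point is that any field left out of the summary key (here, the destination port) cannot be recovered once the raw records are pruned.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class FlowRecord:
    ts: int        # flow end time, seconds since the UNIX epoch (assumed field)
    src_addr: str
    dst_addr: str
    dst_port: int
    octets: int


def roll_up(records: Iterable[FlowRecord], bucket_secs: int = 60) -> Dict[Tuple[int, str, str], int]:
    """Sum octets per (time bucket, source, destination); all other fields are dropped."""
    summary: Dict[Tuple[int, str, str], int] = defaultdict(int)
    for r in records:
        bucket = r.ts - r.ts % bucket_secs  # start of the bucket containing r.ts
        summary[(bucket, r.src_addr, r.dst_addr)] += r.octets
    return dict(summary)


def prune_raw(records: List[FlowRecord], now: int, retention_secs: int = 3600) -> List[FlowRecord]:
    """Keep only raw records inside the short retention window."""
    cutoff = now - retention_secs
    return [r for r in records if r.ts >= cutoff]


if __name__ == "__main__":
    raw = [
        FlowRecord(1000, "10.0.0.1", "10.0.0.2", 443, 500),
        FlowRecord(1010, "10.0.0.1", "10.0.0.2", 80, 700),
        FlowRecord(1090, "10.0.0.3", "10.0.0.2", 53, 100),
    ]
    print(roll_up(raw))
    # {(960, '10.0.0.1', '10.0.0.2'): 1200, (1080, '10.0.0.3', '10.0.0.2'): 100}
    print(len(prune_raw(raw, now=1100, retention_secs=60)))  # 1
```

The two flows from 10.0.0.1 to 10.0.0.2 on ports 443 and 80 collapse into a single 1,200-byte summary row, so once the raw records age out, a question such as how much of that traffic was HTTPS can no longer be answered.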

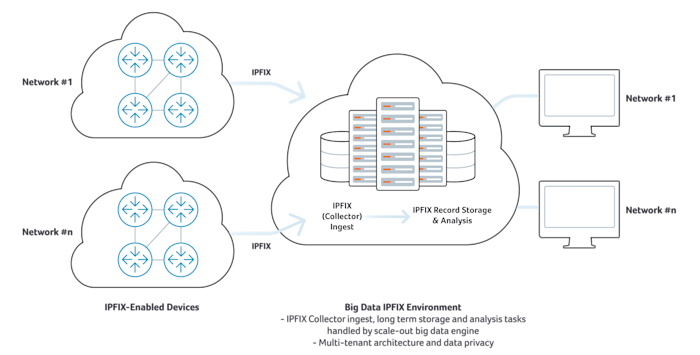

Big Data IPFIX Collectors: Designed Differently

A big data IPFIX collector takes a different architectural approach. In this model, clusters of computing and storage resources can be scaled out separately for different purposes. For example, a big data platform can dedicate a scale-out cluster to ingesting and pre-processing flow data in a way that preserves all raw flow fields. Rather than relying on local storage in each appliance, it can use a separate storage cluster, which allows deep retention of raw records. Yet another cluster can run queries against the storage layer on behalf of GUI users or API calls. This approach lets capacity be scaled flexibly to meet stringent performance requirements for queries against large data sets, even as flow record ingest volumes and query rates grow significantly.
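One common way to split work across such clusters is to hash-partition incoming flows and answer queries by scatter-gather: each storage node computes a partial aggregate over its own shard, and the query tier merges the partials. The Python sketch below simulates that in a single process; the record layout, the choice to shard by exporter address and the function names are assumptions for illustration, not a description of how any particular big data platform or Kentik product works.

```python
import hashlib
from collections import Counter
from typing import Dict, List, Tuple

Flow = Tuple[str, str, int]  # hypothetical record: (exporter, dst_addr, octets)


def shard_for(exporter: str, num_shards: int) -> int:
    """Stable hash: all flows from one exporter go to the same ingest/storage node."""
    digest = hashlib.sha256(exporter.encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def partition(flows: List[Flow], num_shards: int) -> Dict[int, List[Flow]]:
    """Ingest tier: route each flow to its shard."""
    shards: Dict[int, List[Flow]] = {i: [] for i in range(num_shards)}
    for flow in flows:
        shards[shard_for(flow[0], num_shards)].append(flow)
    return shards


def shard_bytes_by_dst(rows: List[Flow]) -> Counter:
    """Storage tier: each node computes a partial aggregate over its own rows."""
    partial: Counter = Counter()
    for _exporter, dst_addr, octets in rows:
        partial[dst_addr] += octets
    return partial


def top_destinations(shards: Dict[int, List[Flow]], n: int = 2) -> List[Tuple[str, int]]:
    """Query tier: scatter the aggregate to every shard, then merge the partials."""
    total: Counter = Counter()
    for rows in shards.values():
        total.update(shard_bytes_by_dst(rows))  # Counter.update adds counts
    return total.most_common(n)


if __name__ == "__main__":
    flows = [("192.0.2.1", "10.0.0.2", 500), ("192.0.2.2", "10.0.0.2", 700),
             ("192.0.2.3", "10.0.0.9", 100), ("192.0.2.1", "10.0.0.9", 50)]
    print(top_destinations(partition(flows, num_shards=3)))
    # [('10.0.0.2', 1200), ('10.0.0.9', 150)]
```

A cryptographic hash is used instead of Python's built-in `hash()` because string hashing is randomized per process, and routing must be stable across nodes and restarts. Sharding by exporter keeps each exporter's datagrams, and therefore its template cache, on one ingest node, at the cost of uneven load when a few exporters dominate traffic; real systems often partition storage by time as well.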

Big data architectures can be built on a variety of open source software platforms, such as Hadoop, the ELK stack (Elasticsearch, Logstash, Kibana) and others. There is a major difference, however, between big data IPFIX collection and analysis built for real-time, operational use cases and systems built only for post-processing and planning. Operational use of IPFIX data requires both high scale and low latency at every functional point: ingest scale, time until newly ingested data can be queried, and query response time. Planning-only use cases may not require low latency at any point in the collection and analysis/query stages.

Big data IPFIX collectors and analysis engines can be built for single- or multi-tenant use. Most open source big data platforms are built as single-tenant engines, whereas SaaS big data engines require multi-tenancy.

Big Data IPFIX collectors are designed to meet the scale, flexibility and response time needs of network operators and planners. Kentik offers the industry’s only SaaS-based, big data NetFlow, IPFIX and sFlow analysis solution built for network operations speed and scale.