Unlocking the Power of Embedded CDNs: A Comprehensive Guide to Deployment Scenarios and Optimal Use Cases

Summary

This guide explores the benefits of embedded caching for ISPs and discusses deployment optimization strategies and future trends in CDN technology. Embedded CDNs help reduce network congestion, save costs, and improve user experiences. ISPs must carefully plan their deployment strategies by considering how each of the CDNs distributes content and directs end-users to the caches. They need to know both the CDNs and their network architecture in detail to build a successful solution.

In today’s fast-paced digital world, content distribution networks (CDNs) and embedded caching play a vital role in delivering a high-quality user experience. In this post, we will explore the benefits of embedded caching for internet service providers (ISPs) and discuss various strategies for optimizing the deployment of embedded caches in their networks. Additionally, we will take a closer look at the future developments and trends in CDN technology to provide a comprehensive understanding of this dynamic industry.

Previously we wrote about how embedded CDNs work. In this post, we will dive deeper into when it makes sense for ISPs to deploy embedded caches, the potential benefits, and how you can optimize the deployment to your network.

What is a CDN?

Content distribution networks are a type of network that emerged in the 90s, early in the internet’s history, when content on the internet grew “richer” – moving from text to images and video. Akamai was one of the first CDNs and remains a strong player in today’s market.

A high-level definition of a CDN is:

- A collection of geographically distributed caches

- A method to place content on the caches

- A technique to steer end-users to the closest caches

The purpose of a CDN is to place the data-heavy or latency-sensitive content as close to the content consumers as possible – in whatever sense close means. (More on that later.)

What is embedding?

The need to have the content close to the content consumers fostered the idea of placing caches belonging to the CDN inside the access network’s border. This idea was novel and still challenges the mindset of network operators today.

We call such caches embedded caches. They are usually intended only to serve end users in that network. These caches often use address space originating in the ISP’s ASN, not the CDN’s.

Why do CDNs offer this to ISPs?

There are several reasons for a CDN to offer embedded caches to ISPs. For one, it does bring the content closer to the end users, which increases the quality of the product the CDN provides their customers. This is just the CDN idea taken a bit further.

Secondly, offering ISPs caches can strengthen the relationship between the CDN and the ISP and foster collaboration that can lead to service improvements for both parties. It is not a secret that the traffic volumes served by the CDNs can be a burden on the network, and the embedded solution can be viewed as a way for the CDNs to play their part in the supply chain of delivering over-the-top services instead of paying the ISPs for the traffic.


Sometimes there is a perception that the embedded solution is about saving money for space and power needed to host the servers, but this is mostly a misunderstanding. It is far more efficient for most CDNs to operate large clusters in fewer locations than to operate a large number of small clusters.

So, what is in it for the ISP?

In general, the ISP also benefits from bringing the content closer to the end users. Still, the question is whether bringing it inside their network edge provides enough benefit to justify the extra cost of hosting and operating the CDN caches.

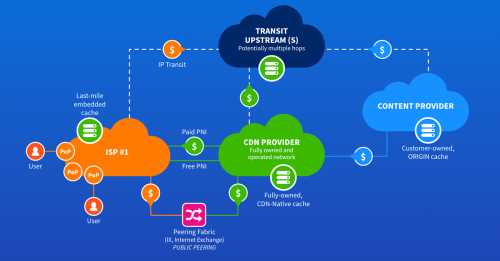

Let’s look at how end-user ISPs and content reach each other. In this diagram, we show where money typically flows between the parties and where traffic flows. Let’s explore when there is a benefit for the ISP to deploy embedded caches.

- The CDN traffic is running on the ISP’s transit connections, and peering is not possible for some reason or another.

- The CDN and the ISP peer over an IXP, the volume drives port upgrades, and private connections are impossible.

- The CDN and the ISP peer, but in a limited number of locations that are not close to a large number of consumers of the content from the CDN, so the traffic travels a long distance internally in the ISP’s network.

The first two scenarios are straightforward. Removing traffic from the edge will save money on transit and IXP port fees and lower the risk of congestion. The business case can directly compare the saved cost and the estimated cost of space and power for the embedded servers. More on what to consider when building the business case later.
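The comparison for the first two scenarios can be sketched in a few lines of Python. This is only an illustration: the class and function names are hypothetical, every price and traffic figure is a placeholder, and a real business case would also need the offload ratio the CDN expects for your network.

```python
from dataclasses import dataclass


@dataclass
class EdgeCosts:
    """Monthly cost inputs; every figure passed in is a placeholder."""
    price_per_mbps: float      # transit or IXP port cost per Mbps of peak traffic
    hosting_per_server: float  # space and power per embedded server


def monthly_net_saving(peak_mbps: float, offload_ratio: float,
                       servers: int, costs: EdgeCosts) -> float:
    """Saved edge cost minus the hosting cost of the embedded servers."""
    offloaded_mbps = peak_mbps * offload_ratio
    saved = offloaded_mbps * costs.price_per_mbps
    hosting = servers * costs.hosting_per_server
    return saved - hosting


# Placeholder numbers, not measurements from any real network.
costs = EdgeCosts(price_per_mbps=0.25, hosting_per_server=400.0)
saving = monthly_net_saving(peak_mbps=40_000, offload_ratio=0.5,
                            servers=4, costs=costs)
print(f"Net monthly saving: {saving:.2f}")
```

A negative result means the hosting cost exceeds what is saved at the edge, so the deployment does not pay for itself on transit or port fees alone.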

Let’s have a look at the third case. We can roughly split that into two different issues.

The first issue: The peering and transit sites are far away from the majority of the end users, and there is a high cost of building the connectivity to those sites. For example, there is the cost of transport capacity or dark fiber and housing on top of the interface-related costs. Working out whether a deployment makes sense here is similar to the first two scenarios above: compare the saved cost with the cost of hosting the embedded servers.

The second issue: End users in the network are spread out, and the traffic is causing a heavy load on the ISP’s internal backbone. This one is trickier to evaluate.

But how about we just put a cache at each location where the end users are terminated and be done with it?

Challenges with a distributed deployment of embedded caches

This might work for some CDNs. Unfortunately, traffic will usually still flow on the backbone links, since the end-user mapping is most likely not granular enough to support a super-distributed deployment.

This is due to how end users are directed to the closest cache and how the closest cache is defined in the CDN. And guess what? The CDNs do not all do this the same way 🙂.

We touched on this in our earlier blog post, but let’s look at potential solutions to challenges the end-user mappings create for distributed deployments of embedded caches.

Getting end users to the closest cache using BGP

Open Connect from Netflix stands out from most of the other players in this matter. They heavily rely on BGP (Border Gateway Protocol, the protocol that networks use to exchange routes) to define which cache an end user is directed to.

A little about BGP:

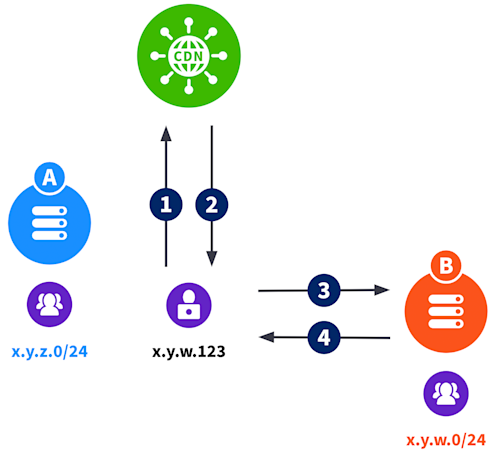

- BGP is used to signal to a cache which IP ranges it should serve

- A setup where all caches have identical announcements will work for most deployments since the next tie-breaker is the geolocation information for the end user’s IP address.

- Prefixes are sent from the ISP to the caches.

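In the diagram, the prefixes are announced as follows:

- x.y.z.0/24 is announced to cache A

- x.y.w.0/24 is announced to cache B

The numbered steps in the diagram show the request flow:

1. The end user asks the CDN for a movie ("Give me movie").

2. The CDN replies with the cache to use ("Go to B and get movie").

3. The end user asks cache B for the movie ("Give me movie").

4. Cache B delivers the movie.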

Open Connect is unique among the CDNs since they do not rely on the DNS system to direct the end user to the suitable cache. The request to play a movie is made to the CDN, which replies with an IP address for the cache that will serve the movie without using the DNS system.
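The prefix-to-cache mapping can be illustrated with a small Python sketch. It assumes that the most specific announced prefix covering the end user's address wins. The announcement table uses documentation address ranges, and the names are hypothetical. It does not model the geolocation tie-breaker or any other part of a real CDN's steering logic.

```python
import ipaddress
from typing import Mapping, Optional

# Hypothetical announcements: prefix sent by the ISP -> cache that received it.
ANNOUNCEMENTS = {
    "198.51.100.0/24": "A",
    "203.0.113.0/24": "B",
}


def pick_cache(client_ip: str, announcements: Mapping[str, str]) -> Optional[str]:
    """Return the cache whose most specific announced prefix covers the client."""
    addr = ipaddress.ip_address(client_ip)
    best = None
    for prefix, cache in announcements.items():
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, cache)
    # None means no announcement matched; a real CDN would fall back to
    # other criteria, which this sketch does not cover.
    return best[1] if best else None


print(pick_cache("203.0.113.9", ANNOUNCEMENTS))
```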

Some of the other CDNs will also use BGP communities tagged on the routes from the ISP to map end users to clusters.

The BGP method works well when the ISP has a regionalized IP address plan such that each region uses its own set of prefixes, which can be announced to the CDN's caches in that region. However, this is not always possible – sometimes, the entity that deploys the caches does not have control over the address plan for the access network.

Some ISPs who decided to go all-in on embedded caches and built a large number of locations for caches solved this problem by implementing centralized route servers for the BGP sessions to the caches. These route servers communicate with a back-end system that keeps track of which IP addresses are in use where, such that the correct IP addresses are announced to the suitable clusters (or tagged with the right community). Interestingly, the benefit of a distributed deployment in these extensive networks justifies the investment in developing and maintaining this system.
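The core of such a back-end can be sketched as a function that turns "which address pool is in use at which access site" into a per-cluster announcement list. Everything here is an assumption made for illustration: the site and cluster names, the input format, and the choice to aggregate adjacent prefixes. The sketch handles IPv4 pools only and says nothing about how the route servers themselves are configured.

```python
import ipaddress
from collections import defaultdict
from typing import Dict, List, Mapping


def build_announcements(pool_sites: Mapping[str, str],
                        site_cluster: Mapping[str, str]) -> Dict[str, List[str]]:
    """Group in-use pools by the cache cluster serving their access site."""
    per_cluster = defaultdict(list)
    for prefix, site in pool_sites.items():
        cluster = site_cluster.get(site)
        if cluster is not None:  # pools at sites without a cluster are skipped
            per_cluster[cluster].append(ipaddress.ip_network(prefix))
    # Merge adjacent prefixes so each cluster receives as few routes as possible.
    return {
        cluster: [str(net) for net in ipaddress.collapse_addresses(nets)]
        for cluster, nets in per_cluster.items()
    }


# Hypothetical inventory data (documentation address ranges).
POOL_SITES = {
    "198.51.100.0/25": "site-north-1",
    "198.51.100.128/25": "site-north-2",
    "203.0.113.0/24": "site-south-1",
}
SITE_CLUSTER = {
    "site-north-1": "cluster-north",
    "site-north-2": "cluster-north",
    "site-south-1": "cluster-south",
}

ANNOUNCE = build_announcements(POOL_SITES, SITE_CLUSTER)
print(ANNOUNCE)
```

When a pool moves to another site, rerunning the function with the updated inventory gives the new announcement set for each cluster.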

DNS server dependency

Most CDNs map end users to a cache based on the DNS server the end user uses to look up the content: the address of that DNS server is mapped to a cache or a cache location.

This means that if an ISP wants to deploy embedded caches from such a CDN, they must also dedicate DNS servers to each region they will divide their network into.

There are more efficient ways of operating DNS resolvers, so are there any workarounds available?

Many embedded CDNs support EDNS0 Client Subnet (ECS), an optional extension to the DNS protocol published in RFC 7871. If an ISP enables ECS on their DNS resolvers, the DNS request will contain information about the request’s source: the end user’s IP address, usually truncated to a prefix rather than sent in full. This way, the CDN can use that prefix to map the end user to a cache server location, providing a more granular mapping than one based on the DNS server alone.
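To make the ECS idea concrete, the sketch below derives the client subnet a resolver could attach to a query and uses it for a lookup on the CDN side. The /24 and /56 defaults are the truncation lengths RFC 7871 recommends. The subnet-to-location table and the function names are hypothetical, and real CDNs combine this with other signals.

```python
import ipaddress


def ecs_subnet(client_ip: str, v4_len: int = 24, v6_len: int = 56) -> str:
    """Truncate a client address to the prefix carried in the ECS option."""
    addr = ipaddress.ip_address(client_ip)
    length = v4_len if addr.version == 4 else v6_len
    return str(ipaddress.ip_network(f"{addr}/{length}", strict=False))


# Hypothetical CDN-side table: client subnet -> cache location.
LOCATION_BY_SUBNET = {
    "198.51.100.0/24": "north",
    "203.0.113.0/24": "south",
}


def location_for(client_ip: str, resolver_default: str = "central") -> str:
    """Use the ECS subnet when it is known, else the resolver-based default."""
    return LOCATION_BY_SUBNET.get(ecs_subnet(client_ip), resolver_default)


print(ecs_subnet("198.51.100.77"), location_for("198.51.100.77"))
```

Two end users behind the same resolver can now land on different cache locations, which is the granularity the resolver-only mapping lacks.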

When enabling ECS, the method of mapping the end user to a cluster varies from clean geolocation to a mix of geolocation, QoS measurements of the prefixes of the ISP, and the method mentioned above.

If end users decide to use one of the publicly available DNS services instead of the one provided by their ISP, this might mess up the end-user mapping. 8.8.8.8 supports ECS, but 1.1.1.1 is a service created to preserve privacy, so here, ECS is only supported for debugging purposes.

Now we have determined where to offload traffic and whether our network supports a widely distributed deployment or if a centralized deployment makes better sense. The next thing to investigate is how much traffic will be moved to the embedded solution.

Building the business case

The first step is to measure the total traffic to each potential region where a cache cluster could make sense. A good flow tool like Kentik is crucial unless the CDN is able and willing to help you with the detailed analysis. In our blog, The peering coordinator’s toolbox part 2, we discussed how to use Kentik to analyze the traffic going to different regions in your network.
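If you only have raw samples exported from a flow tool, the per-region measurement can be approximated as below. The record layout (time bucket, region, Mbps) is an assumption made for this sketch and not the export format of Kentik or any other product, and the sample values are made up.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Sample = Tuple[str, str, float]  # (time bucket, region, Mbps from the CDN)


def peak_mbps_per_region(samples: Iterable[Sample]) -> Dict[str, float]:
    """Sum the samples per time bucket and region, then keep each region's peak."""
    per_bucket = defaultdict(float)
    for bucket, region, mbps in samples:
        per_bucket[(bucket, region)] += mbps
    peaks = defaultdict(float)
    for (_bucket, region), total in per_bucket.items():
        peaks[region] = max(peaks[region], total)
    return dict(peaks)


# Made-up samples for illustration only.
SAMPLES = [
    ("20:00", "north", 300.0),
    ("20:00", "north", 200.0),
    ("21:00", "north", 450.0),
    ("20:00", "south", 100.0),
    ("21:00", "south", 150.0),
]

PEAKS = peak_mbps_per_region(SAMPLES)
print(PEAKS)
```

The peak per region, rather than the average, is what drives port and backbone capacity, so it is the more useful input when sizing a cache cluster.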

In our earlier blog about embedded CDNs, we described how the different methods of distributing content to the caches determine the offload/hit rate. CDNs such as Netflix’s Open Connect, which determines the required file locations beforehand, can distribute content to caches during periods of low demand. In contrast, pull-based CDNs distribute content to caches on demand, as end users request it, which includes the periods when demand is high. Both types of CDNs have a long tail of content that will never be cached on the embedded caches, so there is no general rule for calculating the offload.

However, CDNs built to serve a single content service have a very high offload. Commercial CDNs, by contrast, depend heavily on variations in demand and on the mix of content in each ISP, so there is no general offload number that can be achieved. A common denominator is that the higher the demand for the content, the better the offload from a deployment. Higher demand means the need for bandwidth will justify more servers, which in turn means more disk space will be available to cache more content.

Without a general calculation, the best way to make this business case is in close dialogue with the CDNs you are considering embedding.

Future developments and trends

As technology advances, CDNs and embedded caching are evolving to meet the growing demands of internet users. Some future developments and trends to watch for include:

- Edge computing: With the rise of edge computing, CDNs are expected to extend their networks further, bringing content closer to users by deploying caches at the edge of the network. This will help reduce latency and improve the user experience, particularly for latency-sensitive gaming and virtual reality applications.

- Artificial intelligence (AI) and machine learning (ML): AI and ML technologies are expected to optimize CDN operations significantly. These technologies can help predict traffic patterns, automate cache placement decisions, and improve content delivery algorithms, leading to more efficient and cost-effective CDN operations.

- 5G and IoT: The widespread adoption of 5G and the Internet of Things (IoT) will increase connected devices and data traffic. CDNs must adapt and scale their infrastructure to accommodate this growth, including deploying embedded caches to manage the increased traffic and maintain high-quality content delivery.

Since more CDNs have started offering embedded solutions over the past year, it seems likely that even more will decide to move in that direction to support the new use cases.

Conclusion

In summary, embedded caching offers numerous benefits to ISPs, including reduced network congestion, cost savings, and improved user experiences. To fully harness these benefits, ISPs must carefully plan and optimize their deployment strategies while staying informed of future developments and trends in CDN technology. By doing so, ISPs can ensure they are well prepared to meet the growing demands of their users and thrive in the ever-evolving digital landscape.