Latency vs Throughput vs Bandwidth: Unraveling the Complexities of Network Speed

As enterprise and internet service provider networks become more complex and users increasingly rely on seamless connectivity, understanding and optimizing the speed of data transfers within a network has become crucial. Latency, throughput, and bandwidth are key network performance metrics that provide insights into a network’s “speed.” Yet, these terms often lead to confusion as they are intricately related but fundamentally different. This article will shed light on these concepts and delve into their interconnected dynamics.

Network Speed: Three Important Metrics

Network speed is often a blanket term for how fast data can travel through a network. However, it is not a singular, one-dimensional attribute but a combination of several factors. Three primary metrics defining network speed are latency, throughput, and bandwidth:

Latency

Latency is the time it takes for a packet of data to travel from one point in the network to another. It measures the delay a packet experiences, not the volume of data moved. Latency is often reported as round-trip time (RTT): the time for a packet to travel from its source to its destination plus the time for the response to return to the source. A network with low latency has less delay and therefore seems faster to the end user.

Throughput

Throughput, on the other hand, is the amount of data that successfully travels through the network over a specified period. It reflects the network’s capacity to handle data transfer, often seen as the actual speed of the network. Throughput depends on several factors, including the network’s physical infrastructure, the number of concurrent users, and the type of data being transferred.

Bandwidth

Bandwidth is the maximum data transfer capacity of a network. It defines the theoretical limit of data that can be transmitted over the network in a given time. Bandwidth is often compared to the width of a road, determining the maximum number of vehicles (or data packets in a network) that can travel simultaneously.

The Relationship Between Latency, Throughput, and Bandwidth

While latency, throughput, and bandwidth are distinct concepts, their interrelationships are pivotal in understanding network speed. They are often viewed together to assess a network’s performance.

Although maximum bandwidth indicates the highest possible data transfer capacity, it doesn’t necessarily translate to the speed at which data moves in the network. That’s where throughput comes in — it reflects the actual data transfer rate.

Latency is another crucial factor that impacts the speed at which data is delivered, regardless of throughput. High latency can slow down data delivery even on a high-throughput network because data packets take longer to reach their destination. Conversely, lower latency allows data to reach its destination more quickly, making the network feel faster to users even if the throughput is not exceptionally high.

In certain scenarios, latency and throughput are inversely related. For example, a protocol that waits for acknowledgments before sending more data (such as TCP with a fixed window) can move only a limited amount of data per round trip, so a shorter round-trip time allows higher throughput. However, it’s important to understand that this isn’t a strict relationship. A network can have high throughput and high latency at the same time, or low latency and low throughput.

This relationship can be complicated by various factors. For example, a network with high bandwidth can still experience low throughput if the latency is high due to network congestion, inefficient routing, or physical distance. Likewise, a network with high throughput might still deliver a poor user experience if the network suffers from high latency.
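One way to make the interaction between bandwidth and latency concrete is the bandwidth-delay product: the amount of data that must be in flight to keep a link full. The sketch below is illustrative only; the function name and the sample link figures are assumptions, not values from this article.

```python
def bandwidth_delay_product_bytes(bandwidth_bps: float, rtt_seconds: float) -> float:
    """Bytes that must be sent but not yet acknowledged to keep the link full."""
    if bandwidth_bps < 0 or rtt_seconds < 0:
        raise ValueError("bandwidth_bps and rtt_seconds must be non-negative")
    # bits per second * seconds = bits in flight; divide by 8 for bytes
    return bandwidth_bps * rtt_seconds / 8


if __name__ == "__main__":
    # Hypothetical link: 1 Gbps of bandwidth with an 80 ms round-trip time.
    bdp = bandwidth_delay_product_bytes(1e9, 0.080)
    print(f"{bdp / 1e6:.1f} MB must be in flight to fill the link")
```

If a sender keeps less than this amount in flight, the link sits partly idle and throughput falls below the available bandwidth.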

Understanding the distinctions and connections between latency, throughput, and bandwidth is essential in measuring, monitoring, and optimizing network speed. In the subsequent sections, we’ll delve deeper into these concepts and explore some of the ways network professionals can effectively manage them using Kentik’s network observability platform.

What is Bandwidth?

Bandwidth is a fundamental element of network performance, representing a network’s maximum data transfer capacity. Imagine it as a highway: the wider it is, the more vehicles (or data packets) it can accommodate simultaneously. High bandwidth indicates a broader channel, allowing a greater volume of data to be transmitted at once, leading to potentially faster network speeds.

Bandwidth is typically measured in bits per second (bps), with modern networks often operating at gigabits per second (Gbps) or even terabits per second (Tbps) scales. This measurement describes the volume of data that can theoretically pass through the network in a given time frame.
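As a rough illustration of what a bits-per-second figure means in practice, the following sketch computes the best-case time to move a file at a given bandwidth. It assumes the link is otherwise idle and ignores protocol overhead and latency, so real transfers take longer; the function name and sample sizes are hypothetical.

```python
def ideal_transfer_seconds(size_bytes: int, bandwidth_bps: float) -> float:
    """Lower bound on transfer time: payload bits divided by link capacity."""
    if bandwidth_bps <= 0:
        raise ValueError("bandwidth_bps must be positive")
    return size_bytes * 8 / bandwidth_bps


if __name__ == "__main__":
    one_gigabyte = 1_000_000_000  # decimal gigabyte, in bytes
    for label, bps in [("100 Mbps", 100e6), ("1 Gbps", 1e9), ("10 Gbps", 10e9)]:
        seconds = ideal_transfer_seconds(one_gigabyte, bps)
        print(f"{label}: {seconds:.1f} s")
```

Note the factor of 8: file sizes are usually quoted in bytes, while bandwidth is quoted in bits per second.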

However, merely having high bandwidth doesn’t ensure optimal network performance — it’s the potential capacity, not always the realized speed. Factors like network congestion, interference, or faulty equipment can reduce the effective bandwidth below the maximum. That’s where bandwidth monitoring becomes essential. NetOps professionals can preemptively address potential issues by tracking bandwidth usage, ensuring that actual data transfer rates don’t lag behind the available bandwidth.

What is Latency?

Latency is a critical aspect of network performance, representing the delay experienced by data packets as they travel from one point in a network to another. It is the time it takes for a message or packet of data to go from the source to the destination and, in some cases, back again (known as round-trip time). Latency significantly impacts the user’s perception of network speed; a network with lower latency often feels faster and provides a smoother, more responsive user experience. This is crucial for real-time applications like video conferencing, gaming, and live streaming.

What Causes Network Latency?

Several factors can influence latency, including:

- Distance: The physical distance between the source and destination significantly affects latency. Data packets travel through various mediums (e.g., cables), and the farther they have to go, the more time it takes.

- Network congestion: Congestion in a network can cause delays and increase latency, akin to how traffic congestion slows down vehicle movement.

- Hardware and infrastructure: The performance and efficiency of network hardware, such as routers and switches, as well as the quality of the network infrastructure, can impact latency.

- Transmission media: The medium of data transmission, whether it’s fiber optic cable, copper cable, or a wireless signal, also influences latency. For example, terrestrial fiber typically has far lower latency than a geostationary satellite link, because the satellite signal has to travel a much longer path.

- Number of hops: Each time a data packet passes from one network point to another, it’s considered a “hop.” More hops mean that data packets have more stops to make before reaching their destination, which can increase latency. The number of hops a packet makes can change based on the network’s configuration, topology, and path chosen for data transfer.
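The distance factor in the list above can be estimated with simple arithmetic. The sketch below computes one-way propagation delay, assuming signals in optical fiber travel at roughly two thirds of the speed of light in a vacuum. The velocity factor, the function name, and the sample distance are assumptions for illustration; real paths are longer than straight-line distance and add queuing and per-hop processing delay on top.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458  # speed of light in a vacuum


def propagation_delay_ms(distance_km: float, velocity_factor: float = 0.67) -> float:
    """One-way propagation delay in milliseconds over a given cable distance.

    velocity_factor is the fraction of the vacuum speed of light at which
    the signal travels in the medium (about 0.67 is a common figure for fiber).
    """
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    if not 0 < velocity_factor <= 1:
        raise ValueError("velocity_factor must be in (0, 1]")
    return distance_km / (SPEED_OF_LIGHT_KM_PER_S * velocity_factor) * 1000


if __name__ == "__main__":
    # Hypothetical 5,600 km fiber path (roughly transatlantic scale).
    one_way = propagation_delay_ms(5_600)
    print(f"one-way: {one_way:.1f} ms, round trip: {2 * one_way:.1f} ms")
```

This delay is a physical floor: no amount of extra bandwidth reduces it.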

How is Latency Measured, Tested and Monitored?

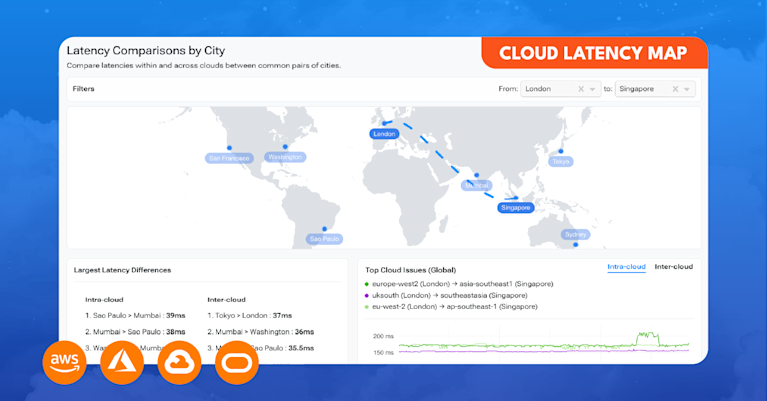

Latency is typically measured in milliseconds (ms) and can be evaluated using methods like ping and traceroute tests. These tests send a message to a destination and measure the time it takes to receive a response.
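For readers who want to experiment, here is a minimal sketch of a latency probe. It is not ping: ICMP echo usually requires elevated privileges, so this example instead times a TCP handshake, which takes about one round trip and serves as a rough proxy. The function names are hypothetical, and the measured value also includes DNS lookup time when a hostname is passed.

```python
import socket
import time


def tcp_connect_ms(host: str, port: int, timeout: float = 2.0) -> float:
    """Time, in milliseconds, to complete a TCP handshake with host:port."""
    start = time.perf_counter()
    # create_connection returns once the three-way handshake has completed.
    with socket.create_connection((host, port), timeout=timeout):
        pass
    return (time.perf_counter() - start) * 1000.0


def probe(host: str, port: int, count: int = 5) -> list:
    """Run several probes in a row and return the individual timings."""
    return [tcp_connect_ms(host, port) for _ in range(count)]
```

For example, `probe("example.com", 443)` returns five handshake timings in milliseconds. Taking several samples matters because a single measurement can be skewed by a momentary queue or a cold DNS cache.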

Advanced network performance platforms like Kentik Synthetics provide robust tools for continuous latency testing and monitoring. Through continuous ping and traceroute tests performed with software agents located globally, Kentik Synthetics measures essential metrics such as latency, jitter, and packet loss. These metrics are pivotal for evaluating network health and performance.
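To show how the three metrics relate to raw probe results, the sketch below summarizes a series of round-trip times. It uses one simple definition of jitter (the mean absolute difference between consecutive samples) and treats a missing reply as a lost packet. These definitions and the function name are assumptions for illustration and are not necessarily how Kentik Synthetics computes its values.

```python
from statistics import mean
from typing import Optional, Sequence


def summarize_probes(rtts_ms: Sequence[Optional[float]]) -> dict:
    """Summarize probe results; None marks a probe that got no reply."""
    received = [r for r in rtts_ms if r is not None]
    lost = len(rtts_ms) - len(received)
    loss_pct = 100.0 * lost / len(rtts_ms) if rtts_ms else 0.0
    # Jitter here: average change between consecutive successful samples.
    diffs = [abs(b - a) for a, b in zip(received, received[1:])]
    return {
        "avg_latency_ms": mean(received) if received else None,
        "jitter_ms": mean(diffs) if diffs else 0.0,
        "packet_loss_pct": loss_pct,
    }


if __name__ == "__main__":
    # Hypothetical samples: four probes sent, the third one was lost.
    print(summarize_probes([10.0, 12.0, None, 11.0]))
```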

Kentik Synthetics’ approach to latency evaluation involves comparing current latency values with baseline values, representing the average of the prior 15 time slices (with time slice value depending on the selected time range being observed). The system uses this information to assign latency a status level — Healthy, Warning, or Critical — based on how far it deviates from the baseline. This approach enables prompt identification and remediation of latency issues before they substantially impact network performance or user experience.
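The baseline comparison described above can be sketched as follows. Only the 15-slice averaging window and the three status names come from the description; the ratio thresholds and the function name are hypothetical placeholders, since the actual deviation rules are not given here.

```python
from statistics import mean
from typing import Sequence


def latency_status(
    history_ms: Sequence[float],
    current_ms: float,
    window: int = 15,
    warning_ratio: float = 1.5,   # assumed threshold, not a documented value
    critical_ratio: float = 2.0,  # assumed threshold, not a documented value
) -> str:
    """Classify current latency against the mean of the last `window` slices."""
    recent = list(history_ms)[-window:]
    if not recent:
        raise ValueError("history_ms must contain at least one sample")
    baseline = mean(recent)
    if current_ms >= baseline * critical_ratio:
        return "Critical"
    if current_ms >= baseline * warning_ratio:
        return "Warning"
    return "Healthy"


if __name__ == "__main__":
    history = [20.0] * 15  # hypothetical steady 20 ms baseline
    for sample in (22.0, 35.0, 45.0):
        print(sample, latency_status(history, sample))
```

Comparing against a rolling baseline rather than a fixed number lets the same rule work for both a 5 ms local path and a 150 ms intercontinental one.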

Kentik Synthetics also offers insights such as “Latency Hop Count” which analyzes changes in the hop count between the source agent and the test target and its relation to latency. This type of analysis can help identify the key factors contributing to poor network performance. Network professionals can rapidly identify and address issues that lead to increased latency by visualizing the relationship between test latency and the number of hops.
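A simple way to look for a relationship between hop count and latency in your own test data is to group samples by hop count and compare the averages. The sketch below is a generic illustration with a hypothetical function name and made-up samples; it is not the analysis that Kentik Synthetics performs.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Tuple


def mean_latency_by_hops(samples: Iterable[Tuple[int, float]]) -> Dict[int, float]:
    """Average latency (ms) for each observed hop count."""
    buckets = defaultdict(list)
    for hops, latency_ms in samples:
        buckets[hops].append(latency_ms)
    return {hops: mean(values) for hops, values in sorted(buckets.items())}


if __name__ == "__main__":
    # Hypothetical (hop_count, latency_ms) pairs from repeated traceroute tests.
    observed = [(8, 20.0), (8, 22.0), (11, 40.0)]
    print(mean_latency_by_hops(observed))
```

A jump in average latency that coincides with a jump in hop count suggests a routing change is worth investigating.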

What is Throughput?

Throughput measures how much data is actually transferred from one location to another in a given amount of time. In the context of networks, it’s the rate at which data packets are successfully delivered over a network connection. It is often measured in bits per second (bps), kilobits per second (kbps), megabits per second (Mbps), or gigabits per second (Gbps). High throughput means more data can be transferred in less time, contributing to better network performance and a smoother user experience.
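In practice, throughput is often derived from two readings of a byte counter taken some time apart. The following sketch shows that arithmetic. The function name is hypothetical, and it assumes the counter did not wrap or reset between the two readings.

```python
def throughput_bps(bytes_start: int, bytes_end: int, interval_seconds: float) -> float:
    """Average throughput in bits per second between two counter readings."""
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    if bytes_end < bytes_start:
        raise ValueError("counter went backwards (wrap or reset not handled)")
    return (bytes_end - bytes_start) * 8 / interval_seconds


if __name__ == "__main__":
    # Hypothetical readings: 125 MB delivered over a 10-second interval.
    rate = throughput_bps(0, 125_000_000, 10)
    print(f"{rate / 1e6:.0f} Mbps")
```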

Several factors can influence throughput, including:

- Bandwidth: Bandwidth is the maximum capacity of a network connection to transfer data. Higher bandwidth typically enables higher throughput. However, bandwidth is only a potential rate, and the actual throughput could be lower due to other factors.

- Latency: Higher network latency can reduce throughput because a sender that waits for acknowledgments spends more time idle between transmissions.

- Packet loss: When data packets fail to reach their destination, the sending device often needs to resend them, which can lower the overall throughput.

- Network congestion: When many data packets are trying to travel over the network simultaneously, it can lead to congestion and a decrease in throughput.

- Hardware and infrastructure: The performance and efficiency of network hardware, such as routers and switches, can impact throughput.
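The interaction between the bandwidth and latency factors above can be approximated with a simplified model: a sender that allows only a fixed window of unacknowledged data cannot exceed one window per round trip, and can never exceed the link’s bandwidth. The sketch below assumes no packet loss and a constant window; the function name and sample values are hypothetical.

```python
def window_limited_throughput_bps(
    bandwidth_bps: float, window_bytes: int, rtt_seconds: float
) -> float:
    """Upper bound on throughput for a fixed send window and round-trip time."""
    if rtt_seconds <= 0:
        raise ValueError("rtt_seconds must be positive")
    # At most one full window can be delivered per round trip.
    per_round_trip_limit = window_bytes * 8 / rtt_seconds
    return min(bandwidth_bps, per_round_trip_limit)


if __name__ == "__main__":
    # Hypothetical 1 Gbps link with a 65,535-byte window at several RTTs.
    for rtt in (0.001, 0.020, 0.080):
        limit = window_limited_throughput_bps(1e9, 65_535, rtt)
        print(f"RTT {rtt * 1000:.0f} ms -> at most {limit / 1e6:.1f} Mbps")
```

The same link and the same window yield very different ceilings as round-trip time grows, which is why a high-bandwidth path can still show low throughput over long distances.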

Why is Throughput Important?

Understanding throughput is crucial for managing network performance and capacity planning. If the throughput of a network connection frequently reaches its maximum capacity, it may cause network congestion and reduce the quality of the user experience. Monitoring throughput over time helps network professionals identify when it’s time to upgrade their network infrastructure.

In the realm of network capacity planning, throughput is closely related to the metric of utilization. Utilization represents the ratio of the current network traffic volume to the total available bandwidth on a network interface, typically expressed as a percentage. It’s an essential indicator of how much of the available capacity is being used. High utilization may lead to network bottlenecks and poor performance, highlighting the need for capacity upgrades or better traffic management.
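Utilization as defined above is a straightforward ratio. A minimal sketch, with a hypothetical function name and sample figures:

```python
def utilization_pct(traffic_bps: float, bandwidth_bps: float) -> float:
    """Current traffic as a percentage of the interface's available bandwidth."""
    if bandwidth_bps <= 0:
        raise ValueError("bandwidth_bps must be positive")
    return traffic_bps / bandwidth_bps * 100


if __name__ == "__main__":
    # Hypothetical interface: 750 Mbps of traffic on a 1 Gbps link.
    print(f"{utilization_pct(750e6, 1e9):.0f}% utilized")
```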

Tools like Kentik’s network capacity planning tool offer a comprehensive overview of key metrics such as utilization and runout (an estimate of when the traffic volume on an interface will exceed its available bandwidth). These insights can help organizations to foresee future capacity needs, mitigate congestion, and maintain high-performance networks.
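To illustrate the idea of runout, the sketch below fits a straight line to daily peak traffic and estimates how many days remain until that trend reaches the interface’s bandwidth. A linear trend is an assumption made for this example, as are the function name and sample data; it is not a description of how Kentik calculates runout.

```python
from typing import Optional, Sequence


def days_until_runout(daily_peaks: Sequence[float], capacity: float) -> Optional[float]:
    """Days after the last sample until a least-squares trend hits capacity.

    daily_peaks and capacity must use the same unit (for example Mbps).
    Returns None when traffic is flat or falling, so no runout is projected.
    """
    n = len(daily_peaks)
    if n < 2:
        raise ValueError("need at least two samples to fit a trend")
    x_mean = (n - 1) / 2
    y_mean = sum(daily_peaks) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(daily_peaks))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = y_mean - slope * x_mean
    crossing_day = (capacity - intercept) / slope
    # Measure from the most recent sample; 0 means the trend is already there.
    return max(0.0, crossing_day - (n - 1))


if __name__ == "__main__":
    peaks_mbps = [500, 510, 520, 530, 540]  # hypothetical daily peaks
    print(days_until_runout(peaks_mbps, capacity=600))
```

Real traffic is seasonal and bursty, so a production estimate would need more history and a more careful model than a single straight line.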

Latency vs Throughput: What’s the Difference?

While both latency and throughput are key metrics for measuring network performance, they represent different aspects of how data moves through a network.

Latency is about time: it measures the delay experienced by a data packet as it travels from one point in a network to another. Lower latency means data packets reach their destination more quickly, which is crucial for real-time applications like video calls and online gaming. In these contexts, even a slight increase in latency can lead to a noticeable decrease in performance and user experience.

On the other hand, throughput is about volume: it measures the amount of data that can be transferred from one location to another in a given amount of time. Higher throughput means more data can be transferred in less time, contributing to faster file downloads, smoother video streaming, and better overall network performance.

These two concepts are related: high latency can reduce throughput by leaving senders waiting on acknowledgments, and spare throughput capacity can reduce latency by keeping queues short. Even so, they don’t always correlate. A network can have low latency but also low throughput if its infrastructure cannot handle a large volume of data, just as it can have high throughput but also high latency if data packets take a long time to reach their destination due to factors such as network congestion, number of hops, or physical distance.

Understanding the difference between latency and throughput — and how they can impact each other — is crucial for network performance optimization. NetOps professionals must monitor both metrics to ensure they deliver the best possible service. For example, a network that primarily serves real-time applications may prioritize minimizing latency, while a network that handles large file transfers may prioritize maximizing throughput.

Throughput vs Bandwidth: Actual Packet Delivery vs Theoretical Packet Delivery

In the context of network performance, throughput and bandwidth are terms that are often misunderstood and used interchangeably. However, they represent different aspects of a network’s data handling capacity.

As mentioned earlier, bandwidth is the maximum theoretical data transfer capacity of a network. It’s like the width of a highway; a wider highway can potentially accommodate more vehicles at the same time. However, just because a highway has six lanes doesn’t mean it’s always filled to capacity or that traffic is flowing smoothly.

Throughput, on the other hand, is the actual rate at which data is successfully transmitted over the network, reflecting the volume of data that reaches its intended destination within a given timeframe. Returning to our highway analogy, throughput would be akin to the number of vehicles that reach their destination per hour. Various factors, like road conditions, traffic accidents, or construction work (analogous to network conditions, packet loss, or latency), can impact this number.

Throughput vs Bandwidth: Optimal Network Performance

In an ideal scenario, throughput would be equal to bandwidth, but real-world conditions often prevent this from being the case. Maximizing throughput up to the limit of available bandwidth while minimizing errors and latency is a primary goal in network optimization.

A network with ample bandwidth can still have low throughput if it suffers from high latency or packet loss. Therefore, for optimal network performance, both bandwidth (maximum transfer capacity) and throughput (actual network traffic volume) must be effectively managed and monitored.

Using Kentik for Network Performance Optimization

Understanding the dynamics of latency, throughput, and bandwidth is crucial for managing and optimizing network performance. As we’ve seen, these metrics are interconnected, each affecting overall network speed and user experience. Whether you are a network professional troubleshooting performance issues, planning for future network capacity, or striving to deliver the best user experience, having a comprehensive view and understanding of these and other performance metrics is vital.

Kentik’s network observability platform empowers you with real-time, granular visibility into your network performance. It helps you understand your network’s health by measuring critical metrics such as latency, traffic volume, and utilization.

With Kentik Synthetics, you can continuously monitor network latency through regular ping and traceroute tests, enabling you to detect and address performance anomalies before they impact your users. Additionally, the platform provides valuable insights into the factors affecting latency, such as hop counts, helping you identify and resolve network inefficiencies.

Kentik’s network capacity planning tools help you understand the throughput and bandwidth of various network components. They provide a detailed view of network utilization, allowing you to prevent congestion, plan for future needs, and ensure that your network infrastructure can handle the required data volume effectively.

In a world where reliable and fast network performance is paramount, leveraging tools like Kentik can make the difference between smooth operations and performance bottlenecks. Experience Kentik’s capabilities firsthand by starting a free trial or requesting a personalized demo today.