Big Data Network Performance Monitoring

What is Big Data Network Performance Monitoring?

Network Performance Monitoring (NPM) refers to the process of measuring, diagnosing and optimizing the service quality of a network as experienced by users. Big Data NPM refers to the collection, retention and analysis of network performance monitoring data in a Big Data-scale repository, rather than in traditional physical or virtual appliances.

NPM solutions have traditionally used an appliance deployment model. An appliance-based PCAP probe with one or more interfaces connects to router or switch SPAN ports, or to an intervening packet broker device (such as those offered by Gigamon or Ixia). The appliance records all packets crossing its interfaces, first into memory and then into longer-term storage. In virtualized datacenters, virtual probes may be used instead, but they too depend on access to network links in one form or another.
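To make the capture model concrete, the following Python sketch mimics the "memory first, then longer-term storage" behaviour of a capture appliance: a bounded in-memory buffer that drops the oldest packets when full and flushes what it holds to a file in the classic libpcap format. It is an illustration under simplifying assumptions, not how any particular appliance works: frames are passed in as raw bytes rather than read from a SPAN port, and the `CaptureBuffer` class and its parameters are hypothetical.

```python
import io
import struct
import time
from collections import deque
from typing import BinaryIO, Deque, Optional, Tuple

PCAP_MAGIC = 0xA1B2C3D4   # classic libpcap magic number (microsecond timestamps)
LINKTYPE_ETHERNET = 1     # link-layer type for Ethernet frames


class CaptureBuffer:
    """Hypothetical probe buffer: keeps recent packets in memory, then flushes them to pcap."""

    def __init__(self, max_packets: int = 10_000, snaplen: int = 65535) -> None:
        self.snaplen = snaplen
        # A bounded deque: once full, appending silently drops the oldest packet.
        self.packets: Deque[Tuple[float, bytes, int]] = deque(maxlen=max_packets)

    def record(self, frame: bytes, ts: Optional[float] = None) -> None:
        """Store one captured frame, truncated to snaplen, with its original length."""
        ts = time.time() if ts is None else ts
        self.packets.append((ts, frame[: self.snaplen], len(frame)))

    def flush(self, out: BinaryIO) -> int:
        """Write the buffered packets to `out` as a pcap file and empty the buffer."""
        # Global header: magic, version 2.4, timezone offset, sigfigs, snaplen, link type.
        out.write(struct.pack("<IHHiIII", PCAP_MAGIC, 2, 4, 0, 0,
                              self.snaplen, LINKTYPE_ETHERNET))
        written = 0
        while self.packets:
            ts, data, orig_len = self.packets.popleft()
            sec = int(ts)
            usec = int((ts - sec) * 1_000_000)
            # Per-packet header: timestamp, captured length, original length.
            out.write(struct.pack("<IIII", sec, usec, len(data), orig_len))
            out.write(data)
            written += 1
        return written


if __name__ == "__main__":
    buf = CaptureBuffer(max_packets=2)
    for i in range(3):                        # the third packet evicts the first
        buf.record(bytes(60), ts=1000.5 + i)
    out = io.BytesIO()
    print(buf.flush(out), len(out.getvalue()))   # prints: 2 176
```

Flushing to a real file, for example with `with open("capture.pcap", "wb") as f: buf.flush(f)`, produces a file that standard pcap readers such as Wireshark or tcpdump can open.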

Physical and virtual appliances are costly, both in hardware and, for commercial solutions, in software licensing. As a result, in most cases it is only financially feasible to deploy PCAP probes at a few selected points in the network. In addition, the appliance deployment model was developed under pre-cloud assumptions: centralized datacenters hosting relatively monolithic application instances.

As cloud and distributed application models have proliferated, the appliance model for packet capture has become less feasible, because many cloud hosting environments offer no way to deploy even a virtual appliance.

A cloud-friendly and highly scalable model for Network Performance Monitoring combines two elements: lightweight, host-based monitoring agents that export PCAP-based statistics gathered on servers and on open source proxy servers such as HAProxy and NGINX, and a Big Data repository that receives the exported statistics. The repository scales horizontally to store unsummarized data and provides powerful analytics for alerting, diagnostics and other use cases.
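The kind of statistic a host agent derives from packets, rather than storing the packets themselves, can be sketched in a few lines of Python. The example below assumes the agent has already parsed packets into a hypothetical `Packet` record; it groups them into per-connection flow records, computes a TCP handshake round-trip time (the gap between a client's SYN and the server's SYN-ACK, as observed on the client host), and serializes the records as JSON lines for export. The names `Packet`, `FlowRecord`, `summarize` and `export` are illustrative assumptions, not the interface of nProbe or any other agent.

```python
import json
from dataclasses import asdict, dataclass
from typing import Dict, List, Optional, Tuple

SYN, ACK = 0x02, 0x10      # TCP flag bits

FlowKey = Tuple[str, int, str, int]   # (client IP, client port, server IP, server port)


@dataclass(frozen=True)
class Packet:
    """Hypothetical pre-parsed TCP packet as seen by a host agent."""
    ts: float       # capture time in seconds
    src: str
    sport: int
    dst: str
    dport: int
    flags: int      # TCP flag bits
    length: int     # bytes on the wire


@dataclass
class FlowRecord:
    key: FlowKey
    packets: int = 0
    byte_count: int = 0
    syn_ts: Optional[float] = None
    rtt_ms: Optional[float] = None   # SYN -> SYN-ACK delay seen from the client


def summarize(packets: List[Packet]) -> List[FlowRecord]:
    """Group packets into per-connection records and measure handshake RTT."""
    flows: Dict[FlowKey, FlowRecord] = {}
    for p in packets:
        fwd: FlowKey = (p.src, p.sport, p.dst, p.dport)
        rev: FlowKey = (p.dst, p.dport, p.src, p.sport)
        # A bare SYN (no ACK) opens a new flow keyed client -> server.
        if p.flags & SYN and not p.flags & ACK and fwd not in flows:
            flows[fwd] = FlowRecord(key=fwd, syn_ts=p.ts)
        key = fwd if fwd in flows else rev if rev in flows else None
        if key is None:
            continue  # handshake not observed; this sketch ignores the packet
        rec = flows[key]
        rec.packets += 1
        rec.byte_count += p.length
        # The first SYN-ACK travelling server -> client completes the RTT sample.
        is_syn_ack = (p.flags & (SYN | ACK)) == (SYN | ACK)
        if is_syn_ack and key == rev and rec.rtt_ms is None and rec.syn_ts is not None:
            rec.rtt_ms = (p.ts - rec.syn_ts) * 1000.0
    return list(flows.values())


def export(records: List[FlowRecord]) -> str:
    """Serialize records as JSON lines, one per flow, for shipping to a collector."""
    return "\n".join(json.dumps(asdict(r)) for r in records)
```

Only one small record per connection leaves the host, which is what makes the agent lightweight compared with full packet capture. For simplicity, this sketch skips connections whose handshake it did not observe.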

While it is possible to build a Big Data repository for network performance monitoring data on public cloud or open source platforms, doing so is costly in development effort, operations and time to value. SaaS Big Data options for network visibility speed time to value by bundling broader capabilities such as volumetric (multi-billion-row) traffic flow analysis, anomaly detection, DDoS detection, and peering analytics.
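One reason to keep unsummarized flow data is that it can be grouped by any field after the fact. The Python sketch below runs two simple queries over raw flow rows: the top destinations by total bytes, and a toy volumetric alert that flags a destination whose per-minute byte count exceeds a multiple of its own recent average. The row schema `(minute, src_ip, dst_ip, bytes)`, the `factor` and `min_history` parameters, and the alert rule itself are hypothetical; this is a naive threshold, not the anomaly or DDoS detection method of any product.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical raw flow row: (minute, src_ip, dst_ip, bytes). Nothing is pre-aggregated.
FlowRow = Tuple[int, str, str, int]


def top_talkers(rows: Iterable[FlowRow], n: int = 3) -> List[Tuple[str, int]]:
    """Return the n destinations with the most total bytes."""
    totals: Dict[str, int] = defaultdict(int)
    for _minute, _src, dst, nbytes in rows:
        totals[dst] += nbytes
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)[:n]


def volume_alerts(rows: Iterable[FlowRow], factor: float = 5.0,
                  min_history: int = 3) -> List[Tuple[int, str, int]]:
    """Toy rule: flag a destination whose bytes in a minute exceed `factor`
    times its average over the earlier minutes in which it received traffic."""
    per_minute: Dict[Tuple[str, int], int] = defaultdict(int)
    for minute, _src, dst, nbytes in rows:
        per_minute[(dst, minute)] += nbytes

    history: Dict[str, List[int]] = defaultdict(list)
    alerts: List[Tuple[int, str, int]] = []
    # Walk the buckets in time order so each one is compared only with the past.
    for dst, minute in sorted(per_minute, key=lambda k: (k[1], k[0])):
        volume = per_minute[(dst, minute)]
        past = history[dst]
        if len(past) >= min_history and volume > factor * sum(past) / len(past):
            alerts.append((minute, dst, volume))
        past.append(volume)
    return alerts
```

Because the rows are not pre-aggregated, switching the grouping key from destination to source (or to any other stored field) only requires reading a different tuple element. For simplicity, minutes in which a destination received no traffic are not counted as zeros in its history.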

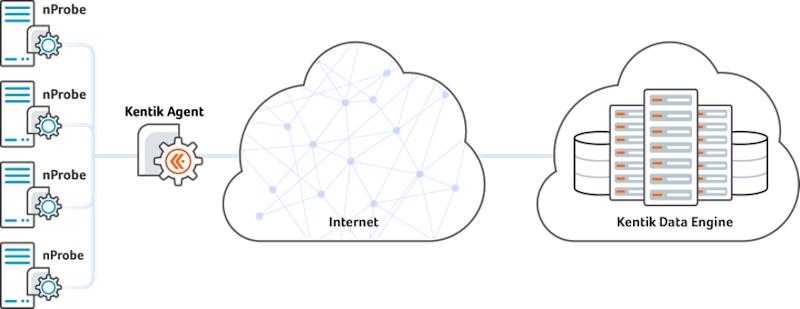

Kentik offers the industry’s only Big Data-based SaaS NPM solution that integrates nProbe host agent performance metrics with billions of NetFlow, sFlow, IPFIX, and BGP records matched with geolocation data. In the diagram above, performance monitoring software agents running on host computers send network performance monitoring data as flow records via an optional encrypting proxy agent. The proxy agent sends the flow data securely over the Internet to a Big Data Engine that ingests and stores it for actionable analytics.