The Subtle Details of Livestreaming Prime Video with Embedded CDNs

Summary

Live sports have moved to the internet and are now streamed instead of broadcast. Traditional streaming protocols have a built-in delay that undermines the experience of a live game. Amazon Prime Video has found a solution by combining a new protocol with a highly distributed CDN.

It has been interesting to watch professional sports move their transmission onto the internet instead of requiring viewers to sit at home in front of a TV. Many still do that (the details of any sportsball game are best enjoyed on a big screen), but the games are no longer broadcast over the air or via cable. They arrive over the viewer’s internet connection.

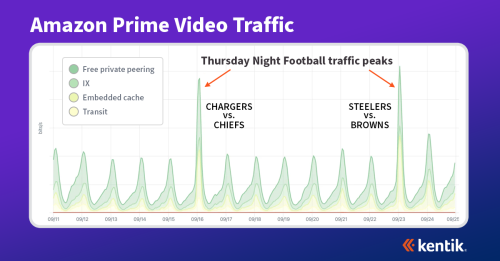

Amazon Prime Video is one of the services delivering a lot of sports. You might recall my colleague Doug Madory’s 2022 blog post about Thursday Night Football traffic, as seen in the Kentik data.

The challenges in transitioning to internet broadcasting

Before we dig into the Prime Video solution, what is the main challenge with moving live events from broadcast to the internet?

Flow TV (traditional television) broadcasts are transmitted using dedicated networks, such as over-the-air, cable, or satellite. In this case, the signal is sent once to all viewers, and multiple users watching the same channel do not create additional load on the network. This is because the broadcast signal is shared, and the network’s capacity is designed to handle the full audience without degradation of the signal.

Internet broadcasts rely on data packets transmitted over the internet. Each viewer receives a separate stream of data, which means that as more users watch a live event, the load on the network increases. This can lead to congestion, slower connection speeds, or buffering, particularly if the network does not have enough capacity to handle the increased demand.
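To make the difference concrete, here is a minimal sketch of the arithmetic. The viewer count and the 8 Mbps bitrate are purely illustrative assumptions, not figures from Prime Video, and the function names are made up for this example.

```python
def broadcast_load_gbps(bitrate_mbps: float) -> float:
    """Broadcast: the signal is sent once, regardless of audience size."""
    return bitrate_mbps / 1000.0


def unicast_load_gbps(viewers: int, bitrate_mbps: float) -> float:
    """Internet streaming: every viewer receives a separate stream."""
    return viewers * bitrate_mbps / 1000.0


if __name__ == "__main__":
    bitrate = 8.0  # Mbps, hypothetical bitrate for one HD stream
    for viewers in (1_000, 100_000, 1_000_000):
        print(
            f"{viewers:>9} viewers: "
            f"broadcast {broadcast_load_gbps(bitrate):.3f} Gbps, "
            f"unicast {unicast_load_gbps(viewers, bitrate):.1f} Gbps"
        )
```

The broadcast figure stays flat while the unicast figure grows linearly with the audience, which is exactly why a popular game can congest a network.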


What is the impact of network congestion on live streaming?

So the main difference is that more viewers lead to a higher load on the network that carries the event. One consequence of the congestion that might result from the higher load is delay, which is nearly unacceptable for sportsball events. I remember watching the football World Cup (soccer, for you Americans) on the internet in the summer of 2018, with the windows open. Whenever we heard the neighbors cheer, we had fair warning to pay attention for the next couple of minutes. (My country’s team had a good run in that World Cup.)

However, this delay was not due to congestion. The live-streaming technology was simply not mature enough at the time.

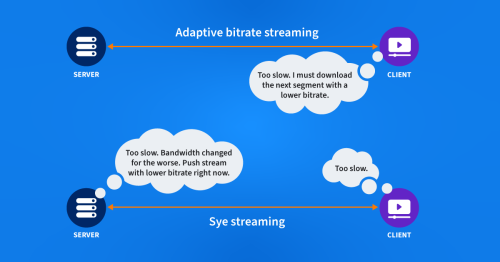

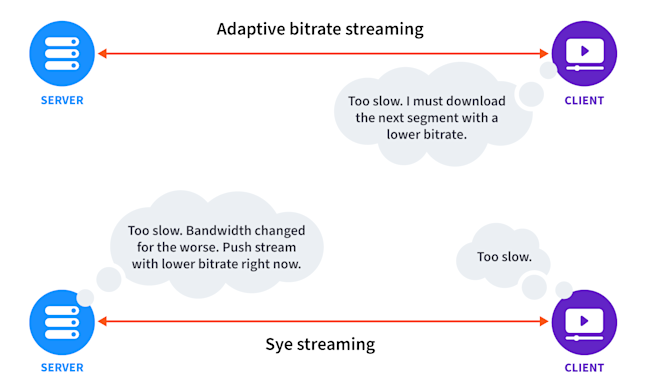

Understanding adaptive bitrate streaming and its limitations

The reason for the delay, or rather the unsynchronized delivery of the live stream to different viewers, is that traditional adaptive bitrate streaming (ABS) protocols chop the video into small segments, each encoded at several different bitrates. The player compares how long each segment takes to download with how long it takes to play. If the download is slower than playback, the client requests the next segment at a lower bitrate. If it is faster, the client steps up to a higher bitrate (until the maximum bitrate for the player is reached).
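The client-side decision can be sketched roughly as follows. This is a simplified, throughput-based illustration rather than the logic of any particular player: the bitrate ladder, the safety factor, and the function names are all assumptions made for the example.

```python
# Hypothetical bitrate ladder in kbps; real services publish their own.
BITRATE_LADDER_KBPS = [800, 1800, 3500, 6000]


def measured_throughput_kbps(segment_bytes: int, download_seconds: float) -> float:
    """Estimate throughput from the last segment download."""
    return segment_bytes * 8 / 1000 / download_seconds


def next_bitrate_kbps(throughput_kbps: float,
                      ladder=BITRATE_LADDER_KBPS,
                      safety: float = 0.8) -> int:
    """Pick the highest rendition that fits within a safety margin.

    If nothing fits, fall back to the lowest rendition instead of stalling.
    """
    usable = throughput_kbps * safety
    candidates = [b for b in sorted(ladder) if b <= usable]
    return candidates[-1] if candidates else min(ladder)


if __name__ == "__main__":
    # A 1 MB segment that took 2 seconds to download.
    throughput = measured_throughput_kbps(1_000_000, 2.0)
    print(throughput, next_bitrate_kbps(throughput))
```

Note that the decision can only be made at segment boundaries, which is one reason the segment length matters so much for latency.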

The primary advantage of ABS is its adaptability. It allows for a smooth viewing experience, reducing buffering and improving playback by adjusting the stream’s quality to match the viewer’s network conditions.

However, ABS can have issues with latency. Because the player must buffer a certain amount of video before it can switch between quality levels, live streams end up delayed, which is a problem for real-time content like sports events or online gaming.
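As a rough back-of-the-envelope sketch, the delay added by a segmented protocol can be approximated as the segment duration multiplied by the number of segments the player keeps buffered, plus whatever encoding and delivery add. The values below (6-second segments, three buffered segments) are illustrative assumptions, and the function name is invented for this example.

```python
def segmented_latency_seconds(segment_seconds: float,
                              buffered_segments: int,
                              encode_and_delivery_seconds: float = 0.0) -> float:
    """Approximate live delay for a segment-based streaming protocol."""
    return segment_seconds * buffered_segments + encode_and_delivery_seconds


if __name__ == "__main__":
    # Hypothetical setup: 6-second segments, player holds three of them.
    print(segmented_latency_seconds(6.0, 3))
    # Shorter segments reduce the delay but increase request overhead.
    print(segmented_latency_seconds(2.0, 3))
```

Even with no congestion at all, a configuration like this puts the viewer many seconds behind the live action.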

Sye: A solution for livestream latency and synchronization issues

Sye is a streaming technology developed by Net Insight. It’s designed to address two of the key issues with traditional live streaming: latency and synchronization.

Sye offers frame-accurate synchronization, meaning all viewers see the same frame simultaneously, no matter their device or network. This is particularly important for live sports or esports, where real-time performance is critical.

Another achievement is that Sye does not segment the streams. Instead, it uses a technique that switches the player between streams of different bitrates on the fly to adapt to varying network conditions. The switch is decided and carried out on the server side, not by the client.

Implementation of Sye by Amazon

Amazon acquired the technology from Net Insight in 2020 and has implemented Sye instances on all edge devices in CloudFront, its own CDN. This gives Prime Video end-to-end control over the live stream, so it can deliver a near-real-time, synchronized live-event experience to end users, along with the other live-streaming enhancements the platform offers.

How do you address network congestion and enhance user experience?

With this technology in place, congestion is the biggest remaining threat to a fully synchronized, high-quality end-user experience. The risk is also high: a really good game attracts even more viewers, and they do not necessarily come from other services, such as VOD, running on the same infrastructure, so their traffic adds to the total load.

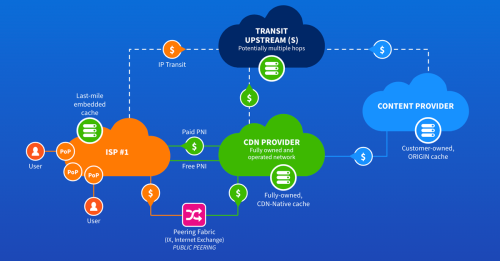

One way to mitigate this is to deploy CDN servers as close as possible to the end users. In this respect, AWS is following in the footsteps of other CDNs by offering ISPs the ability to embed CloudFront servers into their networks, as we have described in two blog posts:

- Speeding Up the Web: A Comprehensive Guide to Content Delivery Networks and Embedded Caching

- Unlocking the Power of Embedded CDNs: A Comprehensive Guide to Deployment Scenarios and Optimal Use Cases

Understanding CDN mapping and ensuring system resilience

So what specifically do you need to keep in mind when working out how best to deploy embedded edge appliances from AWS to support live video from Prime Video?

- The mapping of end users to embedded server clusters

- Failure scenarios, and how to ensure the event runs smoothly if something in the network fails

In the recent blog posts, we detailed the most common ways CDNs map end users to the correct cache location. AWS has a similar system. When play is pressed in the player, it first sets up a session with the appropriate cache, taking into account information such as location and the load on the caches.

As we discussed in the previous posts, location is defined either by the location of the DNS resolver used by the end user or by the end user’s IP address. In the latter case, the ISP uses BGP to signal which caches should be prioritized for end users in which IP prefixes. Once the session between the player and the cache is up, the cache pushes a stream to the player based on the bandwidth estimated between the cache and the player, so the experience is always as good as possible. Quality data is also continuously sent to the Sye control system and compared. If the quality from another cache to end users in that same area is better, the stream is switched.
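The prefix-based variant of this mapping can be illustrated with a longest-prefix match. This is only a sketch of the general idea, not how AWS implements it: the prefixes come from documentation address ranges, and the cache names, the fallback name, and the function are hypothetical.

```python
import ipaddress

# Hypothetical table: which embedded cache an ISP prefers for each prefix.
PREFIX_TO_CACHE = {
    "203.0.113.0/24": "cache-east",
    "198.51.100.0/24": "cache-west",
    "198.51.100.128/25": "cache-south",
}


def cache_for(ip: str, table=PREFIX_TO_CACHE, default: str = "regional-pop") -> str:
    """Return the cache for the most specific prefix containing the address."""
    addr = ipaddress.ip_address(ip)
    best = None  # (network, cache) of the longest match seen so far
    for prefix, cache in table.items():
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, cache)
    # Addresses outside every announced prefix fall back to a non-embedded site.
    return best[1] if best else default


if __name__ == "__main__":
    for ip in ("198.51.100.200", "198.51.100.5", "192.0.2.1"):
        print(ip, "->", cache_for(ip))
```

The more specific /25 wins over the covering /24, which mirrors how an ISP can steer a subset of its users to a closer cache.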

Pushing the caches to many smaller locations has an often overlooked side effect on the system’s resilience. Imagine a large ISP that connects to AWS via private network interconnects (PNIs), with one connection in each of the two locations where both networks are present. If there is a fault in one of the locations, the second PNI should be able to handle all the failover traffic. If it cannot, all users, not only those served by the faulty PNI, will experience the consequences of the fault. This means we must build 100% extra capacity to handle a single fault.

If, instead, the traffic is served from 10 smaller embedded locations and one of them has an outage, only 1/10th of the total traffic is affected, and only that 1/10th needs to be redistributed across the nine functioning locations. The total spare capacity needed for a resilient installation is therefore much smaller.
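The comparison can be worked through with a small calculation. The sketch assumes traffic is split evenly across sites and that a failed site’s traffic is spread evenly over the survivors, which real deployments will only approximate. The function name is made up for this example.

```python
def spare_capacity_fraction(sites: int) -> float:
    """Extra capacity each site needs, relative to its normal load,
    to absorb the failure of any single site."""
    if sites < 2:
        raise ValueError("need at least two sites to survive a single failure")
    # Each site normally carries 1/sites of the traffic. When one fails,
    # its 1/sites share is split across the remaining (sites - 1) sites,
    # so each survivor grows by (1/sites) / (sites - 1), which is
    # 1 / (sites - 1) of its own normal load.
    return 1 / (sites - 1)


if __name__ == "__main__":
    for n in (2, 4, 10):
        print(f"{n} sites: {spare_capacity_fraction(n):.0%} extra capacity per site")
```

Under these assumptions, two sites each need 100% headroom, while ten sites each need only about 11%.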

Getting started with Amazon’s peering and ISP relationships team

So how do you get started?

First, understand how the traffic flows within your network. When you want to build several sites, you need to know how much traffic the customer base for each site will demand. As we discussed earlier, the way you divide your customers into groups must be consistent with how Amazon maps customers to cache locations, so identify the groups either by their IP addresses or by their DNS resolvers. In The Peering Coordinators’ Toolbox - Part 2, we show an example of how you can use this grouping to analyze the traffic with a NetFlow-based tool like Kentik.
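If you want to try the grouping on exported flow data first, a minimal sketch could look like the one below. The record format (subscriber IP, Mbps) is a simplifying assumption and not the export format of Kentik or any other tool, and the site names and prefixes are hypothetical and assumed not to overlap.

```python
import ipaddress
from collections import defaultdict

# Hypothetical plan: which subscriber prefixes each candidate site would serve.
SITE_PREFIXES = {
    "site-a": ["203.0.113.0/25"],
    "site-b": ["203.0.113.128/25", "198.51.100.0/24"],
}


def traffic_per_site(flow_records, site_prefixes=SITE_PREFIXES):
    """Sum traffic (Mbps) per planned site from (subscriber_ip, mbps) records."""
    networks = [
        (ipaddress.ip_network(prefix), site)
        for site, prefixes in site_prefixes.items()
        for prefix in prefixes
    ]
    totals = defaultdict(float)
    for subscriber_ip, mbps in flow_records:
        addr = ipaddress.ip_address(subscriber_ip)
        # Prefixes are assumed not to overlap, so the first match is enough.
        site = next((s for net, s in networks if addr in net), "unmapped")
        totals[site] += mbps
    return dict(totals)


if __name__ == "__main__":
    sample = [("203.0.113.10", 5.0), ("203.0.113.200", 7.5), ("192.0.2.1", 1.0)]
    print(traffic_per_site(sample))
```

Traffic that lands in the "unmapped" bucket is a useful hint that the planned grouping does not yet cover the whole customer base.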

Use this information to reach out to the team at Amazon that handles peering and ISP relationships, and they will work with you to create the best solution.

If you want to learn more about Sye and the live streaming of the NFL’s Thursday Night Football, check out these videos: Sye - Live Streaming and AWS re:Invent 2022 - How Prime Video delivers NFL’s Thursday Night Football globally on AWS.