Big Data J-Flow Analysis

Overview of Big Data J-Flow Analysis

Choosing the right big data engine for J-Flow data analysis can be a challenge given the many alternatives on the market. To assess these alternatives, consider the following key requirements, each of which maps closely to the needs of J-Flow analysis:

- Ingest scalability – The system must be able to capture a very high volume of inbound data, on the order of multiple millions of data points per second.

- Retention scalability – To support maximum flexibility for analysis, it is necessary to be able to retain all raw incoming data for months at a time.

- Reliability – High availability (HA) and high reliability, with internal balancing and rate-limiting, are essential design points for ensuring system integrity.

- Real-time – Support for continuous review and sub-minute alerting against inbound data is needed if the data store is to be used for supporting live operations and effective monitoring/troubleshooting.

- Easy-to-use interface – A fast, intuitive interface for accessing data and analyses must respond at least as quickly as typical human reaction time.

- Responsive, Flexible Queries – To unlock the value of big data, rapid response to data exploration queries across huge volumes of data is essential for supporting efficient and effective analysis work processes. Query flexibility demands multi-dimensional, ad-hoc traffic analysis with instant filtering that delivers answers in seconds.

- Simple deployment – Ease of deployment and configuration, without extensive customization services, is highly desirable, so that time to value is kept to an absolute minimum.

- Multi-tenant – The system must support multi-tenancy, in which data and activity views are cleanly separated and governed by access controls. Additionally, system response must be designed for fairness, so that no individual operator, user, or customer can degrade system performance for any other.

- Open access – Systems must be able to share data securely with other existing internal and third-party systems via simple, standards-based APIs and client interfaces.
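To get a feel for how the ingest and retention requirements combine, a back-of-the-envelope estimate can help. The sketch below is illustrative only: the ingest rate, the stored bytes per record, and the retention window are assumed values chosen for the example, not figures from any particular network or product.

```python
SECONDS_PER_DAY = 86_400


def raw_retention_bytes(records_per_second: int,
                        bytes_per_record: int,
                        retention_days: int) -> int:
    """Bytes needed to keep every raw record for the whole retention window."""
    return records_per_second * bytes_per_record * SECONDS_PER_DAY * retention_days


# Assumed inputs, for illustration only:
#   2 million records per second, 50 stored bytes per record, 90 days retained.
estimate = raw_retention_bytes(
    records_per_second=2_000_000,
    bytes_per_record=50,
    retention_days=90,
)
print(f"{estimate / 1e12:.1f} TB")  # prints "777.6 TB"
```

Even with a modest per-record size, retaining all raw data for months lands in the hundreds of terabytes under these assumptions, which is why ingest and retention scalability are treated as separate requirements.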

Big Data Technology Options

There are multiple big data technologies on which network managers can build J-Flow analysis functionality, including Hadoop, ELK, and Google BigQuery.

Hadoop: Hadoop is an Apache open source software framework written in Java that allows distributed processing of large datasets across computer clusters supporting various programming models and tools. It’s composed of two parts: data storage and data processing. The distributed storage part is handled by the Hadoop Distributed File System (HDFS). The distributed processing part is achieved via MapReduce. Hadoop is licensed under the Apache License 2.0.

When evaluated against the key J-Flow analysis requirements, Hadoop falls short in multiple ways. The first is that it lacks data modeling tools to support the analysis required when processing J-Flow records. Some vendors have implemented cubes to fill this gap, so that a dataset can be represented in a multi-dimensional cube format and then sliced and diced to reveal more granular detail. Cubes first rose to prominence in the 1990s, so they can be seen as one of the first pervasive forms of analytical data modeling.

Cubes fall short for very large volumes of data and when the data model needs to change in real time, which is typical in J-Flow analysis applications; both limitations reduce the overall responsiveness of the solution. In general, the Hadoop stack was designed for batch processing, and is inappropriate for network operations use cases that require real-time response.

ELK: The ELK stack is a set of open source analytics tools. ELK is an acronym for a collection of three open-source products: Elasticsearch, Logstash, and Kibana. Elasticsearch is a NoSQL database that is based on the Apache Lucene search engine. Logstash is a log pipeline tool that accepts inputs from various sources, executes various transformations, and exports the data to various targets. Kibana is a visualization layer that works on top of Elasticsearch.

When evaluated against the key J-Flow analysis requirements, the ELK stack falls short in several areas, such as:

- No binary data can be stored. As a result, all binary data (such as NetFlow data) must be reformatted as JSON, which is highly inefficient and leads to massive storage bloat at scale.

- Incomplete multi-tenancy. The ELK stack can use tags to implement data access segmentation, but there is no way to enforce fairness, so one user can (intentionally or unintentionally) run a massive query, consume more than their fair share of back end resources, and make everyone else wait.
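The cost of reformatting binary flow data as JSON can be illustrated by encoding the same record both ways. This is a minimal sketch: the field names and the fixed-width layout are hypothetical simplifications (real J-Flow/NetFlow records carry more fields), and the comparison covers only the serialized payload, not the on-disk footprint of any particular data store.

```python
import ipaddress
import json
import struct

# Hypothetical fixed-width layout (network byte order, no padding):
# src addr, dst addr, src port, dst port, protocol, packets, bytes, start time
FLOW_LAYOUT = struct.Struct("!IIHHBIII")


def pack_flow(flow: dict) -> bytes:
    """Encode one simplified IPv4 flow record as fixed-width binary."""
    return FLOW_LAYOUT.pack(
        int(ipaddress.IPv4Address(flow["src_addr"])),
        int(ipaddress.IPv4Address(flow["dst_addr"])),
        flow["src_port"],
        flow["dst_port"],
        flow["protocol"],
        flow["packets"],
        flow["bytes"],
        flow["start_time"],
    )


def json_flow(flow: dict) -> bytes:
    """Encode the same record as a UTF-8 JSON document."""
    return json.dumps(flow).encode("utf-8")


# Illustrative record using documentation-reserved IP addresses.
flow = {
    "src_addr": "192.0.2.10",
    "dst_addr": "198.51.100.25",
    "src_port": 51514,
    "dst_port": 443,
    "protocol": 6,
    "packets": 12,
    "bytes": 8400,
    "start_time": 1700000000,
}

binary_size = len(pack_flow(flow))
json_size = len(json_flow(flow))
print(f"binary: {binary_size} bytes, JSON: {json_size} bytes, "
      f"ratio: {json_size / binary_size:.1f}x")
```

The binary form is 25 bytes per record in this layout, while the JSON form repeats every field name as text in every record, so the gap grows with the number of fields and is multiplied across every record ingested.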

Google BigQuery: BigQuery is a RESTful web service that enables interactive analysis of large datasets, working in conjunction with Google Storage. It is an Infrastructure as a Service (IaaS) offered by Google. When evaluated against the key J-Flow analysis requirements, BigQuery falls short on data throughput at 100K records per second, given that a large operator network can generate tens of millions of J-Flow records per second.

A New Approach to Big Data J-Flow Analysis

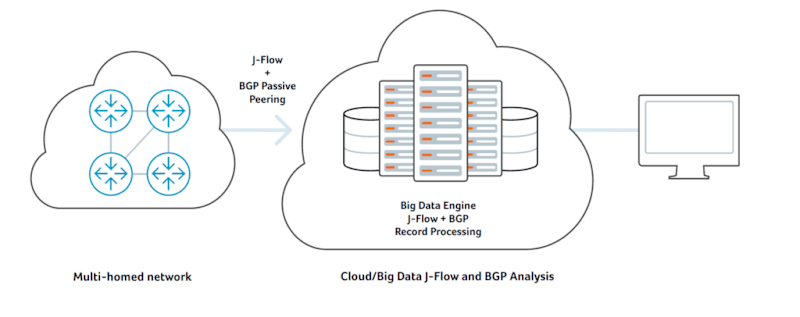

In order to meet all the above requirements for a J-Flow big data back-end, Kentik chose to create a purpose-built big data engine that leverages the following key elements, all of which are critical to a successful implementation:

- A clustered ingest layer to receive and process flow data. The data is unified, augmented, stored, and indexed into a second cluster of data storage nodes. Data is stored in columnar format, sliced by time, for months to years, and is also written to a set of in-RAM machines for hyper-rapid access to the most recent data.

- A front end/API that uses an industry-standard language. All front end queries, whether issued by the UI portal or through client APIs, are written in PostgreSQL-compatible SQL. They are parsed by a cluster of Postgres servers and passed to the data engine, which breaks up each query by time and target, checks a persistent subquery cache, doles out sub-queries to the backend nodes that have the data, and combines and returns the results.

- A scale-out metadata layer, used to track data across the nodes from index time to and through optional purge.

- Caching of query results by one-minute and one-hour time periods to support sub-second response to operational queries and alerts across tens of terabytes of available data.

- Full support of compression for file storage to provide both storage and I/O read efficiency.

- Rate-limiting of ad-hoc, un-cached queries to guard system usability against large new queries that require scanning massive on-disk data sets for the first time.
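The idea of breaking a query up by time and consulting a sub-query cache, as described in the list above, can be sketched in a few lines. This is an illustrative sketch only, not Kentik's implementation: the names `split_by_minute`, `SubqueryCache`, and `fake_scan` are hypothetical, an in-memory dictionary stands in for a persistent cache, and the "scan" simply returns a fixed number per one-minute bucket. Query ranges are rounded out to whole buckets.

```python
from typing import Callable, Dict, List, Tuple

BUCKET_SECONDS = 60  # one-minute slices, matching the caching granularity in the text


def split_by_minute(start: int, end: int) -> List[int]:
    """Return start timestamps of the one-minute buckets covering [start, end)."""
    first = start - start % BUCKET_SECONDS  # align down to a bucket boundary
    return list(range(first, end, BUCKET_SECONDS))


class SubqueryCache:
    """Caches per-bucket results so overlapping queries rescan only new buckets."""

    def __init__(self, scan: Callable[[str, int], int]) -> None:
        self._scan = scan  # stand-in for sending a sub-query to a storage node
        self._results: Dict[Tuple[str, int], int] = {}
        self.scans = 0  # number of sub-queries that missed the cache

    def total(self, query: str, start: int, end: int) -> int:
        """Sum the per-bucket results for one query over a time range."""
        total = 0
        for bucket in split_by_minute(start, end):
            key = (query, bucket)
            if key not in self._results:
                self._results[key] = self._scan(query, bucket)
                self.scans += 1
            total += self._results[key]
        return total


def fake_scan(query: str, bucket: int) -> int:
    """Hypothetical backend: pretend every one-minute bucket holds 100 bytes."""
    return 100


cache = SubqueryCache(fake_scan)
first = cache.total("bytes by dst", 0, 300)     # five buckets, all cache misses
second = cache.total("bytes by dst", 120, 420)  # three buckets reused, two new
print(first, second, cache.scans)  # prints "500 500 7"
```

Because results are keyed by query and time bucket, a dashboard that re-runs the same query over a sliding window only needs to scan the newest buckets, which is what makes sub-second response plausible for recurring operational queries.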

Effective real-time network visibility is a never-ending and ever-growing challenge that has outpaced the rate of innovation by traditional network management vendors and products. Kentik has pioneered a clustered big data approach that leapfrogs the scalability, flexibility, and cost-effectiveness barriers that have long limited legacy approaches.

Using any of the standard big data distributions (such as Hadoop, ELK, and BigQuery) can lead to partial success against the needs of truly effective real-time J-Flow analysis, but typically at a TCO level that is unacceptably high for any organization lacking legions of experienced programmers and unlimited systems resources.

More Reading

To learn more about Kentik’s approach to big data J-Flow analysis, see these blog posts:

Inside the Kentik Data Engine, Part 1

To learn more about Kentik’s SaaS Big Data J-Flow analysis, network performance monitoring and DDoS protection solution, visit the Kentik Detect product page.