When DNS Fails in the Cloud: DNS Performance and Troubleshooting

Summary

Cloud Solution Architect Ted Turner describes the process of migrating all of a SaaS platform's applications to the cloud, and shares key takeaways on why building an observability platform that understands both application state and the underlying infrastructure is key to maintaining uptime for customers.

A while back I was working for a large-scale SaaS platform. We were in the process of migrating all of our applications into the cloud. Our application stack relied on tremendous amounts of east-west traffic to help the various application services understand state.

To facilitate the migration of our applications we split DNS into three tiers:

- Data center-based resolution from the cloud over VPN, to DC-hosted DNS resolvers

- Cloud-based DNS for our partners and customers (SaaS DNS)

- Cloud-based DNS for resolution within our cloud service provider (PaaS DNS)

Cloud providers will rate-limit your resources to ensure they can provide service to all of their customers. Given those rate limits, the decision to use an external SaaS-based DNS service made sense: it provided some security logging and scaled to our performance needs without the rate limits.

Architect your DNS to account for any DNS-related outage (CSP-based, SaaS-hosted, or internally hosted) while improving network observability.

What we learned after our outage is that our SaaS DNS was reached from our cloud service provider across a dedicated BGP private peering link. The private peering was shared across two routers on each side.

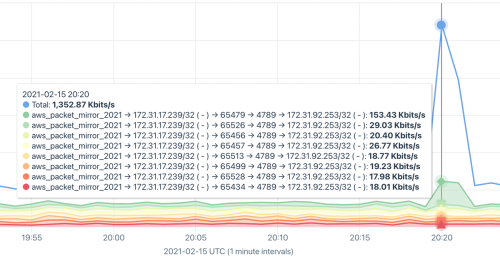

The problem first surfaced when our APM suite showed massive outages across our application stack. Because of our east-west traffic patterns, we needed to look at both our data center and our cloud resources. The APM suite showed that calls between our applications were timing out. Of note, none of our CSP partner-based services showed any degradation or timeouts. Knowing that those services were working well, we focused our efforts on the two other legs of our application stack: DC-related infrastructure and SaaS-hosted infrastructure.

We opened technical tickets with both our cloud service provider and our SaaS-hosted provider, and started investigating whether this was a cloud service provider issue (it was), a SaaS partner issue, or a data center issue.

About 20 minutes after our application stack impact alarms sounded, we noted resources were starting to come back online.

While we were troubleshooting with our cloud service provider's technical team, the provider identified a single router failure inside the CSP that affected all traffic routed towards our SaaS DNS provider. The fix was an automated re-provisioning of the router connected to the dedicated private BGP peer. As soon as the dying router was removed from service, the previously impacted traffic to the DNS SaaS provider started flowing as expected. Once DNS traffic normalized, the application stacks could begin executing recovery operations for each of their components. Teams with more mature applications were able to stabilize automatically. Teams with leaner or less mature applications had to help their software recover by hand.

While the CSP fixed the issue in about 20 minutes, we still had a gaping hole in the stability of our application stack. Every time our CSP or SaaS provider experienced a performance or stability issue, our application stack would be impacted too. We needed to find where that dependency (DNS) was built into our application stack, and find a way to allow for fast healing. The end goal is to take care of our customers and avoid impacts.

The router on the CSP side was failing, which broke its forwarding path. During the post mortem we identified that 50% of our traffic headed to our SaaS DNS provider was lost upon leaving our cloud service provider. The router was dying, but had not degraded far enough to drop its BGP adjacency, so traffic was still being sent to it.
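
To get a feel for why losing half of the DNS traffic was so damaging, the arithmetic can be worked through in a few lines. This is only a sketch under a simplifying assumption that is not from the incident itself: every lookup attempt is lost independently with the same probability. Real routers often pin a flow to one path, so actual behavior may differ. The function names are hypothetical.

```python
def lookup_failure_probability(loss_rate: float, attempts: int) -> float:
    """Probability that every attempt of a single lookup is lost.

    Assumes each attempt is lost independently with probability `loss_rate`.
    """
    if not 0.0 <= loss_rate <= 1.0:
        raise ValueError("loss_rate must be between 0 and 1")
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    return loss_rate ** attempts


def request_success_probability(loss_rate: float, attempts: int, lookups: int) -> float:
    """Probability that all `lookups` uncached lookups behind one request succeed."""
    per_lookup_success = 1.0 - lookup_failure_probability(loss_rate, attempts)
    return per_lookup_success ** lookups


if __name__ == "__main__":
    # 50% loss, as seen in the post mortem, with 1 to 3 attempts per lookup.
    for attempts in (1, 2, 3):
        p_fail = lookup_failure_probability(0.5, attempts)
        print(f"attempts={attempts}: lookup fails with probability {p_fail:.3f}")
    # Hypothetical request that needs 5 fresh lookups, 2 attempts each.
    print(f"request succeeds with probability {request_success_probability(0.5, 2, 5):.3f}")
```

Under these assumptions, a client that tries each lookup twice would still fail one lookup in four, and a request that depends on several fresh lookups fares worse.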

The CSP management plane identified a failing condition in the router. At about the 20-minute mark of impact to the application stack, the dying router was removed from production, providing immediate relief. The CSP management plane automatically deployed a new router with associated BGP peering.

At this point we started an internal review of how the application stack was built and identified a single point of failure in our OS configuration. Each of our DNS configuration settings had been uniquely built, tuned, and configured for a very specific slice of our application stack's needs.

The APM suite is great for instrumented code, but it had a hard time identifying that DNS and the router path were impacting the application stack. Application stack calls that time out can have a wide variety of causes. Building your observability platform to understand both the application state and the underlying infrastructure is key to building and maintaining uptime for customers.

Upon review of our OS configurations, we decided to implement a few changes:

- Allow for caching of resolved DNS queries (Dnsmasq, Unbound)

- Allow for DNS queries to be resolved by two providers

  - SaaS DNS provider

  - CSP DNS provider

- Remove the Java negative TTL, so that failed DNS lookups are not cached by the JVM
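
The combined effect of these changes can be illustrated with a small application-level sketch. The changes described above were made in the OS configuration (for example with a local caching resolver), so this is not the actual implementation; it only mimics the behavior: try two providers in order, cache successful answers, and never cache failures. The class name, the resolver functions, the hostname, and the 30-second TTL are all assumptions made for illustration.

```python
import time
from typing import Callable, Dict, List, Optional, Sequence, Tuple

# A resolver takes a hostname and returns a list of IP address strings.
# It is expected to raise an exception on failure or timeout.
Resolver = Callable[[str], List[str]]


class FallbackCachingResolver:
    """Try each resolver in order and cache successful answers for `ttl` seconds."""

    def __init__(self, resolvers: Sequence[Resolver], ttl: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        if not resolvers:
            raise ValueError("at least one resolver is required")
        self._resolvers = list(resolvers)
        self._ttl = ttl
        self._clock = clock
        # hostname -> (expiry time, addresses)
        self._cache: Dict[str, Tuple[float, List[str]]] = {}

    def resolve(self, hostname: str) -> List[str]:
        now = self._clock()
        cached = self._cache.get(hostname)
        if cached is not None and cached[0] > now:
            return cached[1]  # still fresh, no network traffic needed

        last_error: Optional[Exception] = None
        for resolver in self._resolvers:
            try:
                addresses = resolver(hostname)
            except Exception as exc:  # any failure moves on to the next provider
                last_error = exc
                continue
            if addresses:
                self._cache[hostname] = (now + self._ttl, addresses)
                return addresses
        # Failures are deliberately not cached, so the next call retries at once.
        raise LookupError(f"all resolvers failed for {hostname}") from last_error


if __name__ == "__main__":
    def broken_saas_dns(hostname: str) -> List[str]:  # hypothetical failing provider
        raise TimeoutError("no response")

    def csp_dns(hostname: str) -> List[str]:  # hypothetical healthy provider
        return ["10.0.0.7"]

    resolver = FallbackCachingResolver([broken_saas_dns, csp_dns])
    print(resolver.resolve("orders.internal.example"))
```

Passing the resolvers in as plain functions keeps the sketch free of network access; in practice the same ordering and caching would more likely live in the local resolver's configuration than in application code.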

More Takeaways

- APM suites are key to understanding the health of microservices and service mesh implementations

- Monitor the health of your CSP, SaaS providers, and all paths connecting these resources

- DNS flows should be 1:1 for day-to-day traffic, with one response for every query (in our case, a 50% loss in DNS “return” traffic killed the application stack)

- A reduction in DNS return traffic from any of your DNS servers may indicate a failure in the routing paths
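
The last two takeaways can be turned into a simple check: compare responses received with queries sent for each DNS server, and flag any server whose ratio drops. The sketch below assumes the counters are already being collected somewhere (for example from resolver statistics or flow logs); the data class, the function names, the server labels, and the 0.95 threshold are hypothetical and would need tuning for a real environment.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class DnsFlowCounters:
    """Query and response counts for one DNS server over one sampling window."""
    server: str
    queries_sent: int
    responses_received: int


def response_ratio(counters: DnsFlowCounters) -> float:
    """Fraction of queries that received a response (1.0 if nothing was sent)."""
    if counters.queries_sent == 0:
        return 1.0
    return counters.responses_received / counters.queries_sent


def servers_with_return_loss(samples: Iterable[DnsFlowCounters],
                             min_ratio: float = 0.95) -> List[str]:
    """Return the servers whose response ratio fell below `min_ratio`."""
    return [s.server for s in samples if response_ratio(s) < min_ratio]


if __name__ == "__main__":
    window = [
        DnsFlowCounters("saas-dns", queries_sent=1000, responses_received=500),
        DnsFlowCounters("csp-dns", queries_sent=1000, responses_received=998),
    ]
    print(servers_with_return_loss(window))
```

A check like this does not say which hop is dropping packets, only that return traffic no longer matches what was sent, which is a useful prompt to look at the routing path rather than at the application.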