Cloudflare's DNS Downtime: Why BGP Hijacks Were Never to Blame

Summary

On July 14, Cloudflare’s popular public DNS service (known as 1.1.1.1) suffered an outage lasting over an hour. As rumors swirled about the cause, we were the first to push back on the theory that a BGP hijack had caused the outage. In fact, the hijack was a consequence of the outage, not its cause. How did we know this so early when other internet watchers did not? We’ll explain in this post.

Pardon the tardiness of this analysis. I was heading out on vacation with my family when this incident occurred, so I haven’t had time to write up my thoughts until now. But I’d like to discuss why it was immediately evident to me (though apparently not to others) that this outage was not caused by a BGP hijack, as was initially suspected.

Cloudflare DNS outage

On July 14, Cloudflare’s public DNS service experienced a global outage. Immediately, the technical community began searching for clues to explain the outage, and an initial theory took hold — a BGP hijack was the culprit! After all, the internet is held together by chewing gum and string, right?

Two monitoring vendors and at least one social media “security expert” came out blaming the Cloudflare DNS outage on the hijack, which, in the end, turned out not to be the cause (the vendors have since published corrections). When I first looked into the outage, I immediately ruled out a BGP hijack as the cause and did my level best to set everyone straight. To me, the BGP hijack was clearly a consequence of the outage, not what caused it.

Why was it so immediately clear to me and not others? Let’s take a look at my process.

The value of plotting reachability

Cloudflare’s public DNS Resolver is commonly known for its easy-to-remember “quad-one” IP address, 1.1.1.1. But this service relies on more than just 1.1.1.0/24. From the beginning, it has also used 1.0.0.0/24 and, for IPv6, 2606:4700:4700::/48 (and, according to Cloudflare’s after-action report, several other prefixes that I didn’t know about).
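To make the point concrete, here is a minimal Python sketch, using only the standard `ipaddress` module, that maps the well-known resolver addresses (1.1.1.1, 1.0.0.1, and 2606:4700:4700::1111) to the three prefixes named above. `RESOLVER_PREFIXES` and `covering_prefix` are illustrative names, and the list deliberately omits the additional prefixes mentioned in Cloudflare’s report.

```python
import ipaddress
from typing import Optional, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]

# The three prefixes discussed in this post (not an exhaustive list).
RESOLVER_PREFIXES = [
    ipaddress.ip_network("1.1.1.0/24"),
    ipaddress.ip_network("1.0.0.0/24"),
    ipaddress.ip_network("2606:4700:4700::/48"),
]

def covering_prefix(address: str) -> Optional[Network]:
    """Return the listed prefix that contains `address`, or None."""
    addr = ipaddress.ip_address(address)
    for net in RESOLVER_PREFIXES:
        # A membership test across IP versions simply returns False.
        if addr in net:
            return net
    return None

for a in ("1.1.1.1", "1.0.0.1", "2606:4700:4700::1111"):
    print(a, "->", covering_prefix(a))
```

Each resolver address lives in a different prefix, so losing any one of these routes takes a slice of the service offline. That is why all three routes had to be checked.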

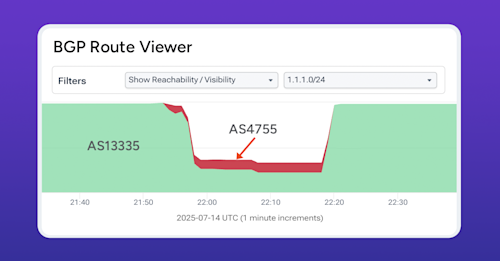

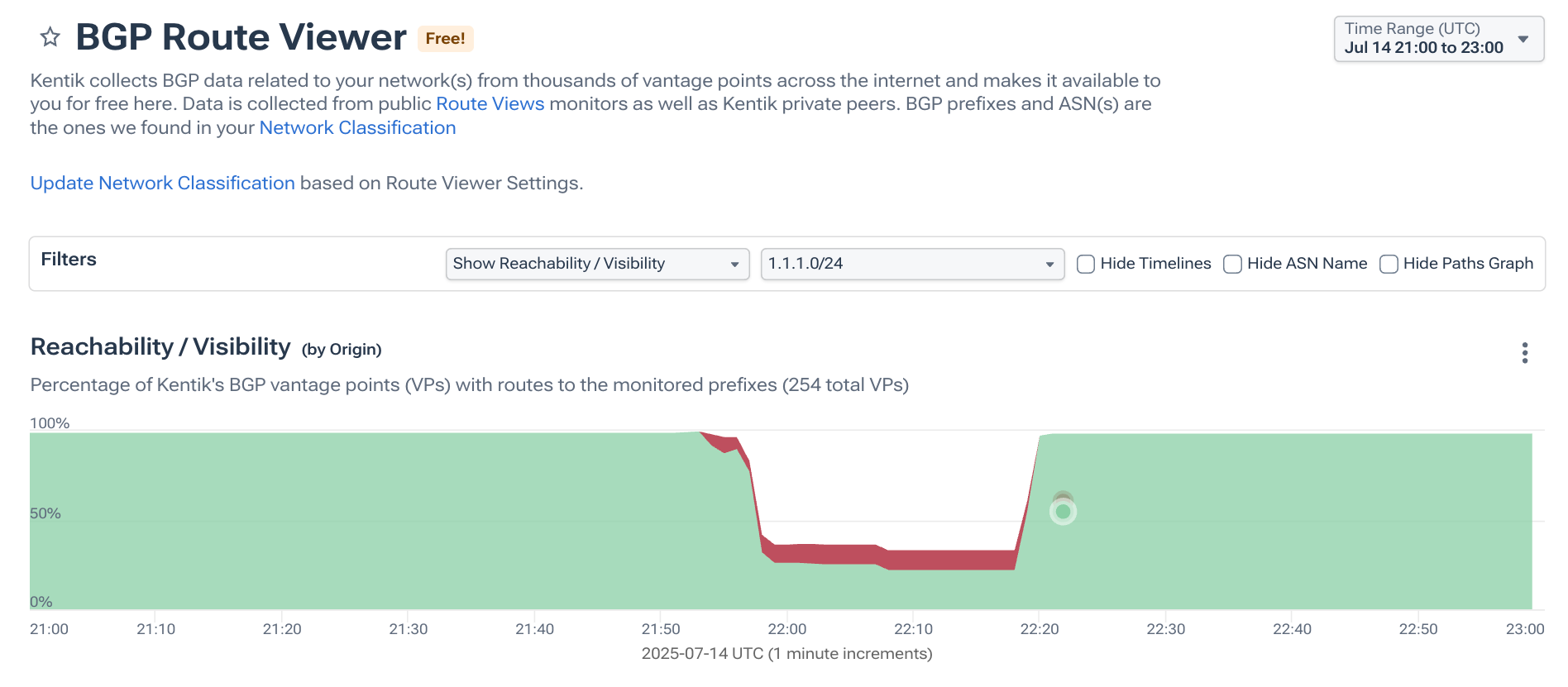

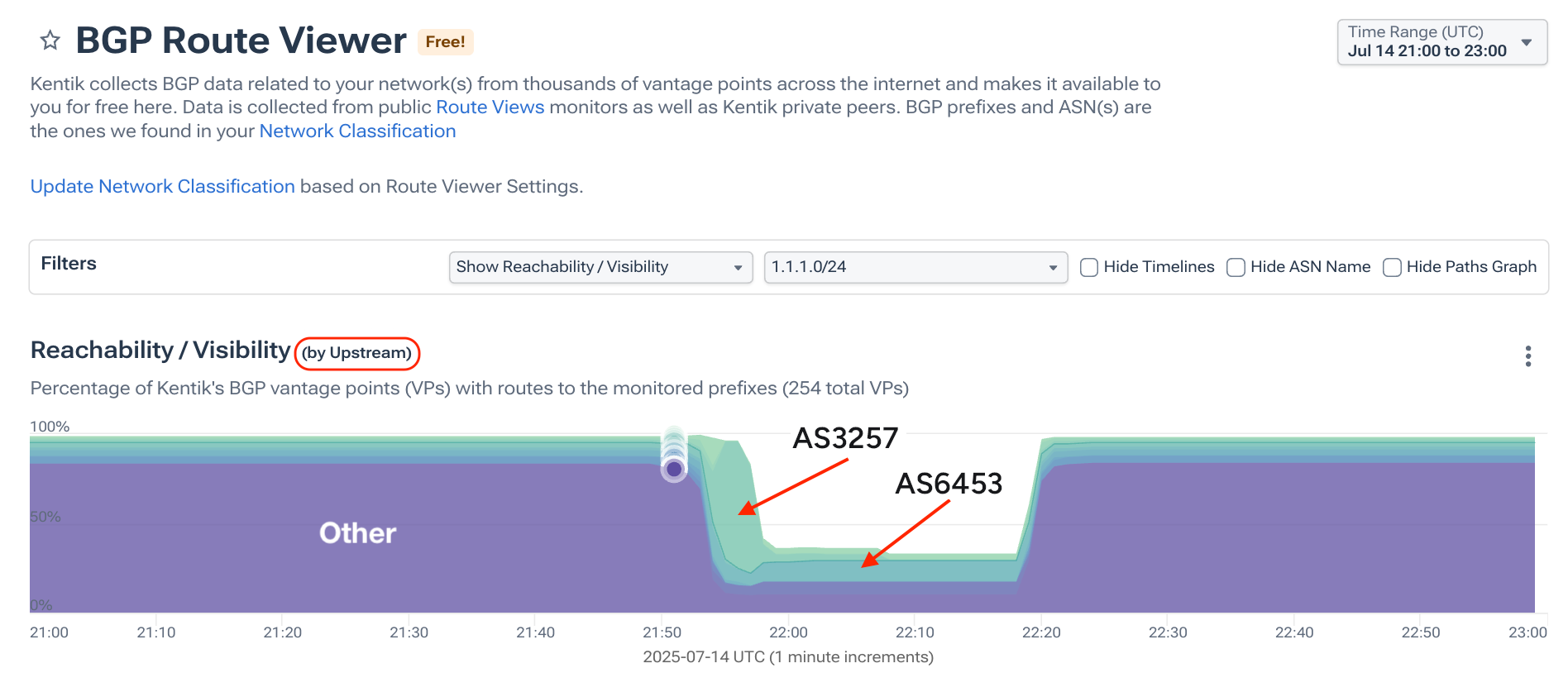

As someone who does a lot of BGP analysis, I first checked to see what was happening with those prefixes during the outage. When I pulled up 1.1.1.0/24 in Kentik’s BGP Route Viewer, I could see the picture below. There was another origin for the route (marked in red because it was RPKI-invalid), but the primary phenomenon was that the propagation of the route had dropped dramatically. Cloudflare had withdrawn this route, and that was a bigger issue than the unexpected origin that didn’t propagate very far.

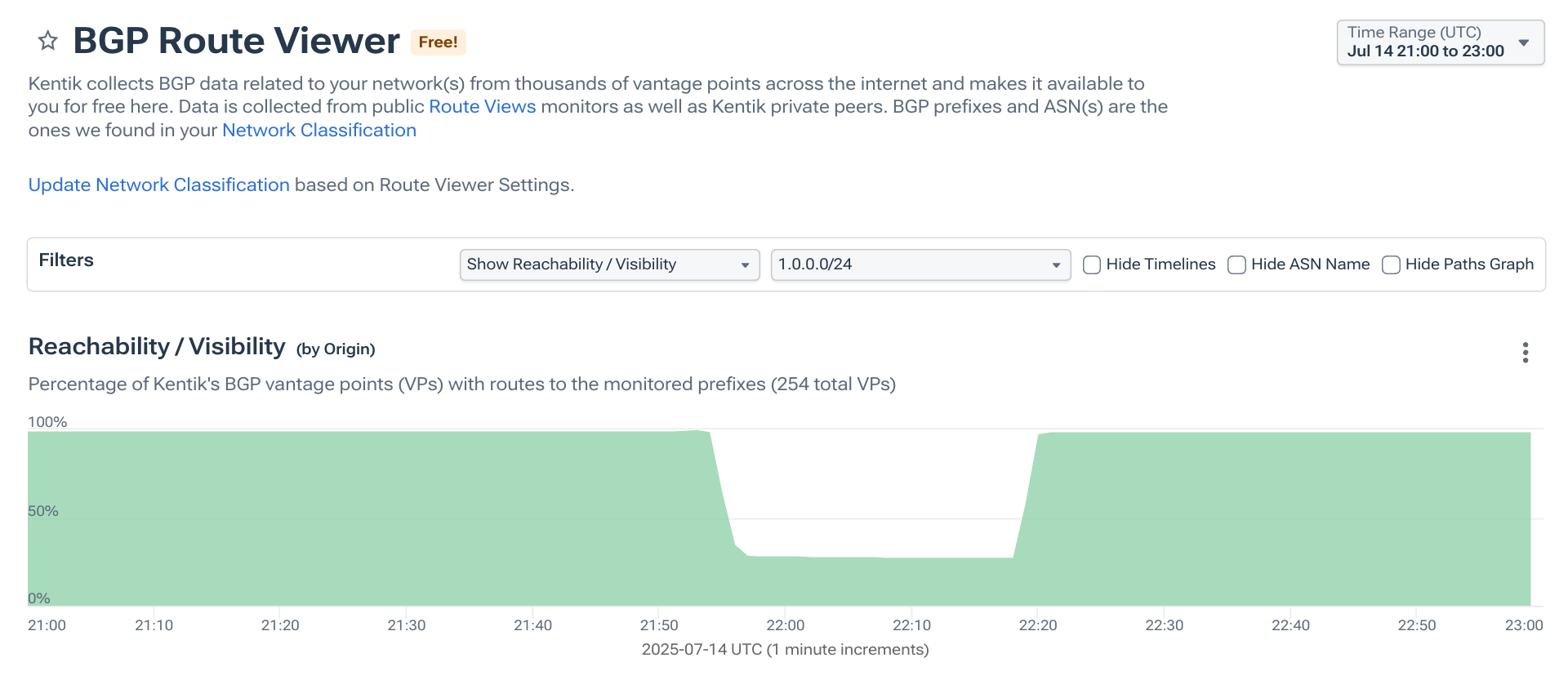

Checking on 1.0.0.0/24 (pictured below) showed that this route had also been (almost completely) withdrawn. Notably, there was no hijack this time.

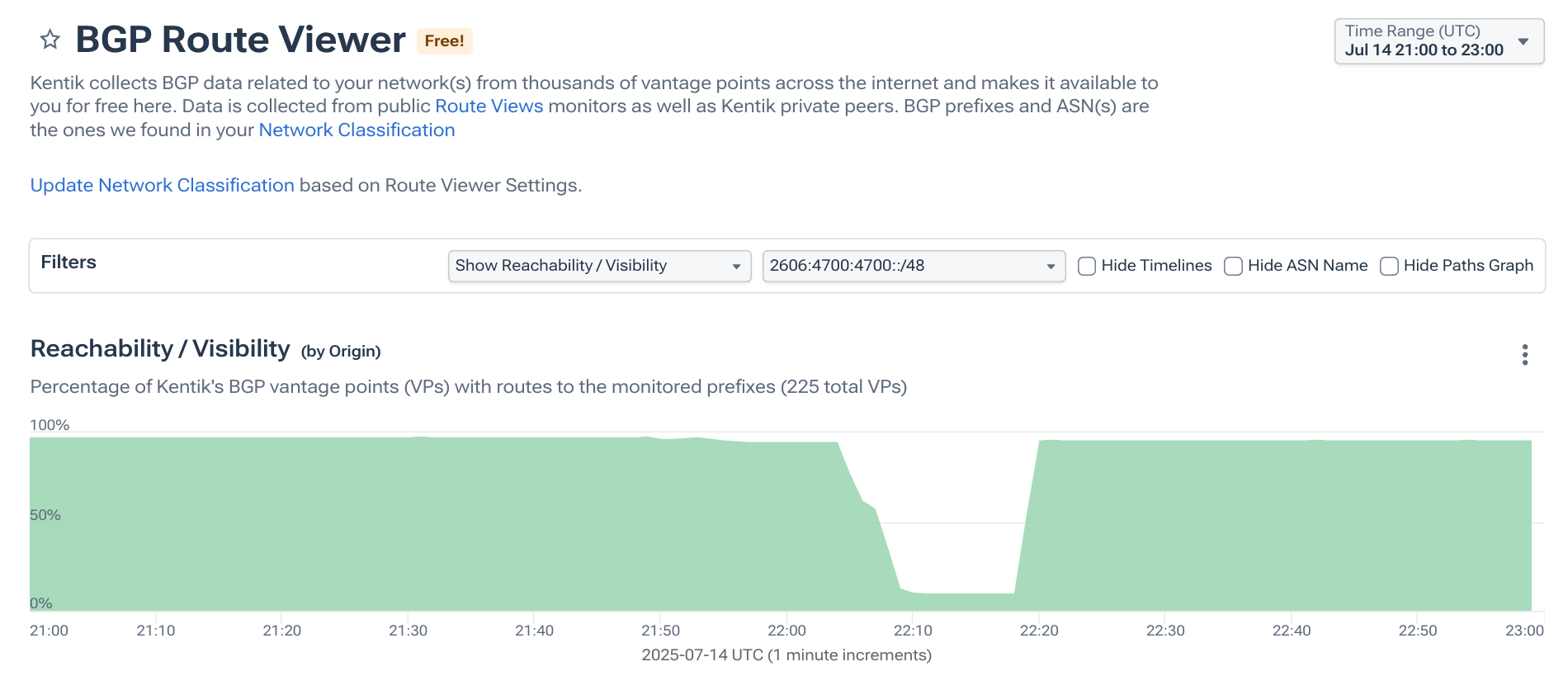

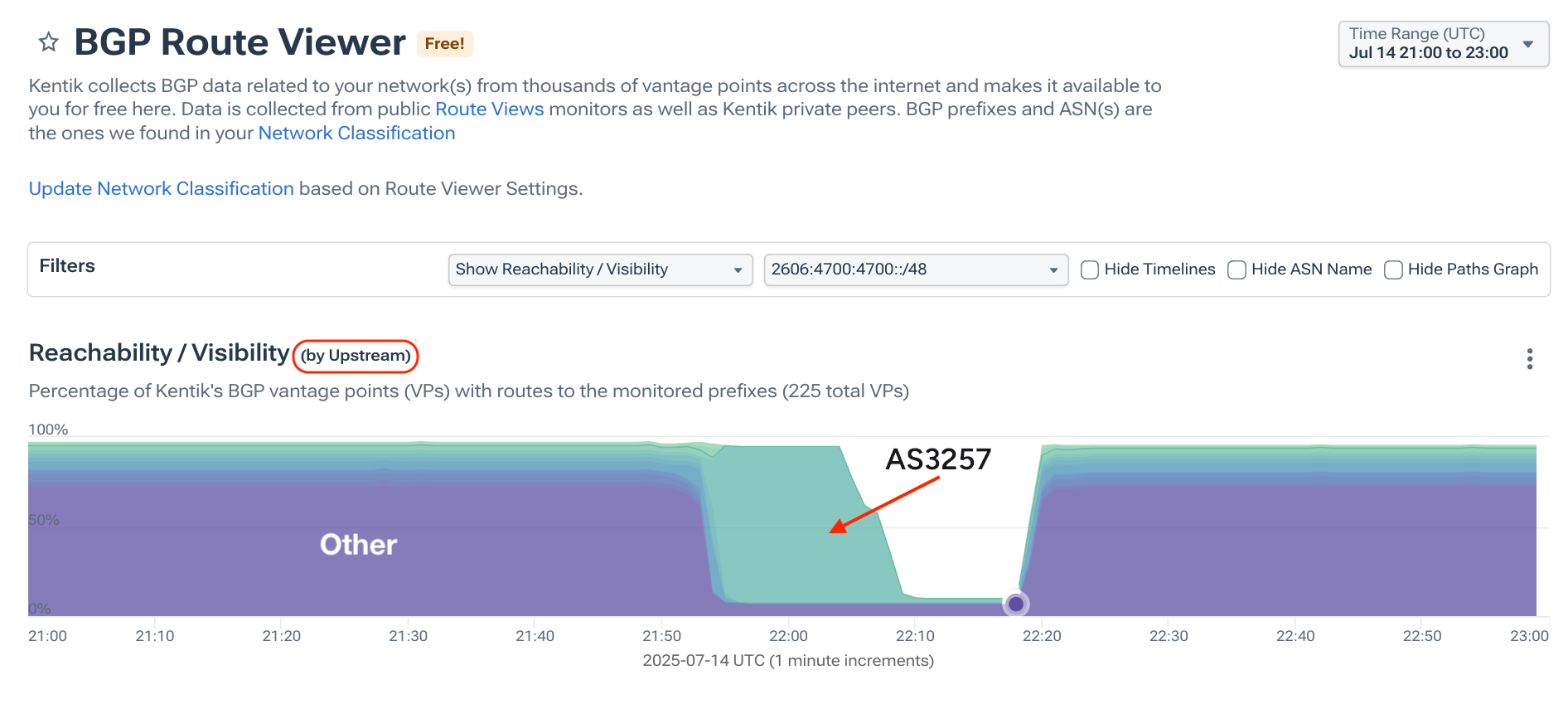

Lastly, I checked the IPv6 route, pictured below, and saw that it too had been (almost completely) withdrawn without experiencing a hijack, although the timing of the withdrawal was a little different (we’ll discuss that in a bit).

From these views, I could immediately conclude that AS13335 (Cloudflare) had withdrawn these routes, something that AS4755’s announcement of 1.1.1.0/24 could not possibly have caused. The withdrawal of these routes was what caused the global outage.
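The reasoning above can be expressed as a rough heuristic: ask where the rightful origin’s lost visibility went. If it went mostly to “no route at all,” the origin withdrew. If it went mostly to another origin AS, a hijack is plausible. The Python sketch below assumes a hypothetical input format (a snapshot mapping each BGP vantage point to the origin AS it sees for a prefix, or `None` if it sees no route). The function names and thresholds are invented for illustration, and this is not how Kentik’s BGP Route Viewer works.

```python
from collections import Counter
from typing import Mapping, Optional

# Hypothetical input: vantage-point ID -> origin ASN of the route it carries
# for one prefix, or None if it carries no route at all.
Snapshot = Mapping[str, Optional[int]]

def origin_shares(snapshot: Snapshot) -> Counter:
    """Fraction of vantage points seeing each origin (None = no route)."""
    total = len(snapshot)
    return Counter({origin: n / total
                    for origin, n in Counter(snapshot.values()).items()})

def diagnose(before: Snapshot, during: Snapshot, expected_origin: int,
             min_drop: float = 0.1) -> str:
    """Rough classification of a visibility drop (thresholds are assumptions)."""
    b, d = origin_shares(before), origin_shares(during)
    lost = b[expected_origin] - d[expected_origin]  # visibility the rightful origin lost
    to_nothing = d[None] - b[None]                  # vantage points left with no route
    if lost < min_drop:
        return "no significant change"
    if to_nothing >= lost / 2:
        # Most lost visibility became "no route": the origin withdrew. A competing
        # origin cannot force the rightful origin to withdraw its own route.
        return "withdrawal"
    # Most lost visibility moved to some other origin AS.
    return "possible hijack"

# Synthetic example: 10 vantage points, all seeing AS13335 beforehand.
before = {f"vp{i}": 13335 for i in range(10)}
during = {f"vp{i}": None for i in range(7)}
during.update({"vp7": 4755, "vp8": 13335, "vp9": 13335})
print(diagnose(before, during, expected_origin=13335))  # prints "withdrawal"
```

In the synthetic example, one vantage point picks up the AS4755 route, but seven are left with nothing. That mirrors the shape described above, where the unexpected origin was a minor feature next to the collapse of the legitimate route.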

The past is prologue

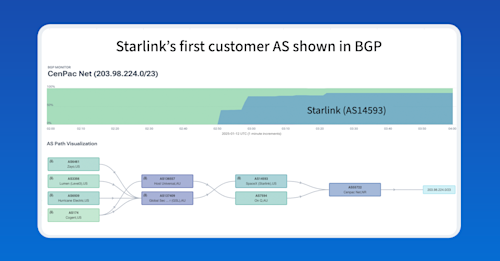

It is important to recall the history of 1.1.1.0/24. Before Cloudflare began using this range for its DNS service, 1.1.1.0/24 had a quasi-reserved status. It was technically routable, but it generally hadn’t been announced. Many network admins around the world used it for internal numbering, assuming it would never actually be routed. It was also commonly used in networking textbooks as an example IP range for labs and demos.

In fact, when Cloudflare began announcing this route back in 2018, it attracted (and probably still does) a river of garbage traffic from misconfigured networks around the world trying to send traffic to this address range. Perhaps Cloudflare doesn’t get enough credit for filtering out that mess in order to provide this service.

For the same reasons, 1.1.1.0/24 is also a commonly hijacked range. This isn’t necessarily because people are trying to take down Cloudflare’s quad-one service, but because their internal routing has leaked out onto the internet. Because of Cloudflare’s massive peering base, these hijacks typically get overwhelmed by the routes of the enormous content provider.

That is, until that content provider withdraws its own routes, providing space for those mistakes to propagate. As I stated during the outage, the hijack was, in fact, a consequence of the outage, rather than the cause.
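A toy model shows how a leaked route can sit in the shadows until the legitimate route disappears. The sketch below is a deliberate simplification. It assumes each vantage point picks the candidate with the shortest AS path, whereas real BGP compares LOCAL_PREF first and applies several other tie-breakers. The topology, paths, and documentation-range ASNs (64500, 64501) are invented for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set, Tuple

@dataclass(frozen=True)
class Route:
    origin: int
    as_path: Tuple[int, ...]  # from the vantage point's neighbor to the origin

def best_route(candidates: List[Route]) -> Optional[Route]:
    # Simplification: shortest AS path wins; real BGP compares LOCAL_PREF first.
    return min(candidates, key=lambda r: len(r.as_path), default=None)

def visible_origins(rib: Dict[str, List[Route]],
                    withdrawn_origins: Set[int]) -> Dict[str, Optional[int]]:
    """Origin AS each vantage point selects once `withdrawn_origins` withdraw."""
    selected: Dict[str, Optional[int]] = {}
    for vp, candidates in rib.items():
        live = [r for r in candidates if r.origin not in withdrawn_origins]
        best = best_route(live)
        selected[vp] = best.origin if best is not None else None
    return selected

# Toy RIB (invented): the legitimate route arrives over short peering paths,
# while the leaked route (origin AS4755) arrives only over longer transit paths.
TOY_RIB = {
    "vp1": [Route(13335, (13335,)), Route(4755, (64500, 6453, 4755))],
    "vp2": [Route(13335, (64501, 13335))],
    "vp3": [Route(13335, (13335,)), Route(4755, (6453, 4755))],
}

print(visible_origins(TOY_RIB, set()))    # legitimate origin wins everywhere
print(visible_origins(TOY_RIB, {13335}))  # leak surfaces once AS13335 withdraws
```

While AS13335’s route is present, the leak loses at every vantage point that has both. Once that route is withdrawn, the leak becomes the only candidate wherever it propagated, and vantage points that never heard the leak are left with no route at all.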


RPKI ROV impact

Why didn’t RPKI ROV help? Well, it did, somewhat. 1.1.1.0/24 (and the others) have ROAs that assert AS13335 as the rightful origin. ROV did limit the propagation of the route from AS4755, but it was powerless to address an outage that, in the end, was caused by an internal configuration error. AS4755’s route propagated as far as it did because its transit provider, sister network AS6453, didn’t reject its RPKI-invalid route. That’s a problem.

We saw something similar during the Orange España outage at the beginning of last year. In that instance, a hacker managed to intentionally render many of AS12479’s routes RPKI-invalid, greatly limiting their propagation. One network that wasn’t rejecting those invalid routes was its transit provider, sister network AS5511.

The lesson here is that even if another AS is a sister network, you should still reject RPKI-invalid routes that come from it.
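For readers who want the mechanics, here is a minimal Python sketch of route origin validation in the style of RFC 6811. A route is Valid if a covering ROA matches its origin AS and its prefix length is within the ROA’s maxLength. It is Invalid if covering ROAs exist but none match, and NotFound otherwise. The sketch then adds an import filter that rejects Invalid routes regardless of which neighbor sent them. The ROA shown is a hypothetical stand-in, including its assumed maxLength of 24. `SISTER_ASNS` and `accept_with_sister_exemption` are invented to illustrate the failure mode; we don’t know how AS6453’s policy is actually configured.

```python
import ipaddress
from dataclasses import dataclass
from enum import Enum
from typing import Iterable, Set, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]

class Validity(Enum):
    VALID = "valid"
    INVALID = "invalid"
    NOT_FOUND = "not-found"

@dataclass(frozen=True)
class ROA:
    prefix: Network
    max_length: int
    asn: int

def validate(prefix: str, origin_asn: int, roas: Iterable[ROA]) -> Validity:
    """Route origin validation in the style of RFC 6811."""
    route = ipaddress.ip_network(prefix)
    # ROAs whose prefix covers the route (same IP version, equal or shorter prefix).
    covering = [r for r in roas
                if r.prefix.version == route.version and route.subnet_of(r.prefix)]
    if not covering:
        return Validity.NOT_FOUND
    if any(r.asn == origin_asn and route.prefixlen <= r.max_length for r in covering):
        return Validity.VALID
    return Validity.INVALID

# Hypothetical ROA: 1.1.1.0/24 may only be originated by AS13335 (maxLength assumed).
ROAS = [ROA(ipaddress.ip_network("1.1.1.0/24"), 24, 13335)]

# Hypothetical set of neighbors this network treats as sister networks.
SISTER_ASNS: Set[int] = {4755}

def accept_with_sister_exemption(prefix: str, origin: int, neighbor: int) -> bool:
    # Anti-pattern: skipping the invalid check for a sister network.
    if neighbor in SISTER_ASNS:
        return True
    return validate(prefix, origin, ROAS) is not Validity.INVALID

def accept(prefix: str, origin: int, neighbor: int) -> bool:
    # Reject RPKI-invalid routes no matter who the neighbor is
    # (`neighbor` is unused; it is kept for a matching signature).
    return validate(prefix, origin, ROAS) is not Validity.INVALID

print(accept_with_sister_exemption("1.1.1.0/24", 4755, 4755))  # True: leak accepted
print(accept("1.1.1.0/24", 4755, 4755))                        # False: leak rejected
```

The only difference between the two filters is the exemption. The sketch shows that a single trusted adjacency is enough to let an RPKI-invalid route escape into the wider internet.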

Unusual BGP behavior

Now, let’s circle back to the differences between the shapes of the propagations of the routes above. This section is really for the nerdiest BGP analysis nerds; everyone else can stop reading now. 😀

One unique feature of Kentik’s BGP Route Viewer is the ability to pivot the view to get a sense of which penultimate networks (upstreams) are used to reach the origin for that route during that period of time. We also have the traditional ball-n-stick visualization, but that fails to convey certain phenomena.

Below is a screenshot of that view. Each “upstream” is rendered as a separate data series in the stackplot unless it is used by fewer than 1% of BGP sources, in which case it is tossed into the “Other” data series. Since Cloudflare has a very large peering base, most BGP sources have their own unique adjacencies with AS13335, so those upstreams fall below the threshold and appear namelessly in the Other bucket, in purple below.

That purple Other bucket begins to drop away at 21:53 UTC, when the outage began and AS13335 started withdrawing the route. However, 1.1.1.0/24 remains in the global routing table for another five minutes because AS3257 continues announcing the route (with AS13335 as the origin) until finally releasing it. We can also see AS6453 as the upstream of the hijacked route originated by AS4755.

We see the same phenomenon with the IPv6 route, shown below. The purple Other drops away at 21:53 UTC when AS13335 withdraws its routes, but AS3257 continues telling the internet that it has a path to AS13335 for another 15 minutes before finally withdrawing the route.

1.0.0.0/24 is the only one of the three routes discussed above that did not experience this phenomenon, and, as a result, its graphic above most closely matches the timeline of the outage. I’ve observed this phenomenon in the past, and it usually means there is a faulty router somewhere in AS3257’s network that isn’t withdrawing routes when it should.
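The two ideas in this section can be sketched in Python: pivoting on the penultimate AS, and spotting an upstream that keeps announcing a route after the origin has withdrawn it. This is an illustrative approximation, not Kentik’s implementation. The input formats are assumed: AS paths are ordered from the BGP source toward the origin, and the time series holds per-upstream shares. The 1% threshold mirrors the description above, while the two-minute tolerance is an arbitrary choice. A flagged upstream is consistent with a stuck route but does not prove one.

```python
from collections import Counter
from datetime import datetime, timedelta
from typing import Dict, List, Sequence, Tuple

def upstream_shares(paths: Dict[str, Sequence[int]],
                    threshold: float = 0.01) -> Dict[str, float]:
    """Share of BGP sources reaching the origin via each penultimate AS.

    `paths` maps a source ID to its AS path (origin last). Upstreams used by
    fewer than `threshold` of sources are merged into an "Other" bucket.
    """
    counts: Counter = Counter()
    for path in paths.values():
        # Collapse AS-path prepending so a repeated origin ASN is ignored.
        hops = [asn for i, asn in enumerate(path) if i == 0 or asn != path[i - 1]]
        # A one-hop path means the source is the origin itself.
        counts[str(hops[-2]) if len(hops) >= 2 else "origin"] += 1
    total = sum(counts.values())
    shares: Dict[str, float] = {}
    for upstream, n in counts.items():
        key = upstream if n / total >= threshold else "Other"
        shares[key] = shares.get(key, 0.0) + n / total
    return shares

# One sample per time step: {upstream: share of sources using it}.
Series = List[Tuple[datetime, Dict[str, float]]]

def lingering_upstreams(series: Series, withdrawal_start: datetime,
                        tolerance: timedelta = timedelta(minutes=2)
                        ) -> Dict[str, timedelta]:
    """Upstreams still carrying the route well after the origin began withdrawing.

    Maps each flagged upstream to how long after `withdrawal_start` it was last seen.
    """
    last_seen: Dict[str, datetime] = {}
    for ts, shares in sorted(series, key=lambda sample: sample[0]):
        for upstream, share in shares.items():
            if share > 0:
                last_seen[upstream] = ts
    return {u: ts - withdrawal_start for u, ts in last_seen.items()
            if ts - withdrawal_start > tolerance}
```

Feeding `upstream_shares` one snapshot per time step produces the kind of per-upstream series that `lingering_upstreams` consumes. In a pattern like the one described for 1.1.1.0/24, the Other bucket would vanish near the start of the withdrawal, while a single named upstream would be flagged for staying visible minutes later.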

Conclusion

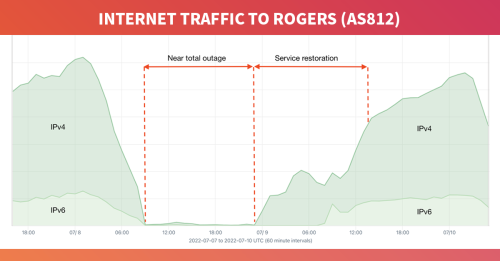

In my write-up of the historic Rogers outage from July 2022, in which I corrected an earlier assessment that the outage was caused by reachability issues, I concluded by defending BGP itself:

I’m here to say that BGP gets a bad rap during big outages. It’s an important protocol that governs the movement of traffic through the internet. It’s also one that every internet measurement analyst observes and analyzes. When there’s a big outage, we can often see the impacts in BGP data, but often these are the symptoms, not the cause. *If you mistakenly tell your routers to withdraw your BGP routes, and they comply, that’s not BGP’s fault.*

According to the post-mortem, a configuration error led Cloudflare’s automation software to mistakenly withdraw the routes associated with the quad-one DNS service. BGP, and RPKI ROV for that matter, worked as designed, but these technologies can’t save you from self-inflicted configuration errors.