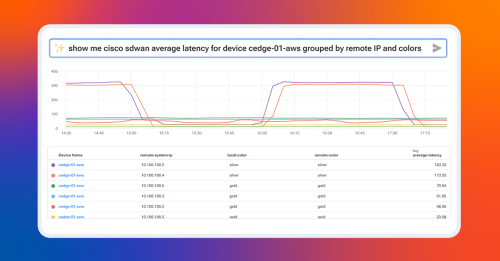

Hi, everyone. Thank you for joining this webinar, "AI-Assisted Network Monitoring: Real or Hype?", a collaboration between Capacity Media and Kentik. My name's Jack Allen. I'm a reporter with Capacity Media, and I'll be hosting the webinar. I'd like to introduce Chris O'Brien, senior principal product manager at Kentik, and Chris's colleague Nina Bargisen, who's director of technical evangelism at Kentik. Chris, Nina, thanks so much for joining us.

During the webinar, Chris and Nina are going to discuss how lean teams tackle the chaos of network monitoring while keeping costs low and ensuring high-quality digital experiences. Can AI meaningfully contribute to this challenge, or is it just hype? You will learn how AI and machine learning have been used in network observability historically, and how to use large language models to accelerate troubleshooting today. Then we'll move on to how to collect and analyze data from diverse sources, what Kentik's newly launched software-as-a-service NMS adds to the picture, and the benefits of streaming telemetry, with a live demonstration.

We'll then have a fifteen-minute Q&A at the end, so please send your questions throughout the webinar using the text box below the video. All of the questions that you ask today will be anonymous. In the unlikely event that you experience any technical difficulties, please just refresh your window and this should fix it. So that's all of the housekeeping from me. I will hand over to Chris and Nina.

Thank you, Jack. So, Chris, I've been wondering, because we were just discussing: is this really hype? I'm getting all of these emails in my inbox these days about AIOps. There's AI this and AI that, and now it's apparently called AIOps. Like DevOps, yes, but now it's AIOps. What is going on, Chris? Do you know? I'm really confused.
Because I'm mostly using AI to help me write.

Yeah. I mean, there has been a lot of quick progress on large language models with stuff like ChatGPT, and I think that's drawing more attention to this. We, and by "we" I mean the network observability industry at large, have been trying to apply AI and ML technologies to the network observability space for at least fifteen years, maybe longer. And certainly a lot of the fundamentals in machine learning have been around for several decades. There's a big shift in attention right now, though. And if we think about what's actually true, it also seems like every company in the world is talking about their new AI thing, because everyone's experimenting in this space and trying to build something useful for folks. I've been working on building network monitoring systems and other network observability tools for about a decade, and I've seen a couple of iterations of how we've applied AI and ML to the space.

The first one may be the most boring one, but it's also probably the most useful one: calculating baselines. The challenge folks have is that they have all of these different finite resources, RAM, CPU, interface utilization, and they have thousands, tens of thousands, hundreds of thousands of these finite resources, and they want to get an alert if they're running out of a resource. Well, all of those resources behave differently, right? For some, you may set a threshold of ninety percent, but memory goes over ninety percent and it's fine. Some may run at fifty percent normally, and if one of those goes to seventy-five percent, that's an alert-worthy event.
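
To make the per-resource threshold problem concrete, here is a minimal sketch of a learned baseline. It assumes a very simple model (mean plus three standard deviations of each resource's own history); the sample values and function names are illustrative, not how any particular NMS computes its baselines.

```python
from statistics import mean, stdev


def baseline_threshold(history, k=3.0):
    """Alert threshold learned from a resource's own history: mean + k * stdev."""
    return mean(history) + k * stdev(history)


def is_anomalous(history, value, k=3.0):
    """True when a new reading is above what is normal for this resource."""
    return value > baseline_threshold(history, k)


# Illustrative data: memory that normally sits above 90%, CPU that sits near 50%.
memory_history = [91, 92, 90, 93, 92, 91, 94, 92]
cpu_history = [49, 51, 50, 52, 48, 50, 51, 49]

print(is_anomalous(memory_history, 93))  # 93% memory is normal for this device
print(is_anomalous(cpu_history, 75))     # 75% CPU is unusual for this device
```

A fixed ninety percent threshold would fire constantly on the memory series and never on the CPU series, which is the opposite of what the operator wants.
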
So what automatic baselines do for you is this: rather than trying to manually set thousands, tens of thousands, hundreds of thousands of thresholds, which simply wouldn't work, you can use machine learning to calculate what is normal for that value. Normal can mean what is normal on average for all of time, but more likely it will mean normal for this time of day, 8 AM as compared to 5 PM as compared to midnight, or for this time of week, a Monday versus a Sunday, or even for this time of year, which some of these models will look at. And that uses a machine learning algorithm called linear regression, which may be one of the most boring ones.

But I know that one. We used that two years ago when I was working in a network planning department. We were just looking at the peaks of traffic, and we would estimate growth. Then we would use that growth to predict when we needed to build for the next year, and plan the budget from that. That was fairly straightforward, and we did not think of that as AI.

Yeah. So there's thresholding. There's also capacity run-out, which linear regression is often used for. It is technically machine learning, but it is so boring and so often used that we don't really think about it as such. But it is the most successful application of machine learning to date, at least over the long term.

The other one that folks have been playing with and getting really excited about is machine learning for alert aggregation and suppression. Everyone has this problem of too many alerts. What's actionable when a hundred alerts go off at the same time? Which ones do I need to look at, or which ones are closest to the root of the problem that I need to troubleshoot? A number of companies have worked on that.
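
The capacity run-out use of linear regression described above can be sketched in a few lines. This is an illustrative example only: the monthly peak values and the 100 Gbps capacity are made up, and a real planning model would also account for seasonality and noise.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def months_until_full(monthly_peaks_gbps, capacity_gbps):
    """Months from the latest sample until the fitted trend reaches capacity."""
    xs = list(range(len(monthly_peaks_gbps)))
    slope, intercept = fit_line(xs, monthly_peaks_gbps)
    if slope <= 0:
        return None  # traffic is flat or shrinking, so no run-out is predicted
    month_at_capacity = (capacity_gbps - intercept) / slope
    return max(0.0, month_at_capacity - xs[-1])


# Hypothetical peaks growing by 2 Gbps per month on a 100 Gbps link.
monthly_peaks = [40.0, 42.0, 44.0, 46.0, 48.0, 50.0]
print(months_until_full(monthly_peaks, 100.0))
```

With these numbers the fitted growth is 2 Gbps per month, so the link is predicted to fill about 25 months after the last sample, which is the kind of figure a planning team would feed into next year's budget.
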
I think Moogsoft most famously worked on that, accepting data from all sorts of different monitoring and observability platforms, then pairing that data and trying to come to conclusions about how the alerts fit together. I think that holds a lot of promise, and I think some folks were successful with Moogsoft, but, man, that's a tough deal, right? Because if you miss one critical alarm, you're in trouble.

Yeah, I know. What I've heard is that it's actually a really, really hard problem, and you need so much data for your models to learn what is important and what is not. I thought it was only the hyperscalers who actually had enough data to train their models to be useful. Is that rightly perceived, do you think?

Yeah, I think that's more right than wrong. It's not like no one is doing that specific use case. But folks will try to extend that use case: if I can see all my alarms and all my data, and I can start to pick out which alarm is closest to the root of the problem, can I do root cause analysis? Root cause analysis is almost the holy grail of network observability. Everyone dreams of it, no one has it, and as far as I've seen, no one is even close.

It was funny, because maybe three or four years ago, as AI and ML were first starting to take the industry by storm in terms of messaging and hype, if not actual practical value, I was working on building an NMS, and I was constantly being asked what we were doing about this: by my boss, by the marketing department, and by every analyst I spoke to. What are you doing for AI and ML?
And we had some brilliant architects on it, and we would brainstorm and try different things, but at the end of the day we were trying to make something valuable, and we hadn't found the use case for that yet. Interestingly, the folks who were asking me the least about it were customers. But maybe after the fifth or sixth analyst asked me about it, I was in person with this gentleman, and we had a good relationship with him. He asked me the question again, and I said, you know, we're trying to create something actually valuable here. We've got some effort going where we're looking, discovering, and prototyping, but we're not really finding something great, and we're not going to release something and talk about it unless we have something of practical value. And I went a step further, because I was getting frustrated. I said, in fact, I have never seen a single instance of someone getting real value out of AI and ML for network observability in production. Have you? And I was flabbergasted that he said no. This is a guy whose full-time job is to go and talk to vendors, customers, and other analysts, to talk to all of these people and understand the industry. He's talking nearly nonstop about AI and ML, and he had never spoken to someone who was getting practical value in production. So I think that really speaks to the degree of hype that was there four years ago, and to part of what's going on now, with this coming back into focus with all the improvements and breakthroughs with ChatGPT and other LLMs.

Yeah. So how is it different today? Why is the hype starting again? Because there must be some value now, right?

Yeah. Well, when the industry was working on it before, we didn't have ChatGPT and LLMs like it. There has been an incredible advancement there.
And so the question is, does this become a new tool that could solve problems we weren't able to solve before?

But how is that? Because with an LLM, it feels like it looks at a lot of text, and then it can guess what comes next, and it creates text that way. That's how it works right now. How does it work in the context of network observability? I'm not quite getting that.

Yeah. There are a couple of companies trying different approaches. A lot of what LLMs do is content generation, as we've seen. Fundamentally, LLMs are about understanding language: input, whether that is text or speech, and then output in the form of text or, again, speech. We've been working at Kentik with LLMs for a little while now and have come up with some pretty interesting uses. So I'll share my screen, and we can take a look at something real here.

Okay. So we have this feature called query assistant. What this allows you to do is take all the NMS data that we have about your network, write a question here in natural language, and start to inspect that data. For example, I could say "interfaces with highest bandwidth," and in the background the large language model translates that into the proper query form. You see we have this query builder on the right-hand side. You've always had access to this as a user, but there are a lot of fields here, and there's a lot of ability to build complex filters, even regex filters, on all sorts of different things. And everyone's collecting quite a bit of data nowadays, so you have all of these different categories, and then within each category you have all sorts of different metrics.
So, to be able to just jump into a query, what the large language model is doing, precisely, is translating this natural text, how I would type a question, into a query in the correct format for Kentik NMS.

Right. So you don't have to sit and sift through all of the choices that you might have. And I see hundreds of choices here.

Yeah, exactly. So we asked for interfaces with highest bandwidth. It's actually showing me max, which I don't like. I would use average, so I'm going to switch to average here.

Oh, you would use average? Being an old planner, I would use the ninety-fifth percentile, because you can't really avoid that. I mean, you can, but it depends on what kind of quality you want to deliver for your customers.

Yeah, that's fair. I guess I'm more of an enterprise engineer. I've always heard p95 is really popular with service providers. I can just switch to p95.

It's the holy grail.

It's the holy grail. The other thing I'm noticing is that this is bit rate. If we're worried about run-out or something like that, we would want to be looking at utilization.

Yeah. There's a lot of correlation you have to do in your head there to figure out whether you're in danger or not.

Yeah, that's right. Okay. It looks like we've got a couple of interfaces that are reporting the wrong capacity. We'll have to adjust those. But you can see we've pulled up the p95 in and out utilization. These are the top utilizations across this test network that we have. And we've got the device and the interface name. Now, I don't actually know what all of these interfaces are for. Well, I know what this one is, but I don't know what all of them are for. I need descriptions for that. So maybe I want to add here "include interface descriptions."
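
The max, average, and p95 aggregations and the step from bit rate to utilization discussed above can be sketched as follows. This is a minimal illustration that assumes the nearest-rank percentile definition and synthetic samples; the product's own percentile method is not stated in the session.

```python
import math


def percentile_nearest_rank(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of values at or below it."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def utilization_pct(bitrate_bps, capacity_bps):
    """Express a bit rate as a percentage of the interface capacity."""
    return 100.0 * bitrate_bps / capacity_bps


# Synthetic bit-rate samples for one interface: 10 Mbps up to 1 Gbps.
samples_bps = [i * 10_000_000 for i in range(1, 101)]
capacity_bps = 1_000_000_000  # assumed 1 Gbps interface

p95_bps = percentile_nearest_rank(samples_bps, 95)
avg_bps = sum(samples_bps) / len(samples_bps)

print(utilization_pct(max(samples_bps), capacity_bps))  # max-based view
print(utilization_pct(avg_bps, capacity_bps))           # average-based view
print(utilization_pct(p95_bps, capacity_bps))           # p95-based view
```

The utilization figure is only as good as the reported capacity, which is why the interfaces reporting the wrong capacity in the demo need adjusting before their numbers can be trusted.
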
So it's a really easy way to interact with your network, and it's been fun for us to observe how users are using this. I would imagine most of our audience has tried ChatGPT at some time, and so knows that pretty quickly you get into this back and forth and start thinking about things a little bit differently, and maybe ask questions that you wouldn't normally ask your NMS. Maybe you wouldn't even normally ask your peer. And we've seen similar things as we observe people using this. It's a super interesting way to have a more natural interaction with your network data.

That's really cool. So what is the data that we're looking at right now? Because Kentik has been mostly flow, but this is different. This is our NMS. How did this come about? What had to be done to get to the point where we can do this?

Yeah. So there are a couple of things going on. This data set is part of our new network monitoring system tool, NMS. We launched that at the end of January. So we have a whole bunch of additional data, traditional NMS-type data, that we can now correlate with our flow data, and we can do these fine-grained exploratory queries over that same data using the query builder or this query assistant. That's really why we got into it: the users of our flow data wanted to have that same exploratory capability with their NMS data and to be able to correlate those two things. So that's what we've tried to do here with NMS. And I think one of the fundamentals is how we're handling it on the data side. That's a big part of what makes it possible to do the query builder, much less the LLM stuff. So maybe we can take a look at that architecture.
I think that helps explain it, for sure.

Let me go back a couple of slides here. So, basically, how NMS works is that you deploy a small software agent that does the data collection. We call that thing Ranger. Ranger talks with Kentik SaaS to understand what devices it needs to go and collect data from. And then we have this thing called a collection profile. This is just a little plain-text file where you control and configure what protocol is used for data collection, how that data is collected, and, importantly, how that data is normalized. And you can do that across a variety of different protocols. We currently have SNMP and streaming telemetry working, which is super cool. We've really designed it to be protocol agnostic, and we'll be working on API next. Using these collection profiles, you can normalize this data. As you send it up to Kentik ingest, and we use the pretty common Influx line protocol here, it's normalized into an OpenConfig-inspired data model. And you can do that, of course, with multiple collectors. Since we're using Influx line protocol, you can actually accept data from gNMIc, Telegraf, and other collectors that are really powerful. If you've got good data coming in through them, you may as well use that as well. I'm just noticing some disconnected lines and some other oddities in the presentation software, but in any case: as that data is put into Kentik ingest, it's stored in that normalized model. And that model is really what you saw on the right-hand side when I was looking at measurements and metrics.
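
As a rough illustration of the normalization step described above, the sketch below maps a metric name from two different sources onto one normalized field and renders it as an Influx line protocol record. The mapping table, the `interfaces` measurement, and the tag names are hypothetical choices for this example; they are not Kentik's collection profile format or its actual data model.

```python
# Hypothetical mapping from (source, source-specific name) to a normalized field.
NORMALIZED_FIELDS = {
    ("snmp", "ifHCInOctets"): "in-octets",
    ("snmp", "ifHCOutOctets"): "out-octets",
    ("streaming", "/interfaces/interface/state/counters/in-octets"): "in-octets",
    ("streaming", "/interfaces/interface/state/counters/out-octets"): "out-octets",
}


def escape_tag(value):
    """Escape the characters line protocol treats as special in tag values."""
    for ch in (",", "=", " "):
        value = value.replace(ch, "\\" + ch)
    return value


def to_line_protocol(source, raw_name, value, device, interface, ts_ns):
    """Render one sample as: measurement,tags fields timestamp."""
    field = NORMALIZED_FIELDS[(source, raw_name)]
    tags = f"device={escape_tag(device)},interface={escape_tag(interface)}"
    return f"interfaces,{tags} {field}={int(value)}i {ts_ns}"


snmp_line = to_line_protocol(
    "snmp", "ifHCInOctets", 1234, "edge-01", "Ethernet1/1", 1700000000000000000
)
streaming_line = to_line_protocol(
    "streaming", "/interfaces/interface/state/counters/in-octets",
    1234, "edge-01", "Ethernet1/1", 1700000000000000000,
)
print(snmp_line)
print(snmp_line == streaming_line)
```

Once both sources produce the same record, everything downstream (queries, dashboards, alerts) can ignore which protocol delivered the sample.
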
So at that point, it doesn't matter whether the data came from SNMP or streaming telemetry or one of these collectors over here or anywhere else. All of that data is fed into Metrics Explorer, where you do your query. It's fed into your dashboards and into your alerts. So having said that... yeah, go ahead.

So you're actually saying that we are ingesting and combining data from different sources, like SNMP and streaming telemetry? Because whenever I talk to people about this, they've always said, you know, we can't do streaming telemetry because only five of our devices are able to do it. So it's not really worth it, and we'd have to look in different tools. So do we fix that somehow?

Yeah. Streaming telemetry is a really interesting one, because there's so much promise from the protocol, and there's so much excitement in small niche groups, but most devices don't support it. That's the practical reality: most of our devices don't support it. And as we're slowly upgrading our networks, maybe five percent of our devices support it. I think that's because of where streaming telemetry came from: these really large hyperscalers telling the network vendors, hey, SNMP is not going to cut it for the granularity and the accuracy that we want, so you must support streaming telemetry, and maybe providing some strong recommendations as to how to support it. All of that ended up with streaming telemetry being designed and built for a hyperscaler environment, where the idea was to do a hard cutover from SNMP to streaming telemetry. But the number of service providers or even enterprises that works for, where all their gear supports it and they could literally turn off SNMP, is fast approaching zero. There is almost no one who can do that.
So I think what's left to be done with streaming telemetry is to make it approachable for normal networks, that is to say, not just the top five largest networks in the whole world.

Yeah. And remind me again, please, what is the strength of streaming telemetry? Because we've lived with SNMP for so many years. Why is it worth it?

Yeah. You hear this phrase, "SNMP is dead," which I love, because it speaks to the aspiration. We all know the challenges with SNMP, but I think it might be before instead. But we're all running more SNMP than streaming telemetry by, like, fiftyfold. So it's very aspirational.

There are a number of improvements with streaming telemetry. The first is that SNMP is fundamentally a poll architecture. The SNMP management system will send a request, an SNMP get, to request a piece of data from a piece of network infrastructure. It says, hey, mister router, what's your interface out-bits counter on this interface? The router will receive that, realize it needs to prepare a response, prepare that response, and send it over. It's interesting: SNMP was invented in, like, nineteen eighty-eight, and nowadays we're all using SNMP in basically the same way, which is that we do that once every five minutes, or one minute, or ten minutes, and we draw a graph with it. Because we need the graphs.

That's, like, Cacti or MRTG, RRDtool. There are some long-standing tools.

Yeah. So if the goal is to have this data constantly flowing, it makes zero sense to do that all interrupt driven, to ask the router that question over and over and over again, every minute, until the end of time.
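
The "poll a counter, then draw a graph" pattern described above relies on turning two successive counter readings into a rate. Here is a minimal sketch of that arithmetic, assuming a 64-bit octet counter such as `ifHCOutOctets`; the sample values are invented, and real pollers also have to tell a counter wrap apart from a device reboot, which this sketch does not attempt.

```python
COUNTER64_MODULUS = 2 ** 64


def bits_per_second(prev_octets, curr_octets, interval_s):
    """Average bit rate between two polls of a 64-bit octet counter.

    The modulo keeps the delta correct if the counter wrapped once between polls.
    """
    delta_octets = (curr_octets - prev_octets) % COUNTER64_MODULUS
    return delta_octets * 8 / interval_s


# Two polls five minutes (300 s) apart.
print(bits_per_second(1_000_000_000, 4_750_000_000, 300))

# The same calculation across a counter wrap, polled one minute apart.
print(bits_per_second(COUNTER64_MODULUS - 1000, 500, 60))
```

Each point on the graph is an average over the whole polling interval, so a five-minute poll cannot show a thirty-second spike; it only shows that spike smeared across five minutes.
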
And so how streaming telemetry changes that is that the management system subscribes. It says, hey, I want this sort of data; just send it to me as you have it. And that does two things. It's lower overhead, because you're not constantly sending the same question over and over; the question is understood by the router. And the router can also schedule its own data sending. It knows that this request is coming up, and it can fit it into its work along with all the other stuff it's doing. A router's main job is not to talk to the monitoring or management system; it's to send traffic. We've always struggled with that: routers don't have the largest CPUs, and monitoring is not their priority. So anything we can do to make that overhead lower is better. With streaming telemetry, you subscribe, and then the router sends those data points at whatever interval you've subscribed at, until the end of time or until whatever point you specify. This makes streaming telemetry way more efficient than SNMP. You see a lot of people running SNMP at five-minute, or sometimes one-minute, sometimes ten-minute polling intervals. Ten minutes is a long time. Because streaming telemetry is so much more efficient, we can collect that data much more frequently without overloading the device. So collecting at one minute makes sense even for your largest chassis, and collecting at thirty seconds or ten seconds makes sense for a lot of data. For specialty data, where you say, hey, I want to know right away when this metric passes some sort of threshold, you can collect every two seconds. You can collect sub-second. So the granularity is a whole different thing.

That's a lot of data to ingest. Is that even possible with the limitations on space and databases that we have?

Yeah.
I think we've gotten a lot better, especially as we move this stuff to the cloud. That's the approach we've taken on the Kentik side with this ingest. In a lot of ways, streaming telemetry is similar to flow data; it's just being applied to metrics and to data sources that would traditionally be SNMP. We've been collecting flow data for a long time. At Kentik, flow data streams in, right? We get it second by second, and it is typically a large amount of data, especially when you consider that it's SaaS, so we collect all of our customers' flow at the same time. That's how we've been able to make sure we have that sort of scale to support streaming telemetry for NMS.

Okay. Cool.

I did want to give a bit of a demonstration of streaming telemetry, and maybe talk about one other benefit of what we're talking about. With that more frequent data collection, there are two things. One is that when you're analyzing a graph in history, you have much better granularity, and that's a big deal. The other is when you're fighting a problem in real time. You can always go to the device and ask the device what's happening, but a lot of folks look at monitoring this way: it goes red in monitoring, and it goes green in monitoring. Monitoring is the authority on whether my network is okay or not. So folks will make a change to attempt to solve a problem and watch really closely to see when monitoring goes green. That phrase, "goes green," gives me a calming feeling in my chest from all my time troubleshooting outages. You want monitoring to go green, right?

Oh, yeah. I remember doing maintenance, and we would just sit there and wait for the MRTG graph to get updated. It's like, this thing I just did, did it work? Did it not work?

Yeah.
I did some research about this recently. The most common polling interval people are using for their NMS is still five minutes, which is pretty slow. Five minutes means you will often have to wait five to ten minutes, one or two polling cycles, to get an answer you can be confident about. And five to ten minutes is like an eternity in an outage. We can take a look at what it looks like with streaming telemetry here. So we've gone to this device. This is in our lab, and it's running streaming telemetry, as you see there. We've got this chart showing the history. We see the CPU went up. I created this problem of high CPU on this device around 6:30, 6:40. And then on the right-hand side we have our high-granularity view. This data is every two seconds, and it's coming in live: 7:30:03, 7:30:05, and we're just pulling in new data points. So imagine that I'm working an outage. I've seen this huge uptick in CPU. In fact, this may be a two-core box where one of the cores is completely utilized, and that's not good. I've jumped on the device, figured out what processes are using CPU, and come up with a hypothesis about why that CPU is high. So I'm going to implement a potential fix and then try to figure out whether that worked or not. I'll go into my terminal window over here and implement that fix now, and we'll see how long it takes for that data to make it all the way to the browser. I already see a decline here, down to thirty-nine percent. Down to two percent. So my fix did work, right? That's something that would have taken five or ten minutes before. I really used to feel that the NMS would be, like, five or ten minutes delayed.
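
The "five to ten minutes, one or two polling cycles" figure can be reproduced with simple arithmetic. The sketch below assumes an operator wants two consecutive post-change samples before trusting the result, and it ignores transport and processing latency; both are assumptions made for illustration.

```python
def confirmation_window_s(interval_s, samples_needed=2):
    """Best- and worst-case wait (seconds) to see N samples after a change.

    Best case: the change lands just before a sample is taken.
    Worst case: the change lands just after a sample was taken.
    """
    best = interval_s * (samples_needed - 1)
    worst = interval_s * samples_needed
    return best, worst


print(confirmation_window_s(300))  # five-minute SNMP polling
print(confirmation_window_s(2))    # two-second streaming telemetry
```

With five-minute polling the wait is 300 to 600 seconds, which matches the five to ten minutes quoted; at a two-second interval the same confirmation takes 2 to 4 seconds.
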
Like, there's quite a gap between my NMS and my network infrastructure. But with streaming telemetry, your infrastructure can feel much more directly connected to your NMS.

Yeah. That's pretty cool. I like that. And this is all from our NMS tool?

Yeah, that's right. Because we've implemented that architecture, we've normalized all of the data. We now have it available in Metrics Explorer, which is the query builder you saw earlier, where you can build your own query about all of that data. And all of the data then becomes accessible to the query assistant, and it doesn't matter whether it's coming in from streaming telemetry or SNMP. These are the ways we're trying to push network monitoring and network observability forward. I think in twenty twenty-four, waiting five or ten minutes for your network monitoring system to catch up with your infrastructure is crazy. That's an unacceptable outage. So we need to figure out how to do better, and these are some of the tools we're using at Kentik to try to do that.

Yeah. I know that we are working as well on flow, making it easier to ask questions about the network traffic, maybe combining it as well with the state data from NMS. So we want to combine every kind of data we have.

Yep.

So I would like to show you what we've been working on for that one. I have to do a screen share for this. I can flip forward all I want on my own screen here, but that's not going to be helpful for anybody. So let me just share my screen, and we'll jump into the Kentik tool. We have a preview in the tool for some selected customers these days of something that we call Journeys.
And here we have done the same thing that we've done in Metrics Explorer, but we can hit any of the data sources that we have in the tool with this. So I'm going to create a new journey here. You have to do that. There you go.

I know on the query assistant side we have to apply that to each one of our query engines, because each query form is different. The way you query metric data is a little bit different from the way you query flow data. With flow, directionality is an essential part of every query that you run, whereas with NMS data, directionality is a much less common and less important attribute. I know we've been playing around with applying query assistant to Data Explorer and other query engines, but what would be really interesting is to compare all of these datasets together, right?

It is. So now I'm going to show you how Journeys works as well, because Journeys is not just like query assistant, where you had to go in and redo the thing. One thing that I really like about this: say I'm coming into work in the morning, and I want to see what's going on in my network. So I start with the top source ASNs that are sending traffic. And then, oh, I remember, wait, I only want to look at what's going over my external network boundary. So I'm just going to add that part to the query, in the same way that I've been playing with ChatGPT.

Uh-huh. Did you notice that with query assistant I was having to append? Because in query assistant we just take whatever query you typed, and that's what we run. But it looks like this has some sense of the history of the conversation.

Yeah. It remembers the results, and then it adds the next query to that one, if you use keywords like "add" or "remove." You want to make sure that it understands you're talking about what you did before.
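
The difference between running each typed query on its own and keeping conversation history can be sketched as a small state object that each turn refines. Everything here is hypothetical: the `QueryState` shape, the action names, and the dimension and filter names are invented for illustration, and the sketch assumes the language model has already turned the user's text into a structured action. It is not how Journeys is implemented.

```python
from dataclasses import dataclass, field


@dataclass
class QueryState:
    """Accumulated query: which dimensions to group by and which filters apply."""
    dimensions: list = field(default_factory=list)
    filters: dict = field(default_factory=dict)


def apply_turn(state, action, dimensions=(), filters=None):
    """Return a new state: 'new' starts over, 'add' and 'remove' refine history."""
    filters = filters or {}
    if action == "new":
        return QueryState(list(dimensions), dict(filters))
    dims = list(state.dimensions)
    flt = dict(state.filters)
    if action == "add":
        dims += [d for d in dimensions if d not in dims]
        flt.update(filters)
    elif action == "remove":
        dims = [d for d in dims if d not in dimensions]
        for key in filters:
            flt.pop(key, None)
    else:
        raise ValueError(f"unknown action: {action}")
    return QueryState(dims, flt)


# "top source ASNs", then "add ... external boundary", then "add interface type ..."
state = apply_turn(QueryState(), "new", ["src_as"])
state = apply_turn(state, "add", filters={"network_boundary": "external"})
state = apply_turn(state, "add", ["interface_type", "next_hop_as"])
print(state)
```

A stateless assistant would behave like calling `apply_turn` with `"new"` every time, which is why each question there has to restate the whole query.
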
So here, now I have my external traffic, and I see a lot of traffic coming in, but I don't know enough yet. I want to know where it comes in, and what kind of interfaces it came in on. I need to spell here: type. And who was sending it to me? Who is the next-hop neighbor? I can add all of this information to the query. And you see, first of all, the language model is thinking about what this even means, and now it knows what it means, and now it's pulling out the data for me. You can see I've added the dimensions that I wanted to look at, and we have the original query still going here. Oh, and that's not a very good visual, right? Let's have a visual that we can get some information out of. I love Sankey diagrams. How about you?

Yeah. Anything where you have a source and a destination, Sankeys are great, right?

Yeah. So it comes out in the table, and I could do things with that one, but this is really tough.

I see there's also "View in Data Explorer." So you could carry this back into the full manual query builder if you want. Is that right?

That's right. You could jump back into the usual tool that we have. I did that yesterday, actually, when I was preparing for this, and I got really frustrated, because I had already gotten used to using natural language, and now I had to sit and work in the usual query tool. And why is it taking so long? I'm sorry.

Oh, I've seen that last query come through. And you mentioned that it translates "show as Sankey" into a query and then it runs the query. It looks like where it's getting stuck is translating it, with the large language model, into the query.

It really does. That's a little bit annoying, because that was not supposed to happen.

Yeah.
I mean, that's been my experience with... What? Sorry? That's been my experience with ChatGPT. It's like it can do so many amazing things, but it definitely stumbles at times for sure. And it's hard for me to predict when it will stumble too. Yeah. No. I agree. But it looks like now it finally understood what we wanted. And, oh, it's running the query now. So now we have a beautiful Sankey here, and now we notice, oh, I really don't wanna look at all of that customer traffic, because I'm happy about that. They pay me, I'm happy. So I'm just gonna remove all of that traffic. Connectivity customer. I know that it does take... oh, that's interesting. We'll see if it understands. I have a typo here. Yeah. It'll be interesting to see what it does with this. Right? Because it's, oh, yeah, translating it into some query. It did understand that, oh, I must have meant source connectivity type. Yeah. So we have a little ability here to see what filters are actually added to the query. So now I have a great overview of my external boundary traffic. And I noticed, oh, I have Google here. I know that I just negotiated last year that I would have a direct peering with Google. So why is the traffic not flowing on that one? Why does that not show up on my diagram? And I can't really remember Google's ASN right now. So I'm gonna take a chance. Show session state for neighbor with peer AS Google ASN. Yeah. And the ASN is 15169. I knew that. And the session was down. So here's the reason why the traffic was not running. Seems Active. Yeah. Yeah. So notice here, we jumped right over to the Metrics Explorer, and we were looking into the NMS tool instead of looking at the flow data. Yeah.
I was curious here, as that protocols BGP neighbors data is definitely a Metrics Explorer data location. Oh, and View in Metrics Explorer. There it is. Yeah. Yeah. Yes. So how did it do all that? How did it translate "Google ASN" into AS 15169? It also found, I guess, the device that that neighborship was on, PE 31. And then, oh, I guess the session state. It's just Active. Yeah? Yeah. So here, we've been training the language model on examples, actually, examples that the team has been putting into the tool. The tool is in the works right now. I wouldn't say that it's perfect yet, because it needs a lot of training, but imagine that with all the training that we will get from ourselves and our customers over time, we can refine this into a tool that eventually will be super helpful for doing analysis. And then, you know, the final thing, if I like this, I can save it, and then we can look at it some other day. Just run those queries again on another day. That's interesting. Yeah. I mean, I think there is something here when it comes to applying LLMs to network observability. You know, there's one thing that is really consistent in networking, particularly amongst service providers: the networks get larger and larger and larger, and you expect less and less and less downtime, but the team sizes are still tiny. You get these tiny teams running these giant complex networks. And so something that helps you do this analytics and maybe have a little bit more natural interaction, where you don't have to remember all of your field names, all of your variables, all the different locations you have to click on.
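The question of how "Google ASN" became AS 15169 is not answered in the session beyond "training on examples". Purely as an illustration, here is a sketch of one way a prompt like the one above could be turned into a structured NMS query. The lookup table, the function name, and the query field names are assumptions made up for this example; only the ASN itself (15169 is Google's public AS number) comes from the demo.

```python
import re

# Assumption: a tiny name-to-ASN table standing in for whatever resolution
# the real tool performs. AS 15169 is the value shown in the demo.
KNOWN_ASNS = {"google": 15169}


def build_bgp_session_query(prompt: str) -> dict:
    """Turn 'show session state ... peer AS <name or number>' into a query dict."""
    match = re.search(r"peer\s+as\s+(\w+)", prompt.lower())
    if match is None:
        raise ValueError("no 'peer AS <name or number>' found in prompt")
    token = match.group(1)
    if token.isdigit():
        asn = int(token)
    elif token in KNOWN_ASNS:
        asn = KNOWN_ASNS[token]
    else:
        raise ValueError(f"unknown network name: {token}")
    # Hypothetical query shape; the field names are illustrative only.
    return {
        "source": "metrics",
        "measurement": "bgp_neighbors",
        "metric": "session_state",
        "filters": {"peer_as": asn},
    }


query = build_bgp_session_query(
    "Show session state for neighbor with peer AS Google ASN"
)
print(query)
```

The point of the sketch is the two-step shape: resolve the named entity first, then emit a query against the metrics data rather than the flow data.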
I mean, obviously, you have to remember a lot of that stuff in your job, but the less of that you have to remember the better, and just the speed of jumping into a query and iterating on it is super interesting to help small teams sort of accelerate what they're doing. I agree totally, because, as I say, there are more and more important services. I remember when we moved, like, voice and TV onto the IP network in one of my previous places many years ago. Man, that was a completely different world that the networking team was stepping into. And, you know, the root cause analysis reports were not very fun to write at all. Yeah. I was enterprise side. So we were slower, I think, than you folks on, like, voice over IP, but I remember the scary thing was always 911 service. Right? Oh, yeah. Yeah. Because, you know, people are used to that one. That one must work. Right? Yeah. Absolutely. Yeah. Alright. I mean, I just wanted to show this. And I think we're getting to the Q&A now. Right? We are indeed. So we've had a few questions come through. Chris, Nina, thanks for that. So yeah, as you say, correct, that leaves enough time for Q&A. If you do have any other questions, guys, definitely feel free to use the chat box and you can add in some more while we're going through these first ones. So one I think might be quite a good start for this section. It's quite a general question. What... Oh, excuse me. We'll just jump around a little bit there. Where's my question gone? What would you say makes Kentik's approach to AI different or unique? Yeah. I mean, we're playing with LLMs. Playing with LLMs is not unique, but playing with LLMs in the space of network observability, there's not a lot of folks doing that yet. We're on the cutting edge of applying that technology. I think many more will jump in the pool soon and play with that.
But I would say that it's really about combining the breadth of data we have on the flow and now the NMS side in our scaled SaaS solution, rather than something on prem. SaaS just tends to be much better for very large scale. And then applying new complex queries to that very large scale dataset. So that's the approach that we've taken, and, you know, we'll see where that goes. But, to my knowledge, there's not another tool in the network observability space where you can do these sort of in-depth queries and iteration back and forth on network data. Nina, anything you'd add? No. Okay. Wonderful. Well, let's move on to our next question then, which is around the training of the model: how do you ensure that the data training the Journeys AI is reliable and accurate? Yeah. It's a tough question. You know, if you put garbage in, you're gonna get garbage out. So we did sort of seed the system with a bunch of queries from our own engineering teams, and that certainly helped get us going. And then as users use it, they put in their own queries. You may have seen the, you know, thumbs up, thumbs down, which basically starts using that data for training. Now, before we actually use that data to rev a new learning process and create a new resulting set of weights, essentially a new model to then share out via our application, we review all of those queries. So there's user input, but that is assessed by our team prior to learning and then changing the model weights. Okay. And following on from that, this is another question that's come through. What were some of the challenges in ensuring the query builder understands natural language inputs? Yeah. And I think some of the challenges, in particular in Journeys, are to hit the right data source afterwards. Right?
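The review step described in that answer (user feedback is collected, but a person on the team assesses it before it changes the model) can be sketched as a simple gate in front of the training set. This is an assumption-laden illustration, not Kentik's pipeline: the `Feedback` record, its field names, and the rule that only thumbs-up examples approved by a reviewer are kept are all hypothetical, and the session does not say how thumbs-down feedback is used.

```python
from dataclasses import dataclass


@dataclass
class Feedback:
    """Hypothetical record of one rated prompt/query pair."""
    prompt: str
    generated_query: str
    thumbs_up: bool
    reviewed_ok: bool = False  # set by a human reviewer, never by the end user


def training_examples(feedback_log):
    """Keep only pairs that users liked AND a reviewer has approved."""
    return [
        (item.prompt, item.generated_query)
        for item in feedback_log
        if item.thumbs_up and item.reviewed_ok
    ]


feedback_log = [
    Feedback("top source ASNs", "dims=[src_as]", thumbs_up=True, reviewed_ok=True),
    Feedback("show cpu", "dims=[dst_as]", thumbs_up=True, reviewed_ok=False),
    Feedback("bgp state", "dims=[src_port]", thumbs_up=False),
]
approved = training_examples(feedback_log)
print(approved)
```

The second entry shows why the gate matters: a user can approve a wrong translation, and without review that pair would be learned as correct.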
So what are the keywords that we need to learn to make sure that we hit either the Metrics Explorer or the Data Explorer or... Yeah. Holy moly, this was a long question. I was just reading the fuller version of it. I think you've answered the first bit, and then it's maybe just pasted twice. Sorry. Just ignore the second. Yeah. Yeah. Yeah. No problem. Yeah. I was just thinking through all of that. There's a couple of references. Okay. So maybe the two biggest parts of the answer are: we did try multiple models, GPT-3 and then 3.5. We tried Turbo, GPT-4. We're using the one that was most effective for us. We have trained on a lot of data. And as we were doing that training, we also would do sessions where new users would try it, and we would observe what was working and what wasn't. And after you've seen ten users spend half an hour typing in, you can start to get a sense of: it's failing on this type of question, so we need to do more training with this type of question. It's failing when it comes to directions. It's failing when it comes to referencing sites, which is data that the user adds to their NMS and their flow tool. And so we're like, oh, we need not just the data that we have, but also the labels that folks put on their devices, as people wanna use those labels. So all of that, sort of observing and figuring out what the big groups of questions were that weren't working, that was a big part of it for us. As far as keywords go, I would say by and large we have avoided using keywords. There's one specific scenario in which we most frequently use keywords, and that's determining whether the query is about NMS or about flow data.
Sometimes we get that wrong. So the model will attempt to do that automatically, purely with machine learning, but if it gets it wrong, you can put in "flow" or "traffic" or, you know, one of these words, which will tell it to use the flow data for sure or not. I think that's most of that question. Yeah. I think you covered it. Yeah. I think so. So, yeah, thank you to whoever sent that in for a very, very thorough question. I've got a few more here that I'd like to run through, and guys, there are still about ten minutes. If you have any more, please feel free to send them through. But one question here is: what polling intervals are supported? So, whoever wants to pick that one up. Yeah. On the NMS side, the default polling interval is one minute. I think that's the right place to be in 2024 for a default. But for streaming telemetry data, where it's more efficient, we'll do thirty seconds for most data points. We do two seconds for CPU, for, like, the live data points that I showed you in the demo, and then we can go down to one per second for special use cases. Okay. Wonderful. And then another, slightly simpler one: is there an API? So API is something that we've historically really prioritized at Kentik, but we don't yet have it for NMS. You know, we released NMS for the very first time at the end of January. We are actively building the API. So we're coding on that right now, but we don't yet have it. Okay. And the last one that I can see, and again, a reminder: if there are any more questions, definitely do send them through. Is this SaaS or on prem? Yeah. We're SaaS first. We have been so for the last ten years, since the founding of the company. We accept flow on SaaS, and this NMS is also SaaS first. We do have a handful of very large customers who require on prem, and sometimes we can make that work if the scale is sufficient. But we're predominantly SaaS. Okay.
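The routing behavior described at the start of that answer (a learned guess about whether a prompt is about NMS or flow data, with words like "flow" or "traffic" forcing the flow path) can be sketched as follows. This is only an illustration under stated assumptions: the `route` function, the keyword set, and the stand-in classifier are hypothetical, and the real model's behavior is not shown in the session.

```python
import re

# Assumption: the two override words named in the answer above.
FLOW_KEYWORDS = {"flow", "traffic"}


def route(prompt: str, classifier) -> str:
    """Pick a data source: explicit keywords win, otherwise ask the classifier."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    if words & FLOW_KEYWORDS:
        return "flow"
    return classifier(prompt)


def always_nms(prompt: str) -> str:
    """Stand-in for the learned classifier; a real one would inspect the prompt."""
    return "nms"


print(route("show traffic by source ASN", always_nms))
print(route("show CPU for router PE 31", always_nms))
```

Checking the keywords before calling the classifier gives the user a predictable escape hatch when the automatic guess is wrong, which is the scenario described above.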
Brilliant. Well, I'm not seeing any more questions come through. So I will follow up with a thank you slide. And also, yeah, thank you, Chris and Nina, for joining us today and providing some great insights. For everyone watching, whether live or on demand, please don't hesitate to reach out to either of them if you are interested in finding out some more information. And, yeah, thank you everyone for joining and for watching. We hope this has been useful, and finally, last but not least from me, don't forget to sign up and subscribe to Capacity Media. We've got a bunch more webinars like this coming up over the rest of the year and some really, really interesting content on AI and network operations as well. So, yeah, thanks very much for joining, guys, and hopefully we'll see everyone again very soon.

Kentik networking experts Chris O’Brien and Nina Bargisen join Capacity Media’s Jack Allen to explore the evolving role of AI in network monitoring. They explore historical applications of AI and machine learning in network intelligence, the integration of large language models for enhanced troubleshooting, and the significance of diverse network telemetry data sources.

The session includes a live demonstration of the AI-assisted query features of Kentik NMS (Network Monitoring System) and highlights the advantages of streaming telemetry over SNMP. There’s also an insightful Q&A session at the end. This webinar replay is a must-watch for anyone interested in the intersection of AI technology and network management.

Learn more about Kentik NMS, the next-generation network monitoring system, and the Top 10 Use Cases for Network Intelligence.

Key takeaways from this webinar include:

- AI and machine learning have been explored in network observability for over 15 years, aiming to enhance monitoring efficiency and accuracy.

- Large Language Models (LLMs) like ChatGPT offer new possibilities for troubleshooting and interacting with network data through natural language processing.

- Streaming telemetry is highlighted for its efficiency and granularity in data collection, compared to traditional SNMP methods.

- Kentik’s NMS and Query Assistant AI features demonstrate practical applications of AI in network management, emphasizing real-time data analysis and comprehensive network visibility.

- Challenges in applying AI to network intelligence include ensuring data accuracy, model training, and integrating AI insights into actionable strategies.
