NetFlow Collector

Understanding the inner workings of a network can be a complex task. One essential tool for any network administrator is the NetFlow Collector. It plays a vital role in managing and analyzing network flow data and helps ensure optimal network performance and effective capacity planning. This article explains what a NetFlow Collector is, its main functions and limitations, and modern approaches to implementing it.

What is a NetFlow Collector?

A NetFlow Collector is a network application that receives, processes, and stores NetFlow records. These records summarize network traffic flows and are exported from network devices such as routers and switches. NetFlow was developed by Cisco Systems. It is a protocol for collecting and exporting key statistics and other information about IP packet flows.
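Flow records arrive as fixed binary structures that a collector must decode. The sketch below decodes a NetFlow version 5 export packet with Python's `struct` module. A v5 packet is a 24-byte header followed by 48-byte flow records.

Version 5 is used here only because its layout is fixed. NetFlow v9 and IPFIX describe records with templates and need a different decoder. `FlowRecord` and `parse_v5` are hypothetical names, and the sketch keeps only a subset of the v5 fields.

```python
import socket
import struct
from dataclasses import dataclass

# Assumed NetFlow v5 layout, network byte order.
# Header: version, count, sys_uptime, unix_secs, unix_nsecs,
#         flow_sequence, engine_type, engine_id, sampling_interval (24 bytes).
V5_HEADER = struct.Struct("!HHIIIIBBH")
# Record: srcaddr, dstaddr, nexthop, input, output, dPkts, dOctets, first, last,
#         srcport, dstport, pad1, tcp_flags, prot, tos, src_as, dst_as,
#         src_mask, dst_mask, pad2 (48 bytes).
V5_RECORD = struct.Struct("!4s4s4sHHIIIIHHBBBBHHBBH")


@dataclass
class FlowRecord:
    """Hypothetical subset of v5 fields a collector might keep."""
    src_addr: str
    dst_addr: str
    src_port: int
    dst_port: int
    protocol: int
    packets: int
    octets: int


def parse_v5(packet: bytes) -> list[FlowRecord]:
    """Decode one NetFlow v5 datagram into FlowRecord objects."""
    version, count, *_ = V5_HEADER.unpack_from(packet, 0)
    if version != 5:
        raise ValueError(f"not a NetFlow v5 packet (version={version})")
    if len(packet) < V5_HEADER.size + count * V5_RECORD.size:
        raise ValueError("truncated NetFlow v5 packet")
    records = []
    for i in range(count):
        f = V5_RECORD.unpack_from(packet, V5_HEADER.size + i * V5_RECORD.size)
        records.append(FlowRecord(
            src_addr=socket.inet_ntoa(f[0]),
            dst_addr=socket.inet_ntoa(f[1]),
            src_port=f[9],
            dst_port=f[10],
            protocol=f[13],   # IP protocol number, e.g. 6 = TCP
            packets=f[5],     # dPkts
            octets=f[6],      # dOctets
        ))
    return records
```

This decoding step is what the collector's "unpacking binary flow data" function refers to.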

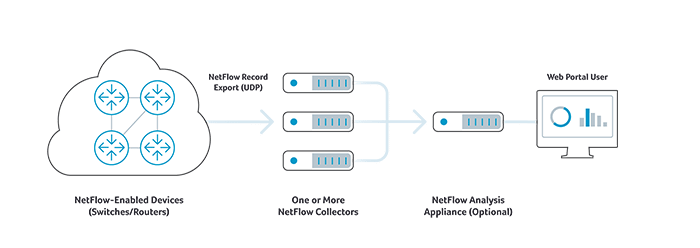

A NetFlow Collector is part of a three-component system used for NetFlow analysis that includes a NetFlow Exporter and a NetFlow Analyzer. While the NetFlow Exporter tracks and generates flow records, the NetFlow Collector receives and organizes these records. The NetFlow Analyzer then uses this data for network performance monitoring, troubleshooting, and capacity planning tasks.

The basic functions of these components are described below:

- NetFlow Exporter: a NetFlow-enabled router, switch, probe or host software agent that tracks key statistics and other information about IP packet flows and generates flow records that are encapsulated in UDP and sent to a flow collector. In cloud environments, NetFlow-like data can be exported in the form of VPC Flow Logs (also known as cloud flow logs).

- NetFlow Collector: an application responsible for receiving flow record packets, ingesting the data from the flow records, and pre-processing and storing flow records from one or more flow exporters.

- NetFlow Analyzer: a software application that provides tabular, graphical and other tools and visualizations to enable network operators and engineers to analyze flow data for various use cases, including network performance monitoring, troubleshooting, and capacity planning.
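The exporter-to-collector hop described above is plain UDP. The sketch below shows a minimal receiving side. It uses UDP port 2055 as its default, which is a commonly used NetFlow port but not a fixed standard (IPFIX's IANA-registered port is 4739). The helper names `open_collector_socket` and `collect` are hypothetical.

```python
import socket
from typing import Callable, Tuple

# Hypothetical callback type: gets the raw datagram and the exporter's (ip, port).
Handler = Callable[[bytes, Tuple[str, int]], None]


def open_collector_socket(host: str = "0.0.0.0", port: int = 2055) -> socket.socket:
    """Bind a UDP socket that flow exporters can send datagrams to."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    return sock


def collect(sock: socket.socket, handle: Handler, max_packets: int) -> int:
    """Receive up to max_packets datagrams, passing each one to handle()."""
    received = 0
    while received < max_packets:
        data, exporter = sock.recvfrom(65535)  # one flow export packet per datagram
        handle(data, exporter)                 # exporter address identifies the source device
        received += 1
    return received
```

A production collector would loop indefinitely; the `max_packets` bound only keeps the sketch testable. UDP is connectionless and unacknowledged, so datagrams that arrive faster than the collector can read them are simply lost. This is one reason ingest capacity matters.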

Kentik in brief: Kentik provides a cloud-scale NetFlow collector and analyzer as part of the Kentik Network Intelligence Platform. Kentik ingests NetFlow and other flow formats at scale, including IPFIX, sFlow, and J-Flow, and supports cloud flow logs, then makes the data immediately usable for troubleshooting, capacity planning, and traffic analytics. This “big data” approach replaces fixed-capacity collector appliances with a horizontally scalable system that supports higher ingest volume, longer retention, deeper analytics, and faster query-driven investigations.


Main Functions of NetFlow Collectors

A NetFlow Collector’s main functions include:

- Ingesting UDP flow datagrams from one or more NetFlow-enabled devices

- Unpacking binary flow data into text/numeric formats

- Performing data volume reduction through selective filtering and aggregation

- Storing the resulting data in flat files or a SQL database

- Synchronizing flow data to the NetFlow Analyzer application running on a separate computing resource
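The filtering, aggregation, and storage steps in the list above can be sketched as follows. The sketch makes several illustrative assumptions:

- Flow records have already been decoded into a hypothetical `Flow` tuple.
- The filter is an arbitrary minimum byte count.
- Aggregation drops the source port.
- Results go into a hypothetical SQLite table named `flow_summary`.

Real collectors choose their own keys, filters, and schemas.

```python
import sqlite3
from collections import defaultdict
from typing import Iterable, NamedTuple


class Flow(NamedTuple):
    """Hypothetical decoded flow record."""
    src_addr: str
    dst_addr: str
    src_port: int
    dst_port: int
    protocol: int
    octets: int


def reduce_flows(flows: Iterable[Flow], min_octets: int = 0) -> dict:
    """Filter out small flows, then sum bytes per (src, dst, dst_port, protocol)."""
    totals = defaultdict(int)
    for f in flows:
        if f.octets < min_octets:  # selective filtering
            continue
        # Aggregation: ephemeral source ports are dropped from the key,
        # so many client connections collapse into one row.
        key = (f.src_addr, f.dst_addr, f.dst_port, f.protocol)
        totals[key] += f.octets
    return dict(totals)


def store(conn: sqlite3.Connection, totals: dict) -> None:
    """Persist aggregated rows for the analyzer to query later."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS flow_summary ("
        "src_addr TEXT, dst_addr TEXT, dst_port INTEGER, protocol INTEGER, octets INTEGER)"
    )
    conn.executemany(
        "INSERT INTO flow_summary VALUES (?, ?, ?, ?, ?)",
        [(*key, octets) for key, octets in totals.items()],
    )
    conn.commit()
```

The trade-off is visible in the key choice. Every field removed from the aggregation key shrinks storage but also removes detail that can no longer be queried later.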

The NetFlow Collector and NetFlow Analyzer applications are two functions of a NetFlow analysis system or product. In some cases, the NetFlow analysis product implements both functions on the same server. This is appropriate when the volume of flow data generated by exporters is relatively low and localized.

When flow data generation is high, or when sources are geographically dispersed, the collector function can run on separate, geographically distributed servers (such as rackmount server appliances). The collectors then synchronize their data to a centralized analyzer server.
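Distributed collectors typically ship summaries rather than raw datagrams to the central analyzer. The sketch below illustrates that synchronization step under loud assumptions:

- Each site's collector keeps bytes per destination address in a `Counter`.
- The collector sends those totals as a JSON payload.
- The analyzer merges the payloads into global and per-site views.

The payload format and the names `export_summary` and `merge_summaries` are hypothetical, not a product protocol.

```python
import json
from collections import Counter


def export_summary(site: str, bytes_by_dst: Counter) -> str:
    """Serialize one collector's per-interval totals for shipment to the analyzer."""
    return json.dumps({"site": site, "bytes_by_dst": dict(bytes_by_dst)})


def merge_summaries(payloads: list[str]) -> tuple[Counter, dict]:
    """Merge payloads from many collectors into overall and per-site totals."""
    overall: Counter = Counter()
    per_site: dict = {}
    for raw in payloads:
        msg = json.loads(raw)
        counts = Counter(msg["bytes_by_dst"])
        per_site.setdefault(msg["site"], Counter()).update(counts)
        overall.update(counts)  # Counter.update adds counts instead of replacing them
    return overall, per_site
```

Keeping the per-site breakdown alongside the overall totals lets the central analyzer still answer "where did this traffic enter the network?" after the data has been merged.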

Limitations of Physical NetFlow Collectors

Historically, the most common way to run NetFlow collectors was on a physical, rackmounted Intel-based server running a Linux OS variant. More recently, flow collectors have been deployed on virtual machines. In either case, the fixed compute and storage capacity of a single machine severely limits how much detailed network flow data can be retained and analyzed.
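A back-of-the-envelope calculation shows why fixed storage becomes the bottleneck. Retention is roughly disk capacity divided by daily ingest, where daily ingest is flows per second, times stored bytes per flow, times 86,400 seconds.

The inputs below (2 TB of disk, 50,000 flows per second, 100 stored bytes per flow) are illustrative assumptions, not measurements. Real per-flow storage size depends on the fields kept and any compression.

```python
SECONDS_PER_DAY = 86_400


def retention_days(disk_bytes: float, flows_per_second: float, bytes_per_flow: float) -> float:
    """Days of flow history a fixed disk can hold at a steady ingest rate."""
    bytes_per_day = flows_per_second * bytes_per_flow * SECONDS_PER_DAY
    return disk_bytes / bytes_per_day


# Illustrative inputs only: 2 TB of disk, 50,000 flows/s, 100 stored bytes per flow.
print(f"{retention_days(2e12, 50_000, 100):.1f} days")  # prints "4.6 days"
```

Because retention scales inversely with ingest rate, doubling traffic on the same appliance halves the history it can keep. The only remedies on a single box are aggregating away detail or adding hardware.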

Cloud-scale NetFlow Collectors

Most recently, a unified, cloud-scale approach to NetFlow collector and analyzer architecture has emerged. In this architecture, a horizontally scalable big data system replaces physical or virtual collector and analyzer appliances. Big data systems allow for dramatically higher volumes of data ingest, longer data retention periods, deeper network traffic analytics, and more powerful anomaly detection. Kentik is an example of this cloud-scale collector/analyzer architecture. To learn more about modern approaches to NetFlow analysis, visit the Kentik Platform overview page.
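One generic way to scale ingest horizontally is to hash-partition incoming flow traffic across a pool of nodes. This is a common distributed-systems technique, not a description of Kentik's internals. The sketch below partitions by exporter address, which is one reasonable choice: NetFlow v9 and IPFIX templates are sent per exporter, so routing each exporter to one node keeps that template state in one place.

`node_for_exporter` is a hypothetical helper. It uses SHA-256 rather than Python's built-in `hash()`, because `hash()` on strings is randomized per process and would route the same exporter differently on different nodes.

```python
import hashlib
from collections import Counter


def node_for_exporter(exporter_ip: str, num_nodes: int) -> int:
    """Map an exporter to one ingest node with a hash that is stable across processes."""
    digest = hashlib.sha256(exporter_ip.encode("ascii")).digest()
    return int.from_bytes(digest[:8], "big") % num_nodes


if __name__ == "__main__":
    # Show how 200 documentation-range exporters spread over 4 nodes.
    exporters = [f"192.0.2.{i}" for i in range(1, 201)]
    load = Counter(node_for_exporter(ip, 4) for ip in exporters)
    print(sorted(load.items()))
```

Plain modulo hashing reshuffles most exporters whenever the node count changes. Systems that add and remove nodes often use consistent hashing instead to limit that movement.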

FAQs about NetFlow Collectors

What are the three components of a NetFlow analysis system?

A typical NetFlow system includes a NetFlow exporter (generates flow records), a NetFlow collector (receives and stores them), and a NetFlow analyzer (turns them into tables, charts, and insights for troubleshooting and planning). Kentik delivers a cloud-scale approach that combines collection + analysis workflows in one platform: Kentik Network Intelligence Platform.

What does a NetFlow collector do?

A NetFlow collector ingests UDP flow datagrams, unpacks binary flow data into usable formats, may reduce volume via filtering/aggregation, stores results (often in flat files or a SQL database), and synchronizes data to an analyzer. Kentik’s approach replaces per-server storage constraints with a scalable data engine designed for high-volume flow ingest and fast analytics: Kentik Platform.

What’s the difference between a NetFlow collector and a NetFlow analyzer?

The collector’s job is to receive and organize flow records; the analyzer’s job is to provide visualizations and tools to use that data for performance monitoring, troubleshooting, and capacity planning. Kentik provides the collector + analysis experience in a unified, cloud-scale platform so teams can go from ingest to investigation quickly: Kentik Platform.
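To make the division of labor concrete, the sketch below shows the analyzer side issuing a "top talkers" query over data a collector has already stored. It assumes a hypothetical SQLite table named `flows` with per-flow byte counts. The analyzer ranks source addresses by total bytes, the kind of table an analyzer would then chart.

```python
import sqlite3

# Hypothetical schema a collector might write and an analyzer might read.
SCHEMA = (
    "CREATE TABLE IF NOT EXISTS flows ("
    "src_addr TEXT, dst_addr TEXT, dst_port INTEGER, octets INTEGER)"
)


def top_talkers(conn: sqlite3.Connection, limit: int = 10) -> list[tuple[str, int]]:
    """Rank source addresses by total bytes sent, largest first."""
    return conn.execute(
        "SELECT src_addr, SUM(octets) AS total FROM flows "
        "GROUP BY src_addr ORDER BY total DESC LIMIT ?",
        (limit,),
    ).fetchall()
```

The collector only has to get rows into `flows`. Any number of analyzer queries, such as top talkers, top ports, or traffic over time, can then run against the same stored data.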

When should you run the collector and analyzer on separate systems?

When flow volume is relatively low and localized, the collector and analyzer functions can run on the same server. When flow generation is high or sources are geographically dispersed, collectors are often distributed and then synchronized to a centralized analyzer. Kentik’s cloud-scale architecture is designed for high-volume, multi-site environments without managing distributed collector appliances: Big Data NetFlow Collectors.

How do NetFlow collectors reduce data volume?

Many collectors perform volume reduction through selective filtering and aggregation so storage and compute don’t become bottlenecks. Kentik’s big-data approach is built to preserve and analyze flow telemetry at scale, reducing the need to throw away detail early: Big Data NetFlow Collectors.

What are the limitations of physical (or VM-based) NetFlow collectors?

Physical and VM-based collectors are constrained by fixed compute and storage, which limits how much detailed flow data can be retained and analyzed. Kentik’s cloud-scale model replaces fixed-capacity appliances with a horizontally scalable system designed for higher ingest and longer retention: NetFlow Collector and Kentik Platform.

What is a cloud-scale NetFlow collector?

A cloud-scale NetFlow collector uses a horizontally scalable “big data” architecture rather than a single appliance, enabling higher ingest volume, longer retention, deeper analytics, and stronger anomaly detection. Kentik uses this model to support operational use cases that require both scale and fast time-to-query: Big Data NetFlow Collectors and Kentik Platform.

Is there a NetFlow equivalent in public cloud environments?

Yes. In cloud environments, NetFlow-like data is commonly exported as cloud flow logs (for example, VPC Flow Logs). Kentik ingests cloud flow logs alongside traditional flow protocols so teams can analyze on-prem and cloud traffic in one workflow: Kentik Platform.
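Cloud flow logs are text rather than binary, but they carry much the same fields as NetFlow records. The sketch below parses one line in the AWS VPC Flow Logs default (version 2) format. That format has 14 space-separated fields, with `-` standing for fields that have no data, such as in NODATA or SKIPDATA records.

A custom log format configured on the flow log would need a different field list. The function name `parse_vpc_flow_log_line` is hypothetical.

```python
# Field order of the AWS VPC Flow Logs default (version 2) format.
FIELDS = (
    "version", "account_id", "interface_id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log_status",
)
INT_FIELDS = {"version", "srcport", "dstport", "protocol", "packets", "bytes", "start", "end"}


def parse_vpc_flow_log_line(line: str) -> dict[str, object]:
    """Turn one default-format flow log line into a dict; '-' becomes None."""
    values = line.split()
    if len(values) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(values)}")
    record: dict[str, object] = {}
    for name, value in zip(FIELDS, values):
        if value == "-":
            record[name] = None          # field not populated for this record
        elif name in INT_FIELDS:
            record[name] = int(value)    # ports, counters, and epoch timestamps
        else:
            record[name] = value
    return record
```

Once parsed, `srcaddr`, `dstaddr`, `srcport`, `dstport`, `protocol`, `packets`, and `bytes` map naturally onto the same fields a NetFlow record carries. This is what lets on-prem and cloud traffic be analyzed side by side.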

What should you look for in a NetFlow collector if you need real-time operations and fast investigations?

For operational use (not just planning reports), you want high ingest scale plus fast query response across large datasets so engineers can investigate incidents quickly. Kentik’s clustered data engine is built for high-volume ingest and fast querying with retained raw data for accurate troubleshooting and forensics: Kentik Platform.

Where can I learn more about NetFlow analysis and tools?

Start with a NetFlow overview and then explore flow analysis components, variants, and use cases. Helpful references are listed under the related reading and resources at the end of this article.

Kentik’s platform overview shows how Kentik applies these concepts at cloud scale: Kentik Platform.

Related Reading and Resources

- Kentipedia: NetFlow Overview

- NetFlow Guide: Types of Network Flow Analysis

- Kentipedia: NetFlow Tools

- Looking for the best NetFlow collector? Try Kentik today; it’s free to get started.