Transforming Human Interaction with Data Using Large Language Models and Generative AI

Summary

AI has been on a decades-long journey to revolutionize technology by emulating human intelligence in computers. Recently, AI has extended its influence to areas of practical use with natural language processing and large language models. Today, LLMs enable a natural, simplified, and enhanced way for people to interact with data, the lifeblood of our modern world. In this extensive post, learn the history of LLMs, how they operate, and how they facilitate interaction with information.

Since its inception, artificial intelligence has been on an iterative journey to revolutionize technology by emulating human intelligence in computers. Once thought about only by academics in university computer science departments, AI has extended its influence to various areas of practical use. Arguably the most visible of these today are natural language processing (NLP) and large language models (LLMs).

Natural language processing is a field focused on the interaction between computers and humans using natural human language. NLP enables a very natural and, by many accounts, enhanced human interaction with data, allowing for insights beyond human analytical capacity. It also facilitates faster, more efficient data analysis, especially considering the enormous size of the datasets we use in various fields today.

Large language models, or LLMs, are a subset of advanced NLP models capable of understanding and generating text that is coherent and contextually relevant. They represent a probabilistic model of natural language, similar to how texting applications predict the next word a human may input when typing. However, LLMs are much more sophisticated, generating a series of words, sentences, and entire paragraphs based on training from a vast body of text data.

AI, LLMs, and network monitoring

Generative AI and LLMs have become useful in a variety of applications including in aerospace, medical research, engineering, manufacturing, computer science, and so on. IT operations and network monitoring in particular have benefited from generative AI’s democratization of information. Most recently, LLMs have provided a new service layer between engineers building and monitoring networks and the vast amount of telemetry data they produce.

Built on a foundation of NLP, LLMs have even gone so far as to enable the democratization of information among anyone with access to a computer.

The origin and evolution of large language models

NLP is currently a major focus in the evolution of AI. NLP research concerns how computers can understand, interpret, and generate human language, making it a helpful bridge between computers, or more accurately, the data they contain and actual humans. The power and excitement around NLP is based on its ability to enable computers to comprehend the nuances of human language, therefore fostering a more intuitive and effective human-computer interaction.

Early development of natural language processing

The origins of NLP can be traced back to the late 1940s after World War II, with the advent of the very first computers. These early attempts in computer-human communication included machine translation, which involved the understanding of natural language instructions. Technically speaking, humans would input commands that both humans and computers could understand; therefore, it is a very early form of a natural language interface.

By the 1950s, experiments were done with language translation, such as the Georgetown-IBM experiment of 1954, in which computers automatically performed a crude translation of over 60 Russian sentences into English. At that time, much of the research around translation centered on a dictionary-based lookup. Therefore, work during this period focused on morphology, semantics, and syntax. As sophisticated as this may sound, no higher-level programming languages existed at this time, so much of the actual code was written in assembly language on very slow computers that were available only to a small number of academics and industry researchers.

Beginning around the early to mid-1960s, a shift toward research in interpretation and generation occurred, dividing researchers into distinct areas of NLP research.

The first, symbolic NLP, also known as “deterministic,” is a rule-based method for generating syntactically correct formal language. It was favored by many computer scientists with a linguistics background. The idea of symbolic NLP is to train a machine to learn a language as humans do, through rules of grammar, syntax, etc.

Another area, known as stochastic NLP, focuses on statistical and probabilistic methods of NLP. This meant focusing more on word and text pattern recognition within and among texts and the ability to recognize actual text characters visually.

In the 1970s, we began to see research that resembled how we view artificial intelligence today. Rather than simply recognizing word patterns or probabilistic word generation, researchers experimented with constructing and manipulating abstract and meaningful text. This involved inference knowledge and eventually led to model training, both of which are significant components of modern AI broadly, especially NLP and LLMs.

This phase, sometimes called the grammatico-logical phase, was a move toward using logic for reasoning and knowledge representation. This led to computational linguistics and the computational theory of language, most often attributed first to Noam Chomsky. Both can be traced back to symbolic NLP in that computational linguistics is concerned with the rules of grammar and syntax. By understanding these rules, the idea is that a computer can understand and potentially even generate knowledge and, in the context of NLP, better deal with a user’s beliefs and intentions.

Discourse modeling is yet another area that emerged during this time. Discourse modeling looks at the exchanges between humans and computers. It attempts to process communication concepts such as the need to change “you” in a speaker’s question to “me” in the computer’s answer.

Through the 1980s and 1990s, research in NLP focused much more on probabilistic models and experimentation in accuracy. By 1993, probabilistic and statistical methods of handling natural language processing were the most common models in use. In the early 2000s, NLP research became much more focused on information extraction and text generation due mainly to the vast amounts of information available on the internet.

This was also when statistical language processing became a major focus. Statistical language processing is most concerned with providing results that are valuable in practice. This has led to familiar uses such as information extraction and text summarization. Also, in the early 2000s, statistical language processing led to what many call language processing. Though some of the first and most compelling practical uses for NLP were spell checking and grammar applications and translation tools such as Google Translate, statistical language processing led to the rise of early chatbots, predictive sentence completion such as in texting applications, and so on.

In the 2010s and up to today, research has focused on enhancing the ability to discern a human’s tone and intent and reducing the number of parameters and hyperparameters needed to train an LLM, both of which will be discussed in a later section.

This represents two goals: first, a push to make the interaction with a human more natural and accurate, and second, the reduction of both the financial cost and time needed to train LLMs.

Models used by LLMs

Remember that modern LLMs employ a probabilistic model of natural language. A probabilistic model is a mathematical framework used to represent and analyze the probability of different outcomes in a process. This mathematical framework is essential for generating logical, context-aware, natural language text that captures the variety and even the uncertainty that exists in everyday human language.

N-gram language models

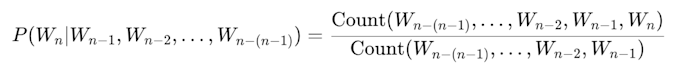

An n-gram model is a probabilistic model used to predict the next item in a sequence, such as a word in a sentence. The term “n-gram” refers to a sequence of n items (such as words), and the model is built on the idea that the probability of a word in a text can be approximated by the context provided by the preceding words. Therefore, the n-gram model is used in language models to assign probabilities to sentences and sequences of words.

In the context of predicting the probability of the next word in a sentence based on a body of training data (text), the n-gram formula is relatively straightforward.

Given a sequence of words, the probability of a word *Wn* appearing after a sequence of n - 1 words can be estimated using the formula below.
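
Written out in a common notation, the estimate looks like the following. The symbols here are assumptions chosen to match the description in the next paragraph: $W_1, \dots, W_n$ are the words of the n-gram, and $C(\cdot)$ is the number of times a sequence appears in the training data.

$$
P(W_n \mid W_1, \dots, W_{n-1}) = \frac{C(W_1, \dots, W_{n-1}, W_n)}{C(W_1, \dots, W_{n-1})}
$$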

In this formula, on the left we’re representing the conditional probability of the word Wn occurring given the preceding n - 1 words. On the right we divide the count of the specific n-gram sequence in the training dataset by the count of the preceding n - 1 words. This becomes more complex as the value of n increases, mostly because the model needs to account for longer sequences of preceding words.

Conditioning each word on its entire history, as the chain rule of probability would require, is something many researchers consider impractical because a model is unable to effectively keep track of all possible word histories. This is why n-gram models limit the history to the preceding n - 1 words. The n-gram model isn’t typically used anymore in modern large language models, but it’s essential to understand this model as the foundation of how LLMs evolved.

What is an n-gram?

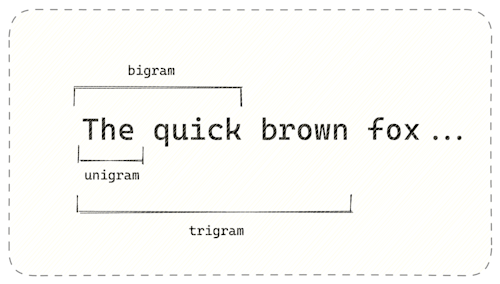

An n-gram is a sequence of n consecutive items (typically words) in a text, with n being a number.

For instance, take a look at the sentences below:

The n-gram model estimates the probability of each word based on the occurrence of its preceding n - 1 words. This is called probability estimation. In the example above, for the bigram model, the probability of the word “fox” following “brown” is calculated by looking at how often “brown fox” appears in the training data.
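
As a rough illustration of probability estimation for a bigram model, here is a minimal Python sketch. The tiny corpus and the function name `bigram_probability` are made up for this example; a real model would be estimated from a far larger body of text and would usually apply smoothing for unseen word pairs.

```python
from collections import Counter

def bigram_probability(tokens, prev_word, word):
    """Estimate P(word | prev_word) as count(prev_word word) / count(prev_word)."""
    # Count every pair of consecutive tokens.
    bigrams = Counter(zip(tokens, tokens[1:]))
    # Count each token as a context only where it has a successor.
    contexts = Counter(tokens[:-1])
    if contexts[prev_word] == 0:
        return 0.0  # context never seen in the training data
    return bigrams[(prev_word, word)] / contexts[prev_word]

# Hypothetical toy corpus, already split into word tokens.
corpus = "the quick brown fox jumps over the lazy dog . the brown dog sleeps .".split()

p_fox = bigram_probability(corpus, "brown", "fox")
p_dog = bigram_probability(corpus, "brown", "dog")
print(p_fox, p_dog)
```

In this toy corpus “brown” appears twice, once followed by “fox” and once by “dog,” so each continuation gets half of the probability mass.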

The model operates under the Markov assumption, which suggests that predicting the next item in the sequence depends only on the previous n - 1 items and not on any earlier items. This makes the computational problem more manageable and limits the context that the model can consider.

N-gram models are used to create statistical language models that can predict the likelihood of a sequence of words. This is fundamental in applications like speech recognition, text prediction, and machine translation. Because they can generate text by predicting the next word given a sequence of words and by analyzing the probabilities of entire word sequences, n-gram models can also suggest corrections in text.

Using tokenization to build an n-gram model

To build an n-gram model, we first collect a large body of text data. This text is then broken down into tokens (usually words). Ultimately, this process, called tokenization, splits text into individual manageable units of data the LLM can digest. Though these tokens are typically words in most NLP applications, they can also be characters, subwords, or phrases.

Take the image below, for example.

In this example, word-level tokenization splits the text into individual words.

Word level tokenization:

We can also break the text down to individual characters:

Thus, the main goal of tokenization is to convert a text (like a sentence or a document) into a list of tokens that can be easily analyzed or processed by a computer. Once the text is tokenized into words, these tokens are used to form n-grams. For example, in a bigram model, pairs of consecutive tokens are formed; in a trigram model, triples of consecutive tokens are created, and so on.
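
The steps above can be sketched in a few lines of Python. This is a simplified illustration, not how production tokenizers work: the sample sentence and the helper names (`word_tokenize`, `char_tokenize`, `ngrams`) are assumptions made for this example, and the word tokenizer simply keeps runs of letters and digits.

```python
import re

def word_tokenize(text):
    """Word-level tokenization: lowercase the text and keep runs of letters or digits."""
    return re.findall(r"\w+", text.lower())

def char_tokenize(text):
    """Character-level tokenization: every non-whitespace character is a token."""
    return [ch for ch in text if not ch.isspace()]

def ngrams(tokens, n):
    """Slide a window of size n over the tokens to form n-grams."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

words = word_tokenize("The quick brown fox")
chars = char_tokenize("fox")
bigrams = ngrams(words, 2)
trigrams = ngrams(words, 3)

print(words)    # word tokens
print(chars)    # character tokens
print(bigrams)  # pairs of consecutive word tokens
```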

Tokenization also involves decisions about handling special cases like punctuation, numbers, and special characters. For example, should “don’t” be treated as one token or split into “do” and “n’t”?

Embeddings are the actual representations of these characters, words, and phrases, as vectors in a continuous, high-dimensional space. During model training and subsequent tokenization, the optimal vector representation of each word is determined to understand how they relate to each other. This is one mechanism used by the model to determine what words logically go together or what words are more likely to follow from another word or phrase.

In the image below from a blog post by Vaclav Kosar, you can see how the sentence “This is a input text.” is tokenized into words and embeddings passed into the model.

Challenges and limitations of n-gram models

The main challenge with n-gram models is data sparsity. As n increases, the number of possible n-grams grows rapidly, so the model requires a much larger dataset to capture the probabilities of n-grams accurately. A second challenge is contextual limitation: n-grams provide only a limited window of context (n - 1 words). This often isn’t enough to fully understand the meaning of a sentence, especially for smaller values of n.

Therefore, due to their limitations, n-gram models have been largely superseded by more advanced models like neural networks and transformers, which can better capture longer-range dependencies and contextual nuances.

A shift to neural network models

From traditional NLP to neural approaches

In recent years, the industry has shifted away from using statistical language models toward using neural network models.

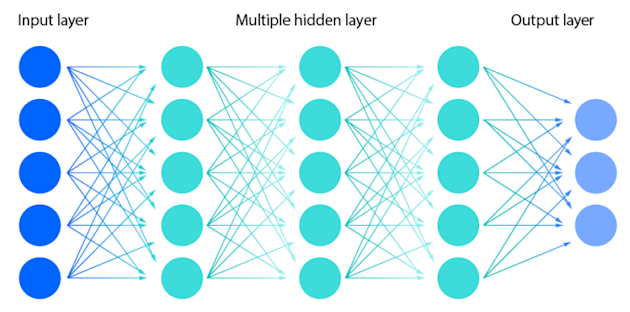

Neural network models are computational models inspired by the human brain. They consist of layers of interconnected nodes, or “neurons,” each of which performs simple computations. Though popular culture may conflate neural networks with how the actual human brain works, they are quite different. Neural networks can certainly produce sophisticated outputs, but they do not yet approach the complexity of how a human mind functions.

In a neural network, the initial input layer receives input data, followed by hidden layers, which perform computations through “neurons” that are interconnected. The complexity of the network often depends on the number of hidden layers and the number of neurons in these layers. Finally, the output layer produces the final output of the network.

In the image below, you can see the visual representation of the input layers, hidden layers, and the output layers, each with neurons performing individual computations within the model.

How neural networks function

After data is fed into the input layer, each neuron in the hidden layers processes the data, often using non-linear functions, to capture complex patterns. Each individual neuron, or node, can be looked at as its own linear regression model, composed of input data, weights, a threshold, and its output.

The result is then passed to the next layer for the subsequent computation, then to the next layer, and so on, until the result reaches the output layer, which outputs some value. This could be a classification, a prediction, etc.
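
To make the flow from input layer to output layer concrete, here is a minimal feed-forward sketch in plain Python. The weights, the layer sizes, and the choice of a sigmoid activation are all assumptions picked for illustration, and the sketch uses a bias term where the text above speaks of a threshold.

```python
import math

def sigmoid(x):
    """A common non-linear activation that squashes any value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

def layer(inputs, weight_rows, biases):
    """One layer: every neuron sees the same inputs but has its own weights."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

def feed_forward(inputs, layers):
    """Pass the data through each layer in turn; the last result is the output."""
    activations = inputs
    for weight_rows, biases in layers:
        activations = layer(activations, weight_rows, biases)
    return activations

# Hypothetical network: 2 inputs, one hidden layer of 2 neurons, 1 output neuron.
network = [
    ([[0.5, -0.5], [0.25, 0.75]], [0.0, 0.0]),  # hidden layer
    ([[1.0, -1.0]], [0.0]),                     # output layer
]
output = feed_forward([1.0, 1.0], network)
print(output)
```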

Normally, this is done in only one direction from the input layer, through successive hidden layers, and then the output layer in a feed-forward design. However, there is some backward activity called backpropagation. Backpropagation is used to fine-tune the model’s parameters during the training process.

Neural networks learn from data in the sense that if an end result is inaccurate in some way, the model can make adjustments autonomously to reach more accurate results. Backpropagation weighs the accuracy of the final result from the output layer and, based on the result’s accuracy, will use optimization algorithms like gradient descent to “go back” through the layers to adjust the model parameters to improve its accuracy and performance.

In the image below we can see how backpropagation will use the results from the output layer to go backward in the model to make its adjustments.

This process can be done many times during training until the model produces sufficiently accurate results and is considered “fit for service.”
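
The training loop described above can be sketched with the smallest possible model: a single linear neuron with one weight and one bias. With only one neuron, backpropagation reduces to applying the chain rule once, so this is a simplified stand-in rather than a full multi-layer implementation. The data, learning rate, and epoch count are assumptions chosen for illustration.

```python
def train(samples, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # starting values for the parameters
    n = len(samples)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in samples:
            error = (w * x + b) - y       # forward pass, then compare to the target
            grad_w += 2 * error * x / n   # how the loss changes as w changes
            grad_b += 2 * error / n       # how the loss changes as b changes
        # Step each parameter a little in the direction that reduces the loss.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Hypothetical training data generated from y = 2x + 1.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train(samples)
print(round(w, 3), round(b, 3))
```

After enough iterations the learned values settle very close to the weight of 2 and bias of 1 that generated the data.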

Parameters and hyperparameters are discussed in greater detail below.

Neural networks in NLP

In natural language processing, neural networks are used to create word embeddings (like Word2Vec or GloVe), where words are represented as dense vectors in a continuous vector space. This helps capture semantic relationships between words.

Notice in the diagram below where we can see the relationships between and among words in a given dataset. In this visualization from chapter 6 of Jurafsky and Martin’s book, Speech and Language Processing (still in progress), we see a two-dimensional projection of embeddings for some words and phrases, showing that words with similar meanings are nearby in space.
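
One common way to measure how “nearby” two embeddings are is cosine similarity. The sketch below uses made-up three-dimensional vectors purely for illustration; real embeddings such as Word2Vec or GloVe are learned from data and typically have hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1 means same direction, -1 opposite."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings, invented for this example.
embeddings = {
    "good": [0.9, 0.8, 0.1],
    "great": [0.85, 0.9, 0.15],
    "terrible": [-0.8, -0.7, 0.2],
}

sim_close = cosine_similarity(embeddings["good"], embeddings["great"])
sim_far = cosine_similarity(embeddings["good"], embeddings["terrible"])
print(round(sim_close, 3), round(sim_far, 3))
```

With these invented vectors, “good” and “great” score close to 1, while “good” and “terrible” score below zero.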

Several neural networks have been used in recent years, each of which either improves on another or serves a different purpose.

Recurrent neural networks (RNNs) are designed to handle data sequences, making them suitable for text. They process text sequentially, maintaining an internal state that encodes information about the sequence processed so far. They’re used in tasks like language modeling, text generation, and speech recognition but struggle with long-range dependencies due to vanishing gradient problems.

Long short-term memory (LSTM) networks are a type of RNN and are particularly effective in capturing long-range dependencies in text, which is crucial for understanding context and meaning. LSTMs include a memory cell and gates to regulate the flow of information, addressing the vanishing gradient problem of standard RNNs. LSTMs are widely used in sequence prediction, language modeling, and machine translation.

Convolutional neural networks (CNNs) are primarily known for image processing, but CNNs are also used in NLP to identify patterns in sequences of words or characters.

Gated recurrent units (GRUs) streamline RNNs and the architecture of LSTMs for greater efficiency. They merge the forget and input gates of LSTMs into a single mechanism and, similar to LSTMs, are used in various sequence processing tasks.

Transformers, like those used in BERT or GPT, have revolutionized NLP and significantly improved NLP tasks’ performance over earlier models. Transformers rely on attention mechanisms, allowing the model to focus on different parts of the input sequence when producing each part of the output, making them highly effective for various complex tasks.

Applications in NLP

Neural networks, especially transformers, are used for generating logical and contextually relevant text. They have significantly improved the quality of machine translation as well as sentiment analysis, or in other words, analyzing and categorizing human opinions expressed in text. Neural networks are also used extensively to extract structured information from unstructured text, like named entity recognition.

Advantages and challenges

Neural networks can capture complex patterns in language and are highly effective in handling large-scale data, one significant advantage over n-gram models. When properly trained, they generalize well to new, unseen data.

However, neural networks require substantial computational resources and often need large amounts of labeled data for training. They can also be seen as “black boxes,” making it challenging to understand how they make specific decisions or predictions.

Nevertheless, their ability to learn from data and capture complex patterns makes them especially powerful for tasks involving human language. They are a significant step forward in the ability to process and understand natural language, providing the backbone for many modern LLMs.

Transformers

A transformer is an advanced neural network architecture introduced in the 2017 paper “Attention Is All You Need” by Vaswani et al. This architecture is distinct from its predecessors due to its unique handling of sequential data, like text, and its reliance on the attention mechanism.

A transformer is a versatile mechanism that has been successfully applied to a broad range of NLP tasks, including language modeling, machine translation, text summarization, sentiment analysis, question answering, and more.

These models are typically complex and require significant computational resources, but their ability to understand and generate human language has made them the backbone of many advanced LLMs.

Architecture

Attention mechanism

The core innovation in transformers is the attention mechanism, which enables the model to focus dynamically on different parts of the input sequence when making predictions. This mechanism is crucial for understanding context and relationships within the text.

Attention allows the model to focus on the most relevant parts of the input for a given task, similar to how humans pay attention to specific aspects of what they see or hear. It assigns different levels of importance, or weights, to various parts of the input data. The model can then prioritize which parts to focus on based on these weights.

Transformers use a type of attention called self-attention. Self-attention allows each position in the input sequence to attend to all positions in the previous layer of the model simultaneously. It then calculates scores that determine how much focus to put on other parts of the input for each word in the sequence. This involves computing a set of queries, keys, and values from the input vectors, typically using linear transformations.

The attention scores are then used to create a weighted sum of the values, which becomes the output of the self-attention layer. The output is then used in subsequent layers of the transformer.
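
Here is a minimal single-head self-attention sketch in plain Python, following the scaled dot-product form from “Attention Is All You Need.” The input vectors and the identity projection matrices are assumptions chosen so the numbers are easy to follow; in a trained model the query, key, and value projections are learned.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(matrix, vector):
    """Apply a linear transformation (matrix) to a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def self_attention(inputs, w_q, w_k, w_v):
    # Project every input vector into a query, a key, and a value.
    queries = [matvec(w_q, x) for x in inputs]
    keys = [matvec(w_k, x) for x in inputs]
    values = [matvec(w_v, x) for x in inputs]
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for q in queries:
        # Score this position against every position, then normalize.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # The output is the weighted sum of the value vectors.
        out = [sum(w * v[d] for w, v in zip(weights, values))
               for d in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

identity = [[1.0, 0.0], [0.0, 1.0]]              # hypothetical projections
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]    # hypothetical input vectors
outputs, weights = self_attention(tokens, identity, identity, identity)
print(weights[0])
```

For the first token, the weights are highest for the positions whose keys point in a similar direction to its query, which is the “focus” the text describes.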

Attention allows transformers to understand the context of words in a sentence, capturing both local and long-range dependencies in text. Multi-head attention runs multiple attention mechanisms (heads) simultaneously. Each head looks at the input from a different perspective, focusing on different parts of the input. Using multiple heads allows the model to identify the various types of relationships in the data.

Many transformers use an encoder-decoder framework. The encoder processes the input (like text in one language), and the decoder generates the output (like translated text in another language). However, some models, like GPT, use only the decoder part for tasks like text generation.

Parallel processing

Unlike earlier sequential models (like RNNs and LSTMs), transformers process the entire input sequence at once, not one element at a time. This allows for more efficient parallel processing and faster training. Parallel processing is most concerned with model performance and efficiency. Therefore, we run multiple tasks, such as tokenization, data processing, hyperparameter tuning, and so on, simultaneously to reduce the operational cost of training the model.

Parallel processing also allows for better handling of longer sequences, though attention mechanisms can be computationally intensive for especially long sequences. However, this can be addressed by using a layered structure.

Layered structure

A layered transformer model structure involves multiple stacked layers of self-attention and feed-forward networks, which contribute to the transformer’s ability to understand and generate language.

Each layer in a transformer can learn different aspects of the data, so as information passes through successive layers, the model can build increasingly complex representations. For example, in NLP, lower layers might capture basic syntactic structures, while higher layers can understand more abstract concepts like context, sentiment, or semantic relationships.

Each layer computes self-attention, allowing the model to refine its understanding of the relationships between words in a sentence. This results in rich contextual embeddings for words, where the entire sequence informs the representation of each word.

Finally, the abstraction achieved through multiple layers allows transformers to handle new data the model has never seen. It allows previously learned patterns and relationships to be applied more effectively to new and sometimes completely different contexts.

Below is a diagram of a transformer model architecture from Vaswani et al.’s paper.

Notice how the Transformer follows this overall architecture using stacked self-attention and point-wise, fully connected layers for both the encoder (left) and decoder (right).

Training LLMs

Data preparation and preprocessing

First, before we can begin the data preparation process, we select a dataset. For LLMs, this involves choosing a large and diverse body of text relevant to the intended application. The dataset might include books, articles, websites, or, in the case of some popular LLMs, the public internet itself.

Next, the data must be cleaned and preprocessed, usually involving tokenization, which, as mentioned in the earlier section on tokenization, is the process of converting text into tokens, typically individual words. The data then goes through normalization (like lowercasing), removing unnecessary characters, and sometimes subword tokenization (breaking words into smaller units).
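
A very small version of this cleaning step might look like the following. The function name `preprocess` and the specific rules (lowercase everything, replace anything that is not a letter, digit, or whitespace) are assumptions for illustration; real pipelines make these choices per language and per application.

```python
import re

def preprocess(text):
    """Normalize and clean raw text, then split it into word tokens."""
    text = text.lower()                        # normalization: lowercasing
    text = re.sub(r"[^a-z0-9\s]", " ", text)   # replace unnecessary characters with spaces
    return text.split()                        # split on whitespace

tokens = preprocess("Hello, World!  LLMs are trained on TEXT.")
print(tokens)
```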

Model parameters

After this initial data preparation and preprocessing stage, we initialize the model parameters. Parameters are the internal values of the model, such as the weights attached to each neuron, that define its behavior. For modern LLMs, the number of parameters in a model can be in the tens of billions, requiring significant computational power and resources.
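
To get a feel for how quickly parameter counts grow, the sketch below counts the weights and biases in a small fully connected network. The layer sizes are hypothetical, and transformer-based LLMs have additional kinds of parameters, so treat this only as a back-of-the-envelope illustration.

```python
def count_parameters(layer_sizes):
    """Count weights plus biases for a fully connected network.

    Each neuron has one weight per input from the previous layer, plus one bias.
    """
    return sum((n_in + 1) * n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

# Hypothetical network: 784 inputs, 128 hidden neurons, 10 outputs.
total = count_parameters([784, 128, 10])
print(total)
```

Even this tiny network has just over one hundred thousand parameters, which helps explain why models with many wide layers reach into the billions.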

In machine learning, parameters are the variables that allow the model to learn the rules from the data. Parameters are not generally entered manually. Instead, during model training, which includes backpropagation, the algorithm adjusts parameters, making parameters critical to how a model learns and makes predictions.

There is also a concept of parameter initialization, which provides the model with a small set of meaningful and relevant values at the outset of a run so that the model has a place to start. After each iteration, the algorithm can adjust those initial values based on the accuracy of each run.

Because there can be many parameters in use, a successful model will need to adjust discrete values and find an appropriate overall combination when adjusting parameters. For example, modifying one value may make the model more efficient but sacrifice accuracy.

Once the parameters’ best overall values and combinations are figured out, the model has completed its training and is considered ready for making predictions on new and unseen data.

Model parameters are crucial because they significantly influence the model’s performance, learning ability, and efficiency. Additionally, model parameters directly influence operational and financial costs, and considering there may be millions or billions of parameters that can be adjusted, this can be a computationally expensive process.

Hyperparameters

Hyperparameters differ from parameters in that they do not learn their values from the underlying data. Instead, hyperparameters are manually entered by the user and remain static during model training.

Hyperparameters are entered by a user (engineer) at the outset of training an LLM. They are often best guesses at first and adjusted based on trial and error. This brute force method may sound unsophisticated, but it is quite common, especially at the outset of model training.

Examples of hyperparameters are the learning rate used in neural networks, the number of branches in a decision tree, or the number of clusters in a clustering algorithm. These are specific values set before training, though they can be adjusted throughout training iterations.

Because hyperparameters are fixed, the values of the parameters (described in the section above) are influenced and ultimately constrained by the values of hyperparameters.
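
The trial-and-error approach can be sketched as a simple search over candidate values. In this toy example the learning rate and the number of epochs are the hyperparameters, set by hand, while the weight `w` and bias `b` are the parameters learned from the data. The model, data, and candidate values are all assumptions for illustration.

```python
def train(samples, learning_rate, epochs):
    """Fit y = w * x + b by gradient descent; w and b are the learned parameters."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = sum(2 * ((w * x + b) - y) * x for x, y in samples) / n
        grad_b = sum(2 * ((w * x + b) - y) for x, y in samples) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

def mean_squared_error(samples, w, b):
    return sum(((w * x + b) - y) ** 2 for x, y in samples) / len(samples)

# Hypothetical data generated from y = 2x + 1.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

# Try each candidate learning rate with a fixed training budget.
results = {}
for lr in (0.001, 0.01, 0.1):
    w, b = train(samples, learning_rate=lr, epochs=200)
    results[lr] = mean_squared_error(samples, w, b)

best_lr = min(results, key=results.get)
print(best_lr, results)
```

In practice the error would be measured on held-out data rather than on the training samples, and the search would cover several hyperparameters at once.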

Parameter tuning

Tuning a model can be a balancing act of finding the optimal set of these parameters. Fine-tuning these parameters is crucial for optimizing the model’s performance and efficiency to achieve a particular task. It must be done in light of the nature of the input data and resource constraints.

For example, larger models with more parameters require more computational power and memory, and overly large models might “overfit” the training data and perform poorly on unseen data. Increasing the number of layers in a neural network may provide marginally better results but sacrifice time and financial cost to achieve.

Today, frameworks such as PyTorch and TensorFlow are used to abstract much of this activity to more easily build and train neural networks. Both machine learning libraries are open source and very popular both in academia and production environments.

PyTorch, first released in 2016 by developers at Meta, integrates well with Python and is popular, particularly among researchers and academics. Today, PyTorch is governed by the PyTorch Foundation.

TensorFlow, first released in 2015 by the Google Brain Team, is often used in production environments due to its ability to scale well, flexible architecture, and integration with a variety of programming languages.

Challenges for transformer models

Transformer models, especially large ones, are computationally intensive and require substantial training resources, often necessitating powerful GPUs or TPUs. Additionally, they typically need large datasets for training to perform optimally, which can be a limitation in data-scarce scenarios.

Also, the complexity of these models can make them harder to interpret and understand compared to simpler models. Nevertheless, the transformer model’s ability to process data sequences in parallel, combined with the powerful attention mechanism, allows it to capture complex linguistic patterns and nuances, leading to significant advancements in various language-related tasks.

Challenges for LLMs

Hallucinations

A hallucination is when an LLM generates information or responses that are factually incorrect, nonsensical, biased, or not based on reality. The responses themselves may be grammatically correct and intelligible, so “hallucinations” in this context means incorrect or misleading information, not the collection of completely random letters and words.

Why LLMs hallucinate

Hallucinations occur due to the inherent limitations and characteristics of how LLMs generate text and, ultimately, how they are trained. They can be caused by limitations of the data set, such as the absence of recent data on a subject area. Sometimes, this has no bearing on the user’s question or prompt, but in cases in which it does, the LLM is limited to old data, resulting in an inaccurate response.

Data may also be limited because there is a general lack of information on a topic. For example, a brand-new technology will have very little literature on it to be added to an LLM’s data set. Therefore, the results, though presented with a confident tone, may be inaccurate or misleading.

Also, the LLM will generate inaccurate or biased results if the data set used to train a model is biased or lacks diversity. For example, suppose the data set used to train is based solely on writing from one group of scholars without considering writings from scholars with opposing views. In that case, the LLM will confidently produce results biased toward the data on which it was trained.

Another issue is model overfitting and underfitting, which is well understood in statistical analysis and machine learning. Overfitting the training data means the model is too specific for the data set on which it is trained. In this scenario, the model can operate accurately only on the particular data set. Underfitting occurs when the model is trained on such a broad data set that it is generally unable to operate on a specific data set or, in the case of an LLM, a corpus of data relating to a particular subject area.

Solving for hallucinations

Training data

Ensuring the LLM is trained on accurate and relevant data is the first step toward mitigating hallucinations.

First, it’s critical that the body of training data is sufficiently large and diverse so that there is enough information to process a query in the first place. This also has the effect of mitigating bias in the results of the model.

Also, data must be regularly validated so that false information can be identified before (and after) the model runs. This validation process is not trivial and requires filtering, data cleaning, data standardization and format normalization, and even manual checks, all of which contribute to ensuring the training data is accurate.

Next, test runs can be performed on a small sample of the data to test the accuracy of the model’s results. This process is iterative and can be repeated until the results are satisfactory. For each iteration, changes can be made to the model, the dataset itself, and other LLM components to increase accuracy.

Additionally, the data should be updated over time to reflect new and more current information. Updating the model with up-to-date data helps reduce the likelihood of it providing outdated, misleading, incorrect, or outright ridiculous information.

Retrieval-augmented generation

Currently, many in LLM research and industry have settled on retrieval-augmented generation, or RAG, as a method to deal with hallucinations. RAG helps ground the LLM’s generated responses in real-world data. It does this by pulling information from reliable external sources or databases, decreasing the probability of the model generating nonsensical or incorrect facts.

RAG retrieves relevant information at query time and supplies it to the model along with the prompt, which means the generated response can be checked against the information the model received. However, this presupposes that the external data RAG uses is also of high quality, accurate, and reliable.
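A minimal sketch of the retrieval step, in plain Python, might look like the following. Everything here is an assumption made for illustration: the `DOCUMENTS` list stands in for an external source, and simple word overlap stands in for the similarity search a production system would more likely use. The resulting prompt would then be passed to an LLM, which is not shown.

```python
import re

# Hypothetical knowledge base; in practice this would be an external
# document store or database.
DOCUMENTS = [
    "BGP is a path-vector routing protocol used between autonomous systems.",
    "OSPF is a link-state routing protocol used within a single network.",
    "SNMP is used to collect metrics from network devices.",
]


def tokenize(text):
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, k=1):
    """Rank documents by how many words they share with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Place the retrieved passages ahead of the user's question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


prompt = build_prompt("Which protocol collects metrics from devices?", DOCUMENTS)
print(prompt)
```

Because the model is asked to answer from the retrieved passage, the quality of the answer depends directly on the quality of the documents being searched.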

Model training and adjusting parameters

As described in previous sections on neural networks and backpropagation, an LLM can adjust its parameters during training based on the accuracy of its results. And though the number of parameters can be in the billions, adjusting parameters is still more productive than retraining the model altogether, since even a small number of parameter changes can have significant effects on the output.

Because the model doesn’t yet have any meaningful knowledge or the ability to generate relevant responses at the very beginning of training, parameters are initially set to random or best-guess values. Then, the model is exposed to a large dataset, which, in the case of LLMs, would be a vast body of diverse text.

In supervised learning scenarios, this text comes with corresponding outputs that the model should ideally generate. During training, an input (such as a piece of text) is fed into the model, which makes a prediction or generates a response. This is called a forward pass.

The model’s output is then compared to the expected output (from the training dataset), and the difference is calculated using a loss function. This function quantifies how far the model’s prediction deviates from the desired output. The model uses this loss value to perform backpropagation, which, as explained in an earlier section, is a critical process in neural network training.

Remember that backpropagation involves using the results of the output layer to adjust parameters to achieve more accurate results. It does this by calculating the gradient of the loss function with respect to each parameter. These gradients are used to make the actual adjustments to the model’s parameters. Often, training employs an optimization algorithm like Stochastic Gradient Descent (SGD) or its variants (like Adam) with the idea of adjusting each parameter in a direction that minimizes the loss.

This process of making predictions, calculating loss, and adjusting parameters is repeated over many iterations and epochs (an epoch is one complete pass through the entire training dataset) to, again, minimize loss and produce a more accurate result.
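The loop described above can be sketched in a few lines of plain Python. This is a deliberately tiny stand-in, assuming a model with a single parameter `w`, a squared-error loss, and hand-picked toy data. An actual LLM has billions of parameters and computes its gradients through backpropagation across many layers.

```python
# Toy training data: the expected outputs follow y = 3x (hypothetical values).
inputs = [1.0, 2.0, 3.0, 4.0]
targets = [3.0, 6.0, 9.0, 12.0]

w = 0.0               # the single parameter, starting from a best-guess value
learning_rate = 0.01  # a hyperparameter chosen by hand
num_epochs = 50       # one epoch is one full pass through the data

epoch_losses = []
for epoch in range(num_epochs):
    total_loss = 0.0
    for x, y in zip(inputs, targets):
        prediction = w * x                   # forward pass
        loss = (prediction - y) ** 2         # loss function (squared error)
        gradient = 2 * (prediction - y) * x  # gradient of the loss w.r.t. w
        w -= learning_rate * gradient        # SGD step that reduces the loss
        total_loss += loss
    epoch_losses.append(total_loss / len(inputs))

print("learned w:", round(w, 4))
print("first and last epoch loss:", epoch_losses[0], epoch_losses[-1])
```

Each pass repeats the same three steps (predict, measure the loss, adjust the parameter), and the average loss per epoch shrinks as `w` approaches the value that generated the data.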

Temperature

Temperature is a parameter that influences the randomness, or what some would consider the creativity, of the responses generated by the model. It is an essential component of how LLMs generate text.

When generating a text response, an LLM predicts the probability of each possible next word based on the given input and its training. This results in a probability distribution of the entire vocabulary at the LLM’s disposal. The model uses this to determine what word or sequence of words actually makes sense in a response to a prompt or input.

Temperature is a hyperparameter, or in other words, a value set by the user rather than learned from data, which the model uses to convert the raw output scores for each word into probabilities. It’s one method we can use to increase or decrease randomness, creativity, and diversity in a generated text. A high temperature value (greater than 1) makes the model more likely to choose less probable words, making the responses less predictable and sometimes more nonsensical or irrelevant. A low temperature value (less than 1) makes the model favor the most probable words, making the responses more predictable.

When the temperature is set to 1, the model follows the probability distribution as it is, without any adjustment. This is often considered a balanced choice, offering a mix of predictability and creativity, though in practice, many will default to a slightly lower value of 0.7, for example.

Adjusting the temperature can be useful for different applications. For creative tasks like storytelling or poetry generation, a higher temperature might be preferred to introduce novelty and variation. For more factual or informative tasks, a lower temperature can help maintain accuracy and relevance.

In the image below, see how the temperature setting in an LLM can be seen almost as a slider that can be adjusted based on the desired output.

This image is a good visualization of what occurs when we adjust the temperature hyperparameter. However, to achieve this change, we utilize the softmax function, which plays a critical role in how the temperature hyperparameter is actually applied in the LLM.

LLMs use the softmax function to convert the raw output scores (logits) from a model into probability values. In the formula below, $P(y_i)$ is the probability of the $i$-th event, $z_i$ is the logit for the $i$-th event, and $K$ is the total number of events.

$$P(y_i) = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}$$

To incorporate temperature into the function, we divide the logits ($z_i$) by the temperature value we select ($T$) before applying softmax. The new formula is:

$$P(y_i) = \frac{e^{z_i / T}}{\sum_{j=1}^{K} e^{z_j / T}}$$

It’s important to note that temperature doesn’t change the model’s knowledge or understanding; it merely influences the style of text generation by allowing more or fewer words to be considered. Individual words are assigned a probability score based on the above function, and as T increases above 1, the probabilities become more evenly spread across the vocabulary, resulting in a more random selection of words. This also means that a model won’t produce more accurate information at a lower temperature; it will just express it more predictably.

In that sense, temperature, as used in an LLM, is a way to control the trade-off between randomness and predictability in the generated text.
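The effect of dividing the logits by T before applying softmax can be sketched in plain Python. The function name `softmax_with_temperature` and the three logit values are assumptions chosen for illustration. Subtracting the largest scaled logit before exponentiating is a common numerical-stability step that leaves the probabilities unchanged.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Convert raw scores into probabilities, scaling by temperature first."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    # Subtracting the maximum keeps exp() numerically stable without
    # changing the resulting probabilities.
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate words

for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print("T =", t, [round(p, 3) for p in probs])
```

At the lower temperature, most of the probability concentrates on the highest-scoring word. At the higher temperature, the three probabilities move closer together, so a sampled word is more likely to be one of the less probable candidates.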

Adjusting hyperparameters

Hyperparameters are manually adjusted, which is their main defining characteristic. Adjusting hyperparameters in an LLM significantly impacts its training process and the quality of its output.

Hyperparameters are the actual configuration settings used to structure the learning process and model architecture. They aren’t learned from the data but are set before the training process begins.

For example, the learning rate is the amount by which parameters are adjusted during each iteration, which an engineer controls manually. This is a crucial hyperparameter in training neural networks because, though a higher learning rate might speed up learning, it can also overshoot the optimal values. Conversely, while setting a low learning rate may prevent overshooting, it can also make training inefficiently slow.
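The trade-off can be seen on a deliberately simple problem. The sketch below assumes a one-parameter function, f(w) = w², whose minimum is at w = 0, and runs the same number of gradient descent steps with three hand-picked learning rates. The function name and the rate values are chosen only for illustration.

```python
def gradient_descent(learning_rate, steps=20, start=1.0):
    """Minimize f(w) = w**2, whose gradient is 2*w and whose minimum is w = 0."""
    w = start
    for _ in range(steps):
        w -= learning_rate * 2 * w
    return w


too_low = gradient_descent(0.001)   # barely moves away from the start
reasonable = gradient_descent(0.1)  # steadily approaches the minimum
too_high = gradient_descent(1.1)    # overshoots further on every step

print("too low:", too_low)
print("reasonable:", reasonable)
print("too high:", too_high)
```

With the smallest rate, the parameter is still close to its starting value after 20 steps. With the largest, each step jumps past the minimum and lands farther away than before.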

The batch size refers to the number of training examples used in one iteration of model training. Manually selecting a larger batch size provides a more accurate estimate of the gradient but requires more memory and computational power. Smaller batch sizes can make the training process less stable but often lead to faster convergence.

Another important hyperparameter is the number of epochs, or the number of times the entire training dataset is passed forward and backward through the neural network. Too few epochs can lead to underfitting, while too many can lead to overfitting.

The manually configured model size is itself another crucial hyperparameter. Larger models with more layers and units can capture more complex patterns and have higher representational capacity. However, they also require more data and computational resources to train and are more prone to overfitting.

There are a variety of other hyperparameters as well, such as the optimizer, sequence length, weight initialization, and so on, each contributing to the model’s accuracy, efficiency, and ultimately the quality of the results the model generates.
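In practice, hyperparameters like these are often collected into a single configuration object that is fixed before training begins. The following is a minimal sketch using a Python dataclass. The class name, field names, and default values are all illustrative assumptions, not recommended settings.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    """Settings fixed before training starts (all values are illustrative)."""
    learning_rate: float = 1e-4
    batch_size: int = 32
    num_epochs: int = 3
    num_layers: int = 12
    sequence_length: int = 512
    optimizer: str = "adam"


def total_update_steps(config, num_examples):
    """Number of parameter updates: batches per epoch times epochs."""
    batches_per_epoch = math.ceil(num_examples / config.batch_size)
    return batches_per_epoch * config.num_epochs


config = TrainingConfig()
print(total_update_steps(config, num_examples=1000))
```

The helper shows how two of these settings interact: for a fixed dataset, a larger batch size means fewer parameter updates per epoch, and more epochs mean more updates overall.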

Instruction tuning and prompt engineering

Instruction tuning is an approach to training LLMs that focuses on enhancing the model’s ability to follow and respond to natural language user-given instructions, commands, and queries. This process is distinct from general model training and designed to improve the model’s performance in task-oriented scenarios.

In essence, instruction tuning refines an LLM’s ability to understand and respond to a wide range of instructions, making the model more versatile and user-friendly for various applications.

Instruction tuning involves fine-tuning the model on a dataset consisting of examples where instructions are paired with appropriate responses. These datasets are crafted to include a variety of tasks and instructions, from simple information retrieval to complex problem-solving tasks. While general training of an LLM involves learning from a vast body of text to understand language and generate relevant responses, instruction tuning is more targeted. It teaches the model to recognize and prioritize the user’s intent as expressed in the instruction.
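To make the idea of instruction and response pairs concrete, here is a minimal sketch of how such a dataset might be represented and turned into training text. The two examples and the `TEMPLATE` layout are assumptions made for illustration. Real instruction-tuning datasets are far larger, and the exact template varies by model.

```python
# Hypothetical instruction/response pairs; real datasets are far larger.
examples = [
    {
        "instruction": "Summarize: The router rebooted twice overnight.",
        "response": "The router restarted two times during the night.",
    },
    {
        "instruction": "Translate to French: Good morning.",
        "response": "Bonjour.",
    },
]

# An assumed template; actual templates vary by model and dataset.
TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n{response}"


def format_example(example):
    """Join one instruction and its target response into a training string."""
    return TEMPLATE.format(**example)


training_texts = [format_example(e) for e in examples]
print(training_texts[0])
```

During fine-tuning, the model would be trained to produce the response portion of each string given the instruction portion.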

This specialized tuning makes the LLM more intuitive and effective in interacting with users. Users can give commands or ask questions in natural language, and the model is better equipped to understand and fulfill these specific requests.

Part of instruction tuning can involve showing the model specific examples of how to carry out various tasks. This could include not just the instruction but also the steps or reasoning needed to arrive at the correct response. Instruction tuning also involves a continuous learning approach, in which the model is periodically updated with new examples and instructions to keep its performance in line with user expectations and evolving language use.

Special attention is given in the instruction tuning process to train the model in handling sensitive topics or instructions that require ethical considerations. The aim is to guide the model towards safe and responsible responses.

User feedback

Like instruction tuning, incorporating user feedback into the model’s training cycle can help identify and correct hallucinations. One widely used form of this is reinforcement learning from human feedback, or RLHF. When actual human users point out inaccuracies, these instances can be used as learning opportunities for the model.

Usage

Prompt engineering

Prompt engineering is the process of a user carefully crafting their prompts to guide or influence the model’s responses more effectively. Ultimately, it’s finding the best way to phrase or structure a question or request to achieve the desired outcome, whether generating content, extracting information, solving a problem, or engaging in dialogue. Therefore, prompt engineering can significantly affect the accuracy and relevance of the output.

Prompt engineering involves creating input prompts for the LLM that are specific, have sufficient context, and are structured logically. Truly effective prompt engineering requires understanding how the model processes language and generates text. This helps formulate the actual prompts to align with the model’s training and language patterns.

It’s important to understand that prompt engineering should be an iterative process in which prompts are refined based on successive responses, ultimately learning through trial and error. A balance is needed between being specific enough to guide the model and being flexible enough to allow the model to engage in some manner of creativity.

Also important is providing sufficient context or examples along with the prompt, such as background information, the desired tone, or the desired response format. Some scenarios the LLM must deal with have minimal context or no examples, so the prompt input must compensate for the lack of model training for that specific scenario.
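The difference between a vague prompt and a structured one can be sketched with a small helper that assembles the pieces described above. The function name `build_prompt`, its parameters, and the section labels are hypothetical. They show one possible way to organize a prompt, not a required format.

```python
def build_prompt(task, context="", examples=(), response_format=""):
    """Assemble a prompt from optional context, examples, and format hints."""
    parts = []
    if context:
        parts.append(f"Context: {context}")
    for question, answer in examples:
        parts.append(f"Example input: {question}\nExample output: {answer}")
    parts.append(f"Task: {task}")
    if response_format:
        parts.append(f"Respond in this format: {response_format}")
    return "\n\n".join(parts)


vague = build_prompt("Tell me about the outage.")

specific = build_prompt(
    task="Summarize the likely cause of the outage in two sentences.",
    context="Interface errors rose on the core switch before the outage.",
    examples=[("Link flapped on port 3.", "Probable cause: faulty cable on port 3.")],
    response_format="Probable cause: <one line>",
)

print(vague)
print("---")
print(specific)
```

Keeping the pieces separate also supports the iterative refinement described earlier, since the context, examples, or format can each be adjusted independently between attempts.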

Training cost

Training costs for LLMs are significant in terms of the expenditures for computational, hardware, power and cooling, networking, and environmental resources. Sophisticated AI models, such as modern LLMs, require bespoke data centers with tremendous computational power to train the actual models.

The hardware used for training LLMs is generally high-end, typically involving powerful GPUs, high-speed storage, larger memory, and extremely high bandwidth network devices. Modern LLMs often have billions of parameters, so processing them in a reasonable amount of time requires substantial computational resources along with the space, power, cooling, and other operational costs associated with large, high-performance data centers.

Rather than building their own data centers, some organizations use cloud services like AWS, Google Cloud, or Azure for training, which incurs ongoing financial costs. When an organization builds its own data center specifically to run AI workloads, the total cost can easily run into the hundreds of millions or billions of dollars.

As mentioned above, ongoing operational costs include the power and cooling necessary to run tens of thousands of GPUs for weeks or months at a time. As models are re-trained with new parameters and data, there is a periodic but ongoing cost to collect, store, and format the enormous amount of data needed to train LLMs.

Current research

Research in the last 10 to 15 years has focused on the application of ML models and learning algorithms to learn from a vast dataset, i.e., the internet, which is generally unstructured, often unlabeled, and not necessarily well-annotated. Accuracy is the foundation for an LLM’s usefulness, so much of current research is focused on achieving better results from this dataset.

However, using the internet as the body of data for NLP can lead to unintended or undesired results simply because of the input’s unreliability. Hence, contemporary researchers have been focusing on finding methods to improve the accuracy of the results of LLM applications.

Also, a primary focus of current research is advancing statistical language processing to improve the interaction between a human and a computer. The idea is to make the computer better understand human prompts and to make its responses increasingly well-developed and immediately relevant.

One significant development is the creation of specialized LLMs for specific fields, such as the GatorTronGPT model designed for biomedical natural language processing, clinical text generation, and healthcare text evaluation. “Owl” was introduced in 2023 as an LLM explicitly designed for IT operations.

Recent advancements in multimodal capabilities are being explored, where LLMs like OpenAI’s GPT-4 are trained on text and sensory inputs like images. Researchers aim to ground the models more firmly in human experiences, potentially leading to a new level of understanding and new applications for LLMs.

However, researchers continue to debate fundamental questions about the nature of understanding in LLMs. Some question whether LLMs truly “understand” language or if they’re simply proficient in next-word prediction without a true grasp of meaning. This question, though, presupposes an understanding of the nature of knowledge and how meaning can be derived from text. Therefore, the debate goes beyond LLMs to a broader context of the relationship between humans and AI.

Current uses

LLMs and NLP, in particular, have burgeoned into a field with diverse applications across various technical and non-technical domains.

Email providers have long used NLP for spam detection to distinguish between spam and non-spam emails programmatically. Furthermore, most word processing software, text editors, and search engines use some form of autocomplete, spelling, and grammar correction.

LLMs have become valuable for identifying topics within a body of text, aiding in organizing and categorizing content. This has become a major use case among many who use LLMs on a daily basis. Beyond organizing and summarizing content, modern LLMs are now being used to generate coherent and contextually relevant text based on queries and requests. This can include prose, poetry, computer programming code, and many other text types.

The widely popular ChatGPT is an example of this focus, but we have also seen the proliferation of very sophisticated NLP-powered chatbots to facilitate automated customer service and interactive experiences.

There are a variety of LLMs created for specific fields. However, the most widely used and well-known models today are GPT-4 by OpenAI, LLaMA by Meta, PaLM by Google AI, BERT, also developed by Google, and Amazon Q by Amazon. These LLMs, along with the many not mentioned here, each have their own strengths and weaknesses. Therefore, one model may be more suitable for a given scenario than another.

Applications to IT operations

LLMs are beginning to provide various benefits to IT operations, and as both LLMs and how they are integrated into the IT tech stack mature, their applications to IT operations will expand.

We’ve seen chatbots and virtual assistants used by IT ticketing systems to interact with an end-user and facilitate automated information gathering to assist in subsequent troubleshooting. As LLMs become more sophisticated and are trained on IT domain data, we’ll see them used more extensively in these types of automated support applications.

One of the earliest applications of LLMs to actual operations has been to search large databases of documentation, summarize texts, and even generate code upon request. This will quickly evolve into LLMs also updating documentation, managing knowledge and configuration bases, and maintaining code versions.

Additionally, with this growing body of training data, LLMs will perform code reviews to search for potential errors and suggest more efficient ways to consolidate lines of code. This can significantly increase the speed of deployment, decrease errors, and improve operations overall.

In terms of data analysis, consider the sheer volume of logs and telemetry in a modern tech stack. LLMs are already being used in conjunction with underlying querying engines to transform data into a unified format and structure and perform advanced data analysis on what otherwise is very diverse data in type, scale, and format.

For example, an LLM can interrogate a database of IT domain information such as device logs, application code, network flow logs, device metrics, cloud information, information from ticketing systems, and so on. By representing all this data in a unified structure, a model can be applied to analyze patterns and trends, predict failures, identify possible security breaches, and generally assist in the daily operations of engineers, developers, security analysts, and so on.
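As a rough illustration of what a unified structure can mean, the sketch below converts two differently shaped records into one common shape. The `Event` fields, the syslog-style line format, and the flow-record keys are all assumptions invented for the example. Real telemetry formats are more varied and would need more careful parsing.

```python
from dataclasses import dataclass


@dataclass
class Event:
    """A hypothetical unified record shared by all telemetry sources."""
    timestamp: str
    source: str
    device: str
    message: str


def from_syslog(line):
    """Parse an assumed 'timestamp device message' syslog-style line."""
    timestamp, device, message = line.split(" ", 2)
    return Event(timestamp, "syslog", device, message)


def from_flow(record):
    """Convert an assumed flow-record dictionary into the unified shape."""
    message = f"{record['src']} -> {record['dst']} {record['bytes']} bytes"
    return Event(record["ts"], "flow", record["exporter"], message)


events = [
    from_flow({"ts": "2024-01-01T10:00:05Z", "exporter": "edge-rtr1",
               "src": "10.0.0.5", "dst": "10.0.1.9", "bytes": 1200}),
    from_syslog("2024-01-01T10:00:00Z core-sw1 Interface Gi0/1 changed state to down"),
]

# Once unified, records from different sources can be sorted and filtered together.
events.sort(key=lambda e: e.timestamp)
for event in events:
    print(event)
```

With every record in the same shape, a single query or model can work across sources that originally had nothing in common.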

In daily operations, once a model is trained on domain-specific data, we will see the automated generation of scripts and workflows to diagnose and resolve IT incidents. Intelligent automated remediation has always been a goal for operations professionals, and LLMs will play a major role in getting there.

Conclusion

Large language models have moved the needle forward for AI’s practical, daily application. Machine learning, neural networks, transformers, and so on have been part of an iterative journey to revolutionize how humans interact with data.

Today, LLMs are focused on the interaction between computers and humans using natural human language to enable a natural, simplified, and enhanced human interaction with data, the lifeblood of our modern world. This is completely changing the dynamic of how humans interact with computer systems and, in a more existential sense, our interaction with information.

Significant time, research, attention, and resources are being spent on the development and applications of this technology, so the progress of LLMs and NLP is far from finished. As the capabilities and complexities of these models expand, so will the applications and use cases for them.

And lastly, as a society, we must also consider the risks and effects on people and culture as a whole, so it’s essential for the research community in academia, business, and regulatory bodies to work together in navigating the advancements in LLMs.