Summary

At this year’s AWS Summit in New York, agentic AI took center stage with Amazon’s launch of Bedrock AgentCore — a powerful step toward turning AI prototypes into scalable, production-ready applications. From low-code workflows to turnkey infrastructure, a new generation of tools is enabling teams of all skill levels to build, deploy, and monitor AI agents faster than ever. In this post, learn about the shift from experimental AI to enterprise-ready systems, and why network intelligence is the glue holding it together.

Last week’s AWS Summit in New York put agentic AI squarely in the spotlight. The headline launch, Amazon Bedrock AgentCore, was all about delivering a turnkey infrastructure stack that lets development teams move an AI-agent proof-of-concept into a secure, scalable service in days instead of months.

And that was a central theme for this year’s AWS Summit among many of the vendors: helping folks at any level of engineering skill easily and quickly transition from ideation to prototype and finally to production-ready AI apps.

Breaking down barriers

For years, if you had an idea for an application, you’d need to either hire a developer (or team of developers) or have enough skill to build it yourself. And as anyone who has worked in an enterprise IT organization knows, getting the code down is just part one. There are also security requirements to address, along with integration with existing tools and workflows, ongoing support, licensing, and more.

Building an application requires skill, effort, and usually lots of money.

It used to be that you needed a team of developers, data engineers, data scientists, and infrastructure engineers, among others, to build a useful and functional AI application. Today, however, that’s all changing as many of the barriers to entry are being removed, allowing almost anyone to dive in and bring their ideas to life.

Booth after booth at the AWS Summit in New York featured a presentation of some tool or platform that involved AI agents taking natural-language prompts and turning them into usable code. And this was more than vibe coding with ChatGPT.

A new cohort of Agents-as-a-Service

What we’re seeing is an entire cohort of companies, some that have been around for a generation, and some brand new, offering agentic-AI workflow solutions to automate and simplify building whatever you want in a low-code or no-code environment.

We’ve had some examples of this for some time, with platforms like n8n, CrewAI, LangGraph, and others. However, this year’s AWS Summit seemed to be a rallying event for dozens, if not hundreds, of additional companies to take advantage of AI agents and LLMs as-a-service.

Amazon Bedrock AgentCore is a notable example of a step in this direction from a large, well-known vendor. Still, there were also numerous examples from companies I had never heard of, including Glean, Digitate, Automox, Camunda, and others.

What we have now is a growing marketplace of AI agents, tunable models, libraries, and communities that anyone, regardless of technical ability, can tap into. And this is important because, as much as AI agents, LLMs, and their workflows make building new apps easier, you still have to build a complex AI workflow in the first place.

Why this matters

Historically, organizations that built an AI agent in a hackathon or as a proof-of-concept spent the next quarter recreating basics, such as session stores, IAM roles, and API wrappers, before the first customer ever touched the system. Many vendors providing AI agents as a service ship those pieces either pre-built as templates or as completely managed services, thereby shrinking the time-to-market and allowing teams to concentrate on domain logic rather than plumbing.

AgentCore, in particular, offers agent-initiated API calls that are scoped by default. Developers map a workload identity to specific AWS services or SaaS apps, and AgentCore stores user consent tokens in its own vault. This design addresses many of the least-privilege requirements we apply in typical software development without requiring custom middle-tier code.
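To make the idea of scoped-by-default calls concrete, here is a minimal sketch of a deny-by-default check in plain Python. It is not the AgentCore API: `WorkloadIdentity`, `is_allowed`, `call_tool`, and the `support-agent` allow-list are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class WorkloadIdentity:
    """Hypothetical identity for one agent, with an explicit allow-list."""
    name: str
    allowed_actions: frozenset = field(default_factory=frozenset)


def is_allowed(identity, service, action):
    """Deny by default: only exact (service, action) pairs on the list pass."""
    return (service, action) in identity.allowed_actions


def call_tool(identity, service, action):
    """Check the identity's scope before running an agent-initiated call."""
    if not is_allowed(identity, service, action):
        raise PermissionError(f"{identity.name} may not call {service}:{action}")
    # A real system would dispatch the request here; this sketch only reports it.
    return f"{service}:{action} ok"


# Illustrative allow-list: the agent can read objects and tickets, nothing else.
support_agent = WorkloadIdentity(
    name="support-agent",
    allowed_actions=frozenset({("s3", "GetObject"), ("crm", "read_ticket")}),
)
```

With this allow-list, `call_tool(support_agent, "s3", "GetObject")` succeeds, while a request for `("s3", "DeleteObject")` raises `PermissionError` because nothing grants it. The point of the design is that new permissions have to be added explicitly rather than removed after the fact.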

With the increasing popularity and growth of resources and communities like Hugging Face, engineers are becoming more selective in choosing models for their workflows. Rather than building everything around a single foundation model, such as GPT or Claude, this new class of AI vendors is much more framework-agnostic, offering the ability to support any foundation or local model. That means you can combine Claude, Llama, and proprietary models in the same workflow.

Why network intelligence matters more than ever

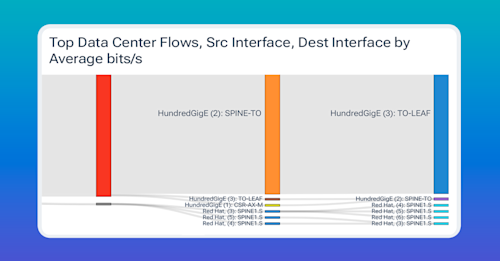

Even with the infrastructure baked in, AI agents still depend on reliable network paths, especially when you’re stitching together S3 Vectors, OpenSearch, on-prem databases, third-party SaaS, and more. This is where the Kentik Network Intelligence Platform comes in, providing traffic-level insights that help teams maintain efficient, incident-free, and cost-effective paths.

For example, Kentik ingests AWS VPC flow logs alongside on-prem and multi-cloud telemetry, building a unified view of how agent traffic traverses Direct Connect, Transit Gateway, or public internet routes.

Since hybrid and distributed systems are now the norm, Kentik can highlight high-volume inter-AZ transfers or unnecessary egress, which lets network teams adjust routing policies or insert storage endpoints that reduce data-movement costs.
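As a rough illustration of the kind of analysis involved in spotting heavy inter-AZ transfers, the sketch below sums bytes per pair of availability zones. It is not how Kentik implements this. The record shape (source AZ, destination AZ, bytes) is a simplifying assumption, as are the sample values and the function names; real flow logs would need parsing and AZ resolution first.

```python
from collections import defaultdict

# Hypothetical, simplified flow records: (source AZ, destination AZ, bytes).
FLOWS = [
    ("us-east-1a", "us-east-1a", 5_000),
    ("us-east-1a", "us-east-1b", 120_000),
    ("us-east-1b", "us-east-1a", 80_000),
    ("us-east-1a", "us-east-1c", 1_000),
]


def inter_az_bytes(flows):
    """Sum bytes per unordered AZ pair, skipping traffic that stays in one AZ."""
    totals = defaultdict(int)
    for src_az, dst_az, num_bytes in flows:
        if src_az != dst_az:
            totals[tuple(sorted((src_az, dst_az)))] += num_bytes
    return dict(totals)


def heavy_pairs(flows, threshold_bytes):
    """Return AZ pairs whose cross-AZ volume meets the threshold, largest first."""
    totals = inter_az_bytes(flows)
    heavy = [(pair, total) for pair, total in totals.items() if total >= threshold_bytes]
    return sorted(heavy, key=lambda item: item[1], reverse=True)
```

Calling `heavy_pairs(FLOWS, 100_000)` on the sample data returns only the `us-east-1a` / `us-east-1b` pair with 200,000 bytes, since traffic in both directions is counted together and the other cross-AZ pair falls below the threshold.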

And though I saw a huge interest in running AI workloads in Amazon Bedrock specifically, it was clear that the industry is embracing interoperability among diverse services and tools to make the AI magic happen. For engineers managing these production systems, that means baselining and monitoring performance by running continuous synthetic tests against key endpoints. For Amazon, those could be Bedrock inference APIs, S3 Vectors buckets, or internal REST services. The goal is to catch latency spikes and packet drops before they degrade application performance and the user experience.
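One simple way to turn synthetic test results into alerts is to compare each new latency sample against a baseline. The sketch below assumes the latencies have already been collected by some probe, flags anything more than three standard deviations above the baseline mean, and treats `None` as a failed probe. The function names, the three-sigma rule, and the sample numbers are illustrative assumptions, not a description of any particular product.

```python
import statistics


def is_spike(baseline_ms, sample_ms, sigmas=3.0):
    """Flag a sample more than `sigmas` standard deviations above the baseline mean.

    The baseline needs at least two samples for a standard deviation to exist.
    """
    mean = statistics.mean(baseline_ms)
    stdev = statistics.stdev(baseline_ms)
    return sample_ms > mean + sigmas * stdev


def check_results(baseline_ms, results):
    """Classify each probe result; None stands for a failed or dropped probe."""
    report = []
    for sample in results:
        if sample is None:
            report.append("failed")
        elif is_spike(baseline_ms, sample):
            report.append("spike")
        else:
            report.append("ok")
    return report


# Illustrative baseline of recent latencies, in milliseconds, for one endpoint.
BASELINE_MS = [42.0, 40.5, 43.2, 41.1, 39.8, 42.7]
```

For example, `check_results(BASELINE_MS, [41.9, None, 95.0])` returns `["ok", "failed", "spike"]`. A fixed threshold would be simpler, but a baseline-relative rule adapts to endpoints that are naturally slower or faster than others.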

Key takeaways

AI, specifically in the form of LLMs, agents, and the systems that tie everything together, isn’t a science experiment anymore. Rather than asking, “Can AI help me build my app?” folks are now asking, “How fast can we go from demo to production?”

As we continue down this road, I suspect we’ll keep finding new areas to improve and new problems to solve, just as we would with any new technology. Even at last week’s AWS Summit, I spoke with technologists asking questions like:

- How can we avoid being locked into a single framework?

- Which team is responsible for monitoring and maintaining the underlying workflows?

- How do we combine data lineage, application observability, and traditional network visibility into a single view?

Agentic AI is rapidly evolving from an experimental capability to an essential one. With an entire cohort of new companies delivering operational scaffolding and Kentik offering network-level intelligence, organizations can deploy sophisticated agents that are not only capable but also secure, performant, and cost-efficient – ready for real-world demand on day one.