When transitioning from physical infrastructure to the cloud, it’s easy to think that your networks will instantly be faster, more reliable, and produce windfalls of cost savings overnight. Unfortunately, this wishful line of thinking fails to account for some of the complexities of cloud networking and is one of the biggest drivers of the cloud deployment mistakes we see.

Cloud networking often introduces concepts that are new to teams, such as VPCs, cloud interconnects, and multiple availability zones and regions, and this lack of familiarity can lead to questionable implementations. On top of this, these networks connect to other clouds and the internet, forming hybrid and multi-cloud architectures. Add the rapid pace of deployment and limited visibility into how cloud resources are being used, and it’s easy to make costly mistakes.

After years of helping clients work through these lessons, we’ve compiled this list to help you avoid the mistakes others have made when transitioning to the cloud.

Mistake #1: Duplicate services and unknown dependencies

This mistake commonly happens when multiple siloed teams jump into the cloud without giving much thought to shared architecture. Imagine separate teams building separate applications in the cloud. Each team spins up cloud resources such as compute, storage, and network components. Many of these resources could be reused and shared across teams; some, such as DNS, databases, and load balancers, are obvious candidates for standard shared services.

But it isn’t just cloud resources that get duplicated. We also see duplicate custom-developed microservices that perform precisely the same function. With every team running fast in parallel, it’s not hard to see how they reinvent the wheel again and again. Soon, the cloud environment becomes a tangled web of interdependencies. Without some kind of visibility, the results include brittle architecture, wasted development effort, and massive cloud overspending.

Here is a scenario to illustrate:

Team A sets up a DNS service for their apps. Not knowing about Team A’s DNS service, Team B creates a duplicate DNS service for their own app. Now their organization is paying twice for instances that could easily be collapsed into shared infrastructure.

Now imagine that a third team (Team C) also needs a DNS service for its app. Team C begins using Team B’s DNS service without Team B’s knowledge. Later, Team B learns about Team A’s DNS service, switches to it, and shuts down its own. Now Team C’s app suffers an outage because a service it depended on disappeared without warning.
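The outage in this scenario comes from acting on a dependency nobody had recorded. As a minimal sketch, assuming a hypothetical inventory that maps each service to its owning team and function, plus dependency data gathered from something like flow logs, a few lines of Python can flag duplicate services and refuse to approve a shutdown while consumers remain. The service names, app names, and data shapes are illustrative, not any particular tool's API.

```python
from collections import defaultdict

# Hypothetical inventory: service name -> (owning team, function it provides)
SERVICES = {
    "dns-team-a": ("Team A", "dns"),
    "dns-team-b": ("Team B", "dns"),
}

# Hypothetical observed dependencies: consuming app -> services it calls
DEPENDENCIES = {
    "app-a": ["dns-team-a"],
    "app-c": ["dns-team-b"],  # Team C quietly relies on Team B's DNS
}


def find_duplicates(services):
    """Return {function: [service names]} for functions provided more than once."""
    by_function = defaultdict(list)
    for name, (_team, function) in services.items():
        by_function[function].append(name)
    return {f: sorted(names) for f, names in by_function.items() if len(names) > 1}


def dependents_of(service, dependencies):
    """Return the sorted list of apps that call the given service."""
    return sorted(app for app, calls in dependencies.items() if service in calls)


def safe_to_decommission(service, dependencies):
    """A service is safe to shut down only if nothing still depends on it."""
    return not dependents_of(service, dependencies)


if __name__ == "__main__":
    print(find_duplicates(SERVICES))  # {'dns': ['dns-team-a', 'dns-team-b']}
    print(dependents_of("dns-team-b", DEPENDENCIES))  # ['app-c']
    print(safe_to_decommission("dns-team-b", DEPENDENCIES))  # False
```

With this check in place, Team B's shutdown would have been blocked until Team C migrated to Team A's service.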

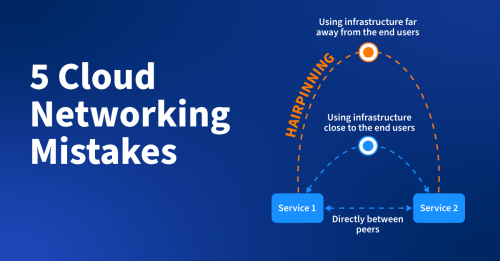

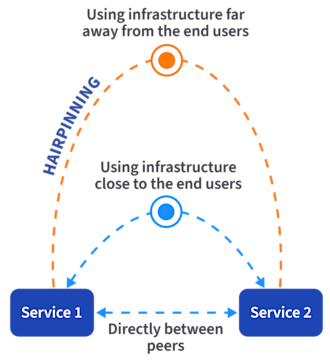

Mistake #2: Traffic or request hairpinning

What is hairpinning? It happens when services that should communicate over short, fast, and cheap network paths end up communicating over long, expensive paths with lots of latency. There are multiple causes and flavors of this problem. Let’s discuss two common examples here.

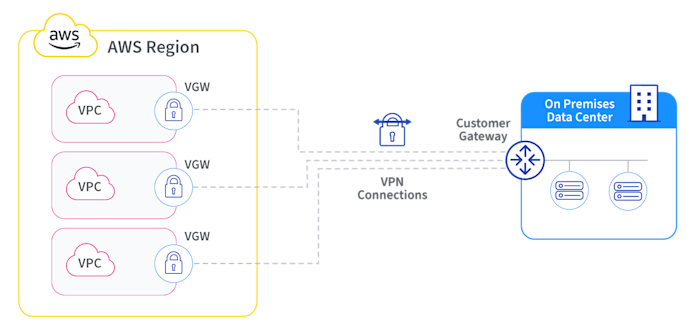

In the first scenario, imagine two services sitting in separate zones within a physical data center. Through poor architecture choices or simple IP routing misconfiguration, the communication path between those services traverses cloud interconnects and VPCs, instead of the local data center network fabric. Every time those services communicate, they’re experiencing much higher latency and racking up expensive per-GB cloud data transfer charges. The inverse is also possible: two cloud-deployed services communicating via a network path that traverses a physical data center.
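Spotting this kind of hairpin comes down to comparing where the endpoints live with where the path actually goes. A minimal sketch, assuming each hop on an observed path (for example, from traceroute output joined against an IP address inventory) has been tagged with a hypothetical "on-prem" or "cloud" location:

```python
ON_PREM = "on-prem"
CLOUD = "cloud"


def is_hairpinned(src_location, dst_location, hop_locations):
    """Flag a path whose endpoints share a location but whose hops leave it.

    src_location / dst_location: where the two services run (ON_PREM or CLOUD).
    hop_locations: location tag for each hop on the observed path.
    """
    if src_location != dst_location:
        # Endpoints live in different places, so crossing over is expected.
        return False
    return any(hop != src_location for hop in hop_locations)


# Two on-prem services whose traffic detours through a VPC and back
print(is_hairpinned(ON_PREM, ON_PREM, [ON_PREM, CLOUD, CLOUD, ON_PREM]))  # True
# The same services talking over the local data center fabric
print(is_hairpinned(ON_PREM, ON_PREM, [ON_PREM, ON_PREM]))  # False
```

The same check covers the inverse case: two cloud services whose path includes an on-prem hop are also flagged.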

In the second scenario, imagine a service chain consisting of a web front-end, application server, and database backend. A DevOps team has migrated the application server to the cloud, but the web front-end and database are still in the legacy data center. Now every web request results in a chain of calls that traverse cloud interconnects twice, resulting in poor performance and cost exposure.
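Counting crossings per request makes the cost of a partial migration visible. A minimal sketch, assuming each service in the chain is tagged with a hypothetical "on-prem" or "cloud" location:

```python
def interconnect_crossings(chain, locations):
    """Count calls in a request chain that cross between on-prem and cloud.

    chain: ordered service names a request flows through.
    locations: mapping of service name -> "on-prem" or "cloud".
    Only the request direction is counted; each response crosses back again.
    """
    return sum(
        1
        for caller, callee in zip(chain, chain[1:])
        if locations[caller] != locations[callee]
    )


chain = ["web", "app", "db"]
before = {"web": "on-prem", "app": "on-prem", "db": "on-prem"}
after = {"web": "on-prem", "app": "cloud", "db": "on-prem"}
print(interconnect_crossings(chain, before))  # 0
print(interconnect_crossings(chain, after))   # 2
```

Moving only the middle tier turns a request that never left the data center into one that crosses the interconnect twice on the way in, and again on the way back.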

Without visibility, scenarios like these can persist indefinitely.

Mistake #3: Unnecessary inter-region traffic

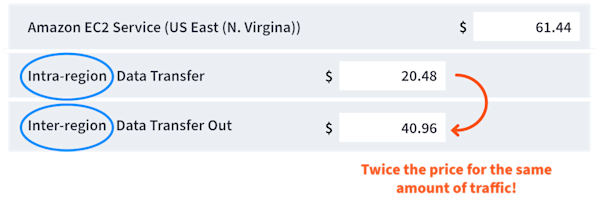

It’s relatively common knowledge that most cloud components are priced on a metered, pay-as-you-go model, but the pricing details of specific components often get lost in the weeds. Did you know that inter-region data transfer costs significantly more per GB than intra-region data transfer? According to the AWS pricing calculator, inter-region data transfer is twice the price of intra-region data transfer! The gap is even more dramatic on Google Cloud, where egress between regions is almost 10x as expensive as egress within a region.

It’s easy for cloud architects and developers to build applications or infrastructure in ways that generate significant, unnecessary traffic between regions, which can have a dramatic impact on the bottom line.

To streamline cloud network costs, we recommend the following three steps:

- Identify: Get a view of the significant contributors to inter-region and internet egress traffic. Answer questions such as: What applications are causing that big bandwidth bill? Which application teams are responsible for this traffic? Are we exposed to unnecessary data transfer charges?

- Relocate: Evaluate which workloads can be moved within the infrastructure, and place them to minimize inter-region communication.

- Consolidate: Carefully examine all workloads, understand the network traffic they generate, and combine what can be merged to save more resources.
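The Identify step is essentially an aggregation over flow data. A minimal sketch, assuming flow records have already been enriched with the owning app, team, and source/destination regions; the `Flow` record shape and the sample values are hypothetical, since real flow logs use different field names:

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Flow:
    """Hypothetical, simplified flow record; real flow logs use different fields."""
    app: str
    team: str
    src_region: str
    dst_region: str
    byte_count: int


def inter_region_bytes_by(flows, key):
    """Sum bytes of flows whose source and destination regions differ, grouped by
    the given attribute ("app" or "team"), largest contributor first."""
    totals = Counter()
    for flow in flows:
        if flow.src_region != flow.dst_region:
            totals[getattr(flow, key)] += flow.byte_count
    return totals.most_common()


flows = [
    Flow("checkout", "payments", "us-east-1", "us-west-2", 500),
    Flow("checkout", "payments", "us-east-1", "us-east-1", 900),  # intra-region, ignored
    Flow("search", "discovery", "us-east-1", "eu-west-1", 200),
    Flow("search", "discovery", "us-east-1", "eu-west-1", 100),
]
print(inter_region_bytes_by(flows, "team"))  # [('payments', 500), ('discovery', 300)]
```

Grouping by `"app"` instead answers the "which application" question; the ranked output is a natural input to the Relocate and Consolidate steps.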

Mistake #4: Accessing cloud services over the internet from local VPCs

Everyone building apps in the cloud knows you can supercharge your efforts by using native cloud services (e.g., AWS S3, Google Cloud Pub/Sub, Azure AD) as building blocks rather than starting from scratch. These services are convenient and save time, but they come with per-hour, per-gigabyte, or per-transaction price tags. It’s also important to remember that data egress costs can add up quickly at scale. The good news is that savvy operators can considerably decrease these costs.

Some background helps to understand these costs better. Cloud providers make their services reachable from the internet using IP address ranges that represent services running in a single region. For example, the S3 service in the AWS us-west-1 region is advertised to the internet from the 3.5.160.0/22 address range. To use these services, clients resolve DNS names, like [sms.eu-west-3.amazonaws.com](http://sms.eu-west-3.amazonaws.com/), to IP addresses within the ranges where the services are hosted, then send traffic to the address returned by the query.
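To see which provider range (and therefore which region) a resolved address belongs to, you can check it against the provider's published list. AWS, for example, publishes its ranges as a JSON file, ip-ranges.json. The sketch below assumes a document with that general shape has already been loaded (for example with `json.load`); the one-entry sample simply reuses the S3 range mentioned in this paragraph and is illustrative only.

```python
import ipaddress


def lookup_service_ranges(ip, ranges_doc):
    """Return (service, region) pairs whose published IPv4 prefix contains ip.

    ranges_doc is assumed to follow the general shape of AWS's ip-ranges.json:
    {"prefixes": [{"ip_prefix": "...", "region": "...", "service": "..."}, ...]}
    """
    addr = ipaddress.ip_address(ip)
    return [
        (entry["service"], entry["region"])
        for entry in ranges_doc.get("prefixes", [])
        if addr in ipaddress.ip_network(entry["ip_prefix"])
    ]


# Illustrative one-entry document built from the S3 range cited in the text
sample = {"prefixes": [{"ip_prefix": "3.5.160.0/22", "region": "us-west-1", "service": "S3"}]}
print(lookup_service_ranges("3.5.161.10", sample))  # [('S3', 'us-west-1')]
print(lookup_service_ranges("8.8.8.8", sample))     # []
```

A real file can list overlapping prefixes, so more than one match per address is possible.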

When cloud engineers stand up new VPCs, they must also set up routing rules that determine how traffic is forwarded toward non-local destinations. Traffic bound for the internet follows a default route that points to a gateway device, and traffic crossing that device is treated as internet egress traffic. This includes traffic that heads to the internet only to reach cloud services, even services in the same region or zone as your VPC instances. With sufficient volume, these costs add up over time.

Luckily, most cloud providers have released features commonly referred to as “endpoint services.” They’re designed to keep this traffic away from the public internet, reducing both data transfer costs and the security risks of sending traffic over the internet. Endpoint services allow users to configure local network interfaces with private IP addresses inside their VPC subnets.

These interfaces act as proxies for any traffic destined toward the configured endpoint services. As a result, traffic to these services stays local and private, with lower egress pricing.
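The routing behavior described above comes down to longest-prefix matching: the most specific route that contains the destination wins. A minimal sketch, assuming a hypothetical VPC route table with a 10.0.0.0/16 local CIDR and a default route to an internet gateway, and assuming the endpoint's private DNS is in effect so the service name resolves to the endpoint interface's private IP. The target labels are illustrative, not real resource identifiers.

```python
import ipaddress


def next_hop(dst_ip, route_table):
    """Longest-prefix match: return the target of the most specific matching route.

    route_table: list of (cidr, target) pairs; target names are illustrative labels.
    """
    addr = ipaddress.ip_address(dst_ip)
    best_net, best_target = None, None
    for cidr, target in route_table:
        net = ipaddress.ip_network(cidr)
        if addr in net and (best_net is None or net.prefixlen > best_net.prefixlen):
            best_net, best_target = net, target
    return best_target


routes = [("10.0.0.0/16", "local"), ("0.0.0.0/0", "internet-gateway")]

# Without an endpoint, the service name resolves to a public provider address...
print(next_hop("3.5.161.10", routes))  # internet-gateway
# ...with an endpoint, it resolves to a private IP inside the VPC subnet.
print(next_hop("10.0.1.25", routes))   # local
```

Nothing in the route table changes between the two cases; only the address the service name resolves to does, which is why the endpoint keeps the traffic off the default route.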

For example, traffic from a VPC in us-east-1 to an AWS service hosted in us-east-1 costs $0.02/GB. At modest volumes, that’s not a big deal. But when you transfer 50 TB per month to S3, these costs can quickly surpass $1,000/month. Setting up a VPC endpoint to direct this traffic to S3 will decrease your costs. VPC endpoint pricing includes $0.01/hour per interface, and data processed through the endpoint is charged at $0.01/GB. The same 50 TB sent to S3 over an AWS PrivateLink endpoint will cost approximately $520/month, roughly a 50% savings over standard internet egress charges.
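The arithmetic behind these figures can be reproduced in a few lines. This sketch uses only the rates quoted in this paragraph and assumes a single endpoint interface, 730 hours per month, and 1 TB = 1,024 GB; actual prices vary by region and change over time, so treat the defaults as placeholders.

```python
HOURS_PER_MONTH = 730  # assumption: average hours in a month
GB_PER_TB = 1024       # assumption: binary terabytes, which reproduces the figures in the text


def internet_path_cost(tb_per_month, per_gb=0.02):
    """Monthly cost of sending traffic to the service via the internet path."""
    return tb_per_month * GB_PER_TB * per_gb


def endpoint_cost(tb_per_month, interfaces=1, per_hour=0.01, per_gb=0.01):
    """Monthly cost via an endpoint: hourly interface charge plus per-GB processing."""
    return interfaces * per_hour * HOURS_PER_MONTH + tb_per_month * GB_PER_TB * per_gb


internet = internet_path_cost(50)
endpoint = endpoint_cost(50)
print(f"internet path: ${internet:,.2f}")  # internet path: $1,024.00
print(f"endpoint:      ${endpoint:,.2f}")  # endpoint:      $519.30
print(f"savings:       {1 - endpoint / internet:.1%}")  # savings:       49.3%
```

The hourly interface charge is nearly negligible at this volume; the savings come almost entirely from the lower per-GB rate. If you deploy interfaces in more than one availability zone, set `interfaces` accordingly.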

Read more about AWS VPCs in The Network Pro’s Guide to the Public Cloud.

Mistake #5: Using default internet traffic delivery

Using your cloud provider’s default internet egress is straightforward, but the costs can add up quickly, especially when your business needs to deliver tons of bits. On a per-GB basis, default cloud egress can cost more than ten times as much as traditional IP transit. Without considering and implementing other traffic delivery options, a cloud migration can result in a considerable billing surprise.

A simple first step is to inventory which apps deliver traffic to the internet and how much. Teams may deploy new apps using default internet egress because they aren’t aware of the cost impact. Discovery is critical for cost control.
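One way to start that inventory is to sum the bytes each app sends to globally routable destinations. A minimal sketch, assuming flow records have been reduced to hypothetical `(app, destination IP, bytes)` tuples; it relies on the standard library's `ipaddress` `is_global` check, which treats private and reserved ranges as non-internet:

```python
import ipaddress
from collections import defaultdict


def internet_egress_by_app(flows):
    """Sum bytes per app for flows whose destination is globally routable.

    flows: iterable of (app, dst_ip, byte_count) tuples (hypothetical shape).
    Public cloud-service addresses count here too, since that traffic also
    leaves via the internet path unless an endpoint is in place.
    """
    totals = defaultdict(int)
    for app, dst_ip, byte_count in flows:
        if ipaddress.ip_address(dst_ip).is_global:
            totals[app] += byte_count
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


flows = [
    ("video", "8.8.8.8", 700),
    ("video", "10.0.0.5", 1000),  # private destination, not internet egress
    ("api", "1.1.1.1", 200),
    ("api", "8.8.4.4", 50),
]
print(internet_egress_by_app(flows))  # [('video', 700), ('api', 250)]
```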

Here are some traffic delivery options that can reduce internet egress charges:

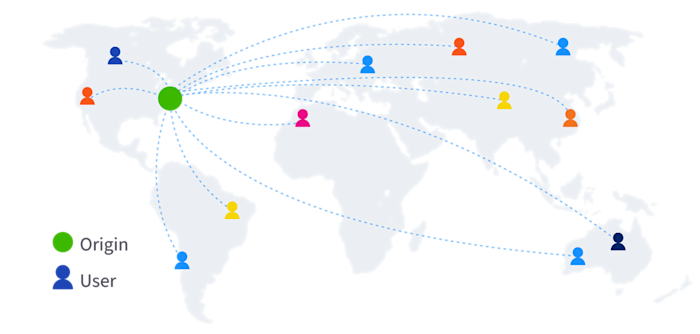

Leverage CDNs (content delivery networks): These services cache frequently requested objects on nodes distributed worldwide, serving that traffic to end users at a much lower per-GB cost than serving them directly from your origin servers, and with much better performance (i.e., lower latency). Cloud providers offer their own built-in CDN services, and third-party CDN providers are also available.

In-app packaging for mobile: For cloud services that interact with mobile apps, consider packaging large, relatively static objects as part of the app instead of serving them over the network.

Private egress: For services that generate lots of traffic, it can be more economical to backhaul internet egress over cloud interconnects to a PoP that’s well connected to plenty of relatively cheap IP transit. This is especially attractive for organizations that already have PoPs and transit in place for their traditional physical data centers.
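Whether private egress pays off depends on volume: the flat monthly costs of the interconnect and PoP have to be recovered through per-GB savings. A minimal break-even sketch; it assumes interconnect and transit charges have already been converted into an effective per-GB rate (transit is often billed on committed or 95th-percentile bandwidth rather than per GB), and the example rates are made up for illustration.

```python
import math


def breakeven_gb(fixed_monthly_cost, default_per_gb, private_per_gb):
    """Monthly volume (GB) above which private egress is cheaper than default egress.

    fixed_monthly_cost: interconnect ports, PoP, and other flat monthly charges.
    default_per_gb / private_per_gb: effective per-GB cost of each path.
    """
    savings_per_gb = default_per_gb - private_per_gb
    if savings_per_gb <= 0:
        return math.inf  # the private path never pays for itself
    return fixed_monthly_cost / savings_per_gb


# Made-up rates for illustration only
print(round(breakeven_gb(1000, 0.05, 0.01)))  # 25000
```

Below the break-even volume, default egress remains the simpler and cheaper choice.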

An increasingly complex future for multi-cloud ops teams

As organizations adopt hybrid, multi-cloud environments, siloed tools will leave network and infrastructure teams with serious blind spots, impairing their ability to identify and troubleshoot problems.

Public cloud and cloud-native application infrastructure have introduced incredible new ways to build and deploy applications (including cloud-native network functions). Along with this convenience has come the need to understand and operate complex virtual networks, service mesh architectures, and hybrid, multi-region, and multi-cloud networks.

To learn even more about mastering the operational aspects of multi-cloud deployments, please download our guide, Network and Application Observability for Multi-Cloud Ops Teams.