Beyond Hadoop

Summary

As the first widely accessible distributed-computing platform for large datasets, Hadoop is great for batch processing data. But when you need real-time answers to questions that can’t be fully defined in advance, the MapReduce architecture can’t deliver them fast enough. In this post we look at where Hadoop falls short, and we explore newer approaches to distributed computing that can deliver the scale and speed required for network analytics.

Clustered computing for real-time Big Data analytics

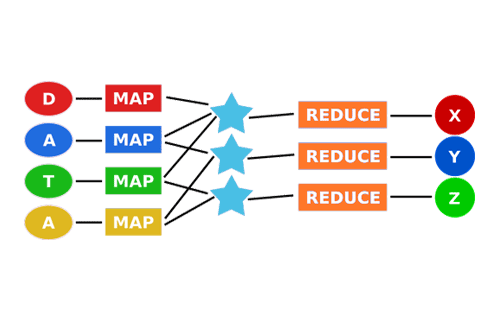

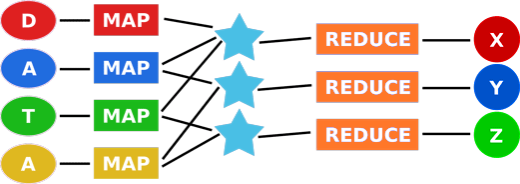

The concept of parallel processing based on a “clustered” multi-computer architecture has a long history dating back at least as far as Gene Amdahl’s work at IBM in the 1960s. But the current epoch of distributed computing is often traced to December of 2004, when Google researchers Jeffrey Dean and Sanjay Ghemawat presented a paper unveiling MapReduce. Developed as a model for “processing and generating large data sets,” MapReduce was built around the core idea of using a map function to process a key/value pair into a set of intermediate key/value pairs, and then a reduce function to merge all intermediate values associated with a given intermediate key. Dean and Ghemawat’s work generated instant buzz and led to the introduction of an open source implementation, Hadoop, in 2006.
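The map and reduce roles described above can be sketched in a few lines of Python. This is a single-process illustration of the programming model only, using word counting as the example task. The names `map_fn`, `reduce_fn`, and `run_mapreduce` are hypothetical, and nothing here reflects how Google's system or Hadoop is actually implemented.

```python
from collections import defaultdict

def map_fn(key, value):
    """Map: turn one input (key, value) pair into intermediate (key, value) pairs."""
    # key is a document name (unused here); value is the document text
    for word in value.split():
        yield word.lower(), 1

def reduce_fn(key, values):
    """Reduce: merge all intermediate values that share one intermediate key."""
    return key, sum(values)

def run_mapreduce(inputs, map_fn, reduce_fn):
    # Group intermediate values by intermediate key (the "shuffle" step)
    groups = defaultdict(list)
    for key, value in inputs:
        for ikey, ivalue in map_fn(key, value):
            groups[ikey].append(ivalue)
    # Apply the reduce function once per intermediate key
    return dict(reduce_fn(k, v) for k, v in groups.items())

docs = [("doc1", "big data big clusters"), ("doc2", "data moves slowly")]
word_counts = run_mapreduce(docs, map_fn, reduce_fn)
```

In a real deployment the map calls and the reduce calls would each run in parallel on many machines; the grouping step in the middle is what moves data between them.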

Because Hadoop was the first efficient distributed-computing platform for large datasets that was accessible to the masses, its initial hoopla was well deserved. It has since gone on to become a key technology for running many web-scale services and products, and has also landed in traditional enterprise and government IT organizations for solving big data problems in finance, demographics, intelligence, and more.

Hadoop and its components are built around several key functionalities. One is the HDFS filesystem, which allows large datasets to be distributed over many nodes. Another is the set of algorithms that “map” (split) a workload over the nodes, so that each node operates on its local piece of the dataset, and “reduce” (aggregate) the results from each piece. Hadoop also provides redundancy and fault tolerance mechanisms across the nodes. The key innovation is that each node operates on locally stored data, eliminating the network bottleneck that constrained traditional high-performance computing clusters.
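The data-locality idea can be illustrated with a toy model in which each node computes a small partial result from its own slice of the data, and only those partial results are combined. The node names, the sample records, and the helper functions below are all hypothetical; real Hadoop jobs are scheduled across HDFS blocks and are far more involved.

```python
from collections import Counter

# Hypothetical cluster: each node stores its own slice of the dataset
node_partitions = {
    "node-a": ["GET", "POST", "GET"],
    "node-b": ["GET", "PUT"],
    "node-c": ["POST", "POST", "GET"],
}

def local_map(records):
    """Runs where the records are stored; returns a small partial result."""
    return Counter(records)

def reduce_partials(partials):
    """Merges the per-node partial results into one answer."""
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total

# Only the compact partial counts need to cross the network, not the raw records
partials = [local_map(records) for records in node_partitions.values()]
method_counts = reduce_partials(partials)
```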

The limits of Hadoop

Hadoop is great for batch processing large source datasets into result sets when your questions are well defined and you know ahead of time how you will use the data. But what if you need fast answers to questions that can’t be completely defined in advance? That’s a situation that’s become increasingly common for data-driven businesses, which need to make critical, time-sensitive decisions informed by large datasets of metrics from their customers, operations, or infrastructure. Often the answer to an initial inquiry leads to additional questions in an iterative cycle of question » answer » refine until the key insight is finally revealed.

The problem for Hadoop users is that the Hadoop architecture doesn’t lend itself to interactive, low-latency, ad hoc queries. So the iterative “rinse and repeat” process required to yield useful insights can take anywhere from hours to days. That’s not acceptable in use cases such as troubleshooting or security, where every minute of query latency means prolonged downtime or poor user experience, either of which can directly impact revenue or productivity.

One approach to this issue is to use Hadoop to pre-calculate a series of result sets that support different classes of questions. This involves pre-selecting various combinations of dimensions/columns from the source data, and collapsing that data into multiple result sets that contain only those dimensions. Known as “multidimensional online analytical processing” (M-OLAP), this approach is sometimes referred to more succinctly as “data cubes.” Relatively fast queries can be asked of the result sets, and the resulting performance is certainly leaps and bounds better than anything available before the advent of big data.
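As a rough sketch of the pre-calculation step, the snippet below collapses a handful of made-up records into one result set per pre-selected dimension combination. The field names, the sample data, and the `build_result_set` helper are assumptions for illustration, not part of any particular M-OLAP product.

```python
from collections import defaultdict

# Hypothetical source records
records = [
    {"src_country": "US", "protocol": "TCP", "port": 443, "bytes": 1200},
    {"src_country": "US", "protocol": "UDP", "port": 53, "bytes": 300},
    {"src_country": "DE", "protocol": "TCP", "port": 443, "bytes": 800},
    {"src_country": "US", "protocol": "TCP", "port": 80, "bytes": 500},
]

def build_result_set(records, dimensions, metric="bytes"):
    """Collapse the source data to the chosen dimensions, summing the metric."""
    result = defaultdict(int)
    for rec in records:
        key = tuple(rec[d] for d in dimensions)
        result[key] += rec[metric]
    return dict(result)

# One precomputed result set per pre-selected combination of dimensions
cube = {
    dims: build_result_set(records, dims)
    for dims in [("src_country",), ("src_country", "protocol")]
}

# A query becomes a fast lookup against a precomputed result set
us_tcp_bytes = cube[("src_country", "protocol")][("US", "TCP")]
```

Note that a question involving `port` cannot be answered from this cube at all, because that dimension was not among the pre-selected combinations.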

While the use of data cubes boosts Hadoop’s utility, it still involves compromise. If the source data contains many dimensions, it’s not feasible to generate and retain result sets for all of the possible combinations. The result sets also need to be continually regenerated to incorporate new source data. And the lag between events and data availability can make it difficult to answer real-time questions. So even with data cubes, Hadoop’s value in time-dependent applications is inherently constrained.
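A quick calculation shows why covering every combination is impractical. The sketch below assumes, for simplicity, that each non-empty subset of dimensions would need its own result set, which gives 2^n - 1 result sets for n dimensions. It ignores the size of each result set, which also grows with the cardinality of the dimensions involved.

```python
from itertools import combinations

def count_result_sets(num_dimensions):
    """Number of non-empty dimension subsets: 2^n - 1."""
    return 2 ** num_dimensions - 1

def enumerate_combinations(dimensions):
    """List every non-empty combination of the given dimension names."""
    return [
        combo
        for size in range(1, len(dimensions) + 1)
        for combo in combinations(dimensions, size)
    ]

# Hypothetical dimension names, for illustration only
dims = ["src_ip", "dst_ip", "protocol", "port"]
all_combos = enumerate_combinations(dims)

for n in (4, 10, 20, 30):
    print(f"{n} dimensions -> {count_result_sets(n):,} possible result sets")
```

Four dimensions yield 15 combinations, while 20 dimensions already yield more than a million.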

Big Data in real time

Google itself ran into the need for interactive analysis and answered it with Dremel, an ad hoc query system that it described publicly in the 2010 paper “Dremel: Interactive Analysis of Web-Scale Datasets.” Dremel’s results are truly impressive. It can execute full-scan queries over billions of rows in seconds to tens of seconds, regardless of the dimensionality (number of columns) or cardinality (uniqueness of values within a column), even when those queries contain complex conditions like regex matches. And since the queries operate directly on the source data, there is no data availability lag; the most recently appended data is available for every query.
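To make the contrast with precomputed result sets concrete, here is a toy full-scan query over column-oriented data with a regex condition. It is a single-process sketch under invented data and names (`table`, `scan`), and it is not Dremel's implementation; a system of that kind spreads such a scan across many disks and machines in parallel.

```python
import re

# Hypothetical column-oriented table: one list per column, aligned by row index
table = {
    "url": ["/api/v1/users", "/static/logo.png", "/api/v2/orders", "/api/v1/items"],
    "status": [200, 200, 500, 404],
    "bytes": [512, 20480, 128, 256],
}

def scan(table, filter_column, pattern, sum_column):
    """Full scan: test every row against a regex, summing a metric for matches."""
    regex = re.compile(pattern)
    matched_rows = 0
    total = 0
    # Only the two columns named in the query are read; "status" is never touched
    for value, metric in zip(table[filter_column], table[sum_column]):
        if regex.search(value):
            matched_rows += 1
            total += metric
    return matched_rows, total

# The question is defined at query time; nothing was pre-aggregated for it
rows, total_bytes = scan(table, "url", r"^/api/v\d+/", "bytes")
```

Because the scan reads the source rows directly, a newly appended row is visible to the very next query, and any column can serve as a filter without advance planning.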

Because massively parallel disk I/O is a key prerequisite for this level of performance, a significant hardware footprint is required, with a price tag higher than many organizations would be willing to pay. But when such a system is offered as multi-tenant SaaS, the cost per customer becomes quite compelling, and any given query still gets the performance of the entire cluster.

Post-Hadoop NetFlow analytics

Dremel proved that it was possible to create a real-world solution enabling ad hoc querying at massive scale. That’s a game-changer for real-time applications such as network analytics. Flow records — NetFlow, sFlow, IPFIX, etc. — on a decent-sized network add up fast, and Hadoop-based systems for storing and querying those records haven’t been able to provide a detailed, real-time picture of network activity. Here at Kentik, however, we’ve drawn on many of the same concepts employed in Dremel to build our microservice-based platform for flow-based traffic analysis. Called Kentik Detect, this solution enables us to offer customers an analytical engine that’s not only powerful and cost-effective but also maintains real-time performance across web-scale datasets, a feat that is out of reach for systems built around Hadoop. (For more on how we make it work, see Inside the Kentik Data Engine.)

The practical benefit of Kentik’s post-Hadoop approach is that network operators can perform, in real time, a full range of iterative analytical tasks that previously took too long to be of value. You can see aggregate traffic volume across a multi-terabit network and then drill down to individual IPs and conversations. You can filter and segment your network traffic by any combination of dimensions on the fly. And you can alert on needle-in-the-haystack events within millions of flows per second. Kentik Detect helps network operators to uncover anomalies, plan for the future, and better understand both their networks and their business. Request a demo, or experience Kentik Detect’s performance for yourself by starting a free trial today.